The IBEX 35 index

In this article, Nithisha CHALLA (ESSEC Business School, Grande Ecole Program – Master in Management, 2021-2023) presents the IBEX 35 index representing the Spanish equity market and details its characteristics.

The IBEX 35 index

The IBEX 35 is the benchmark stock market index of the Bolsa de Madrid, Spain's principal stock exchange. The index was launched on January 14, 1992 and is managed by Bolsas y Mercados Españoles (BME), the company that runs the Spanish stock exchanges.

The index is made up of the 35 most liquid and best-capitalized companies traded on the Bolsa de Madrid. Constituents are selected on the basis of trading volume, liquidity, and free-float market capitalization. The index covers a wide range of industries, including consumer goods, energy, finance, and telecommunications.

The IBEX 35 index is a free-float market-capitalization-weighted index: the weight of each stock is proportional to its market capitalization adjusted for the fraction of shares available for trading (the free float). As a result, larger companies have more influence on the movements of the index than smaller ones.

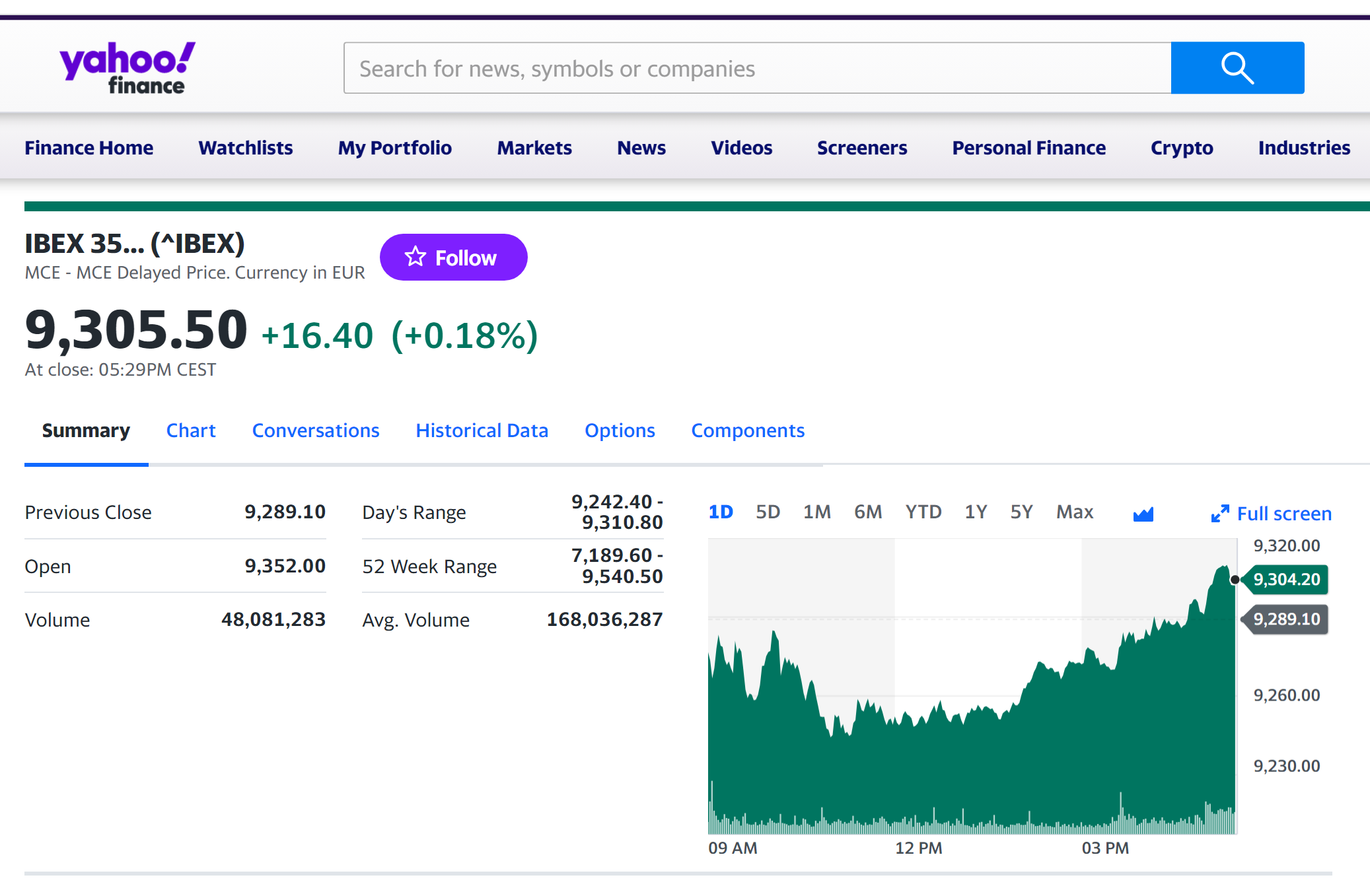

Like other major stock market indices, the IBEX 35 index is widely available on trading platforms and financial websites. Investors and analysts around the world follow it closely as an indicator of the performance of the Spanish economy and, more broadly, of economic conditions in the European Union.

The ticker symbol used in the financial industry for the IBEX 35 index is “IBEX”.

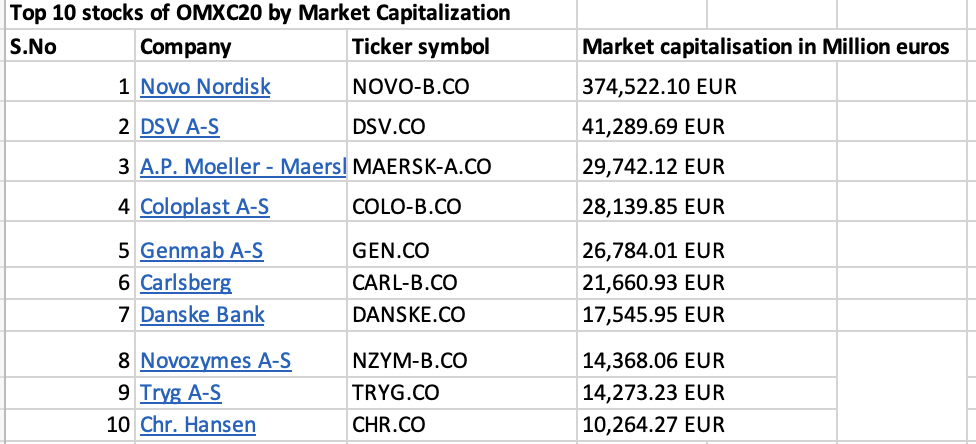

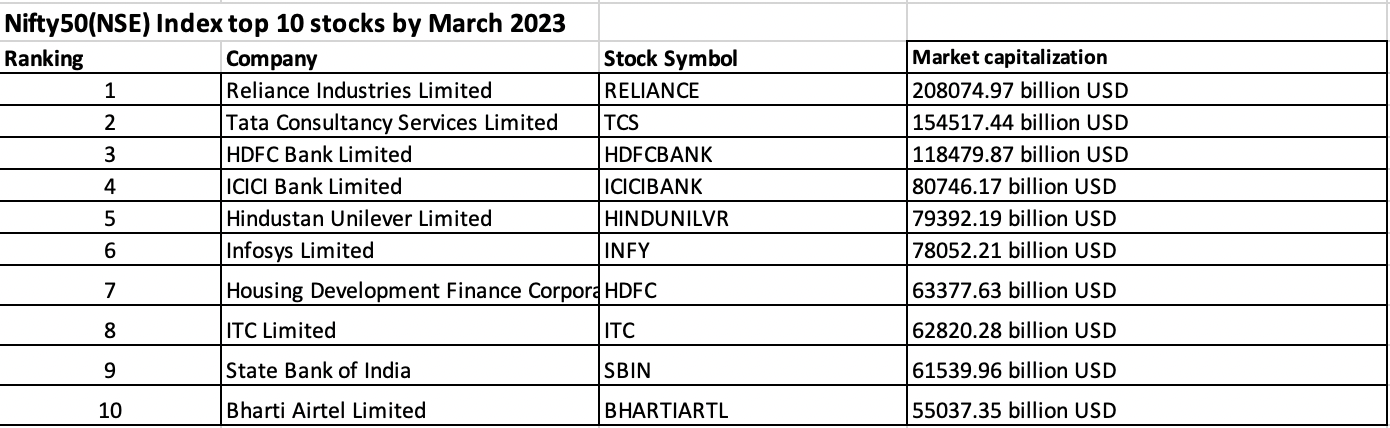

Table 1 below gives the Top 10 stocks in the IBEX 35 index in terms of market capitalization as of January 31, 2023.

Table 1. Top 10 stocks in the IBEX 35 index.

Source: computation by the author (data: Yahoo! Finance website).

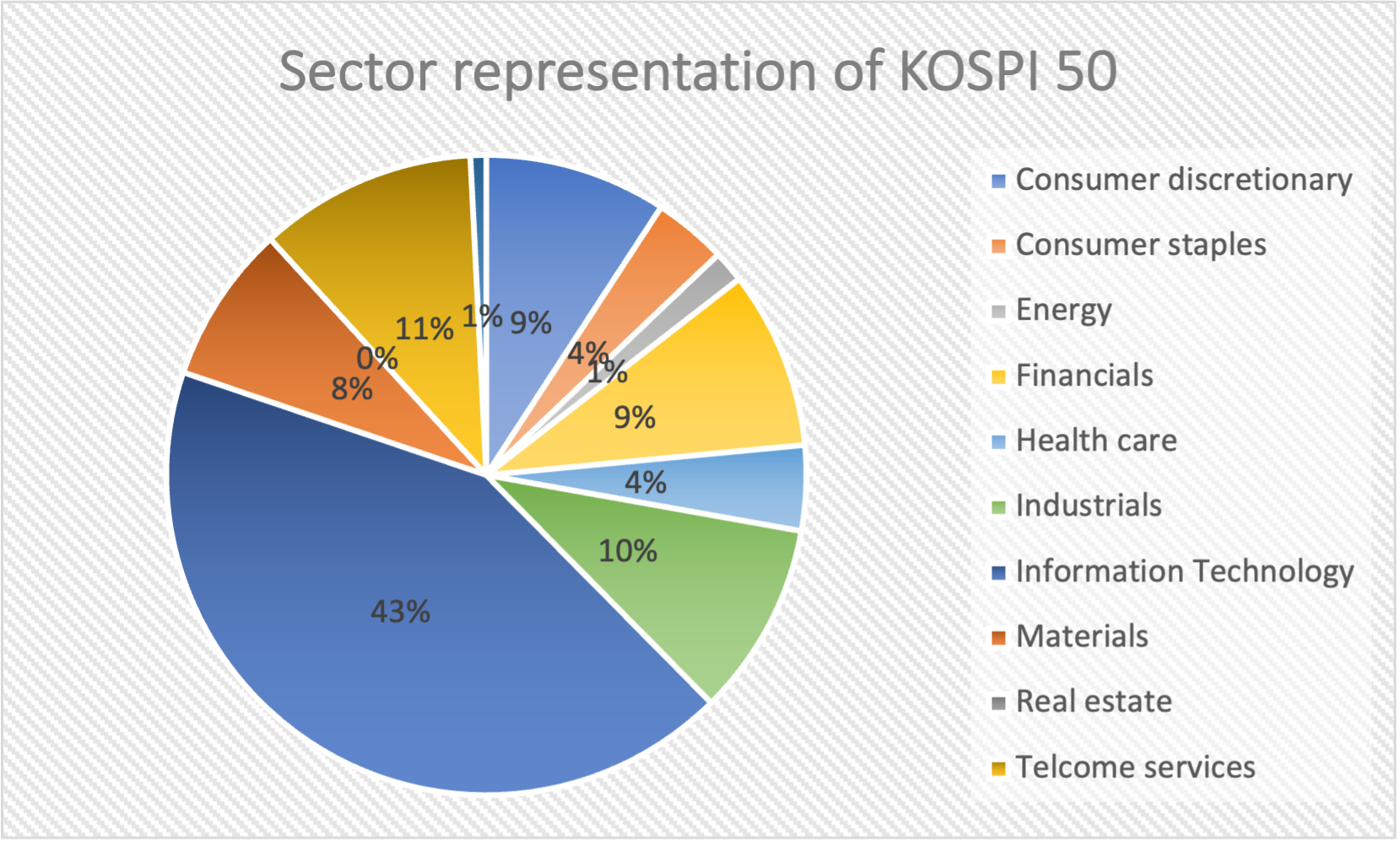

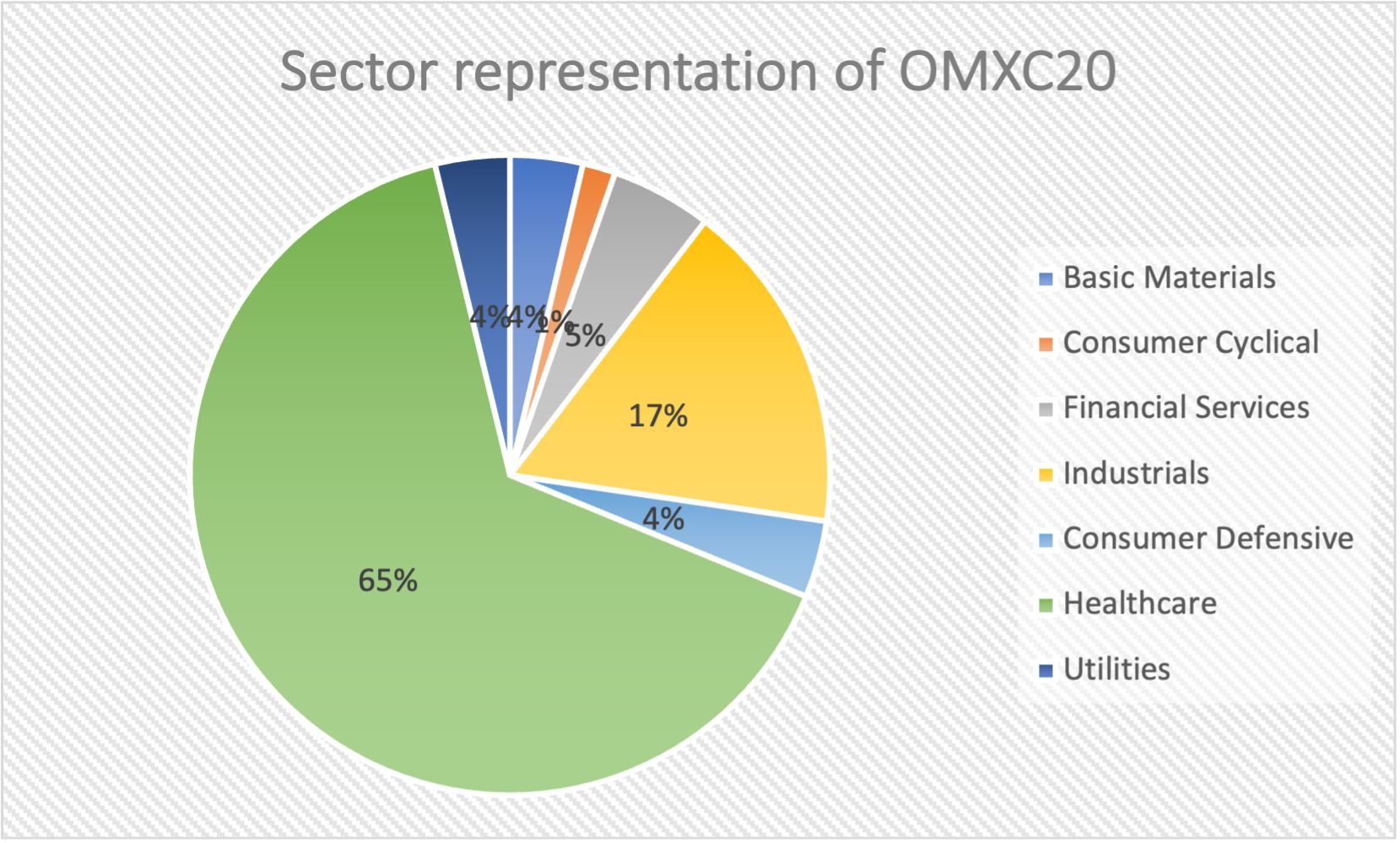

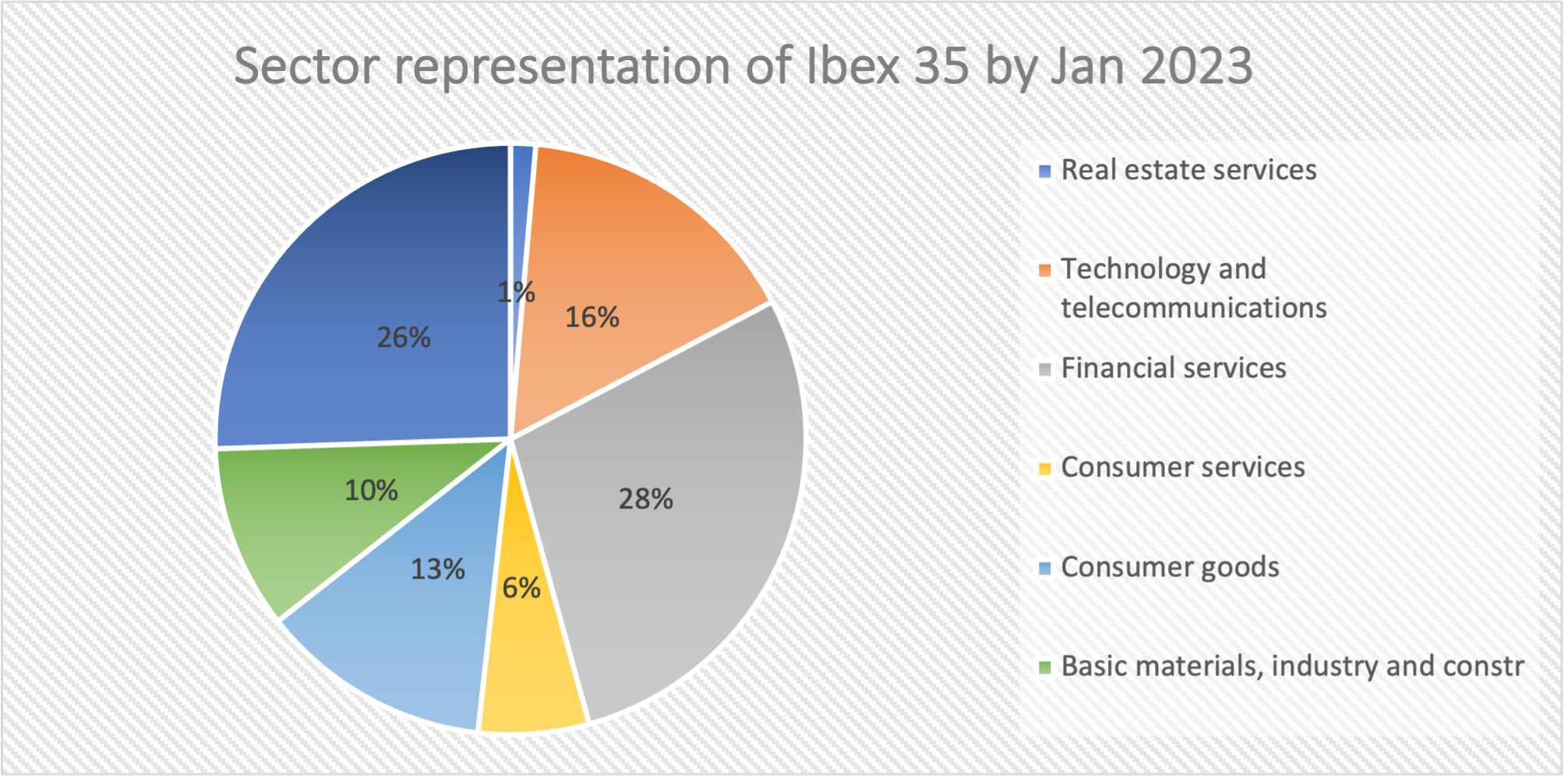

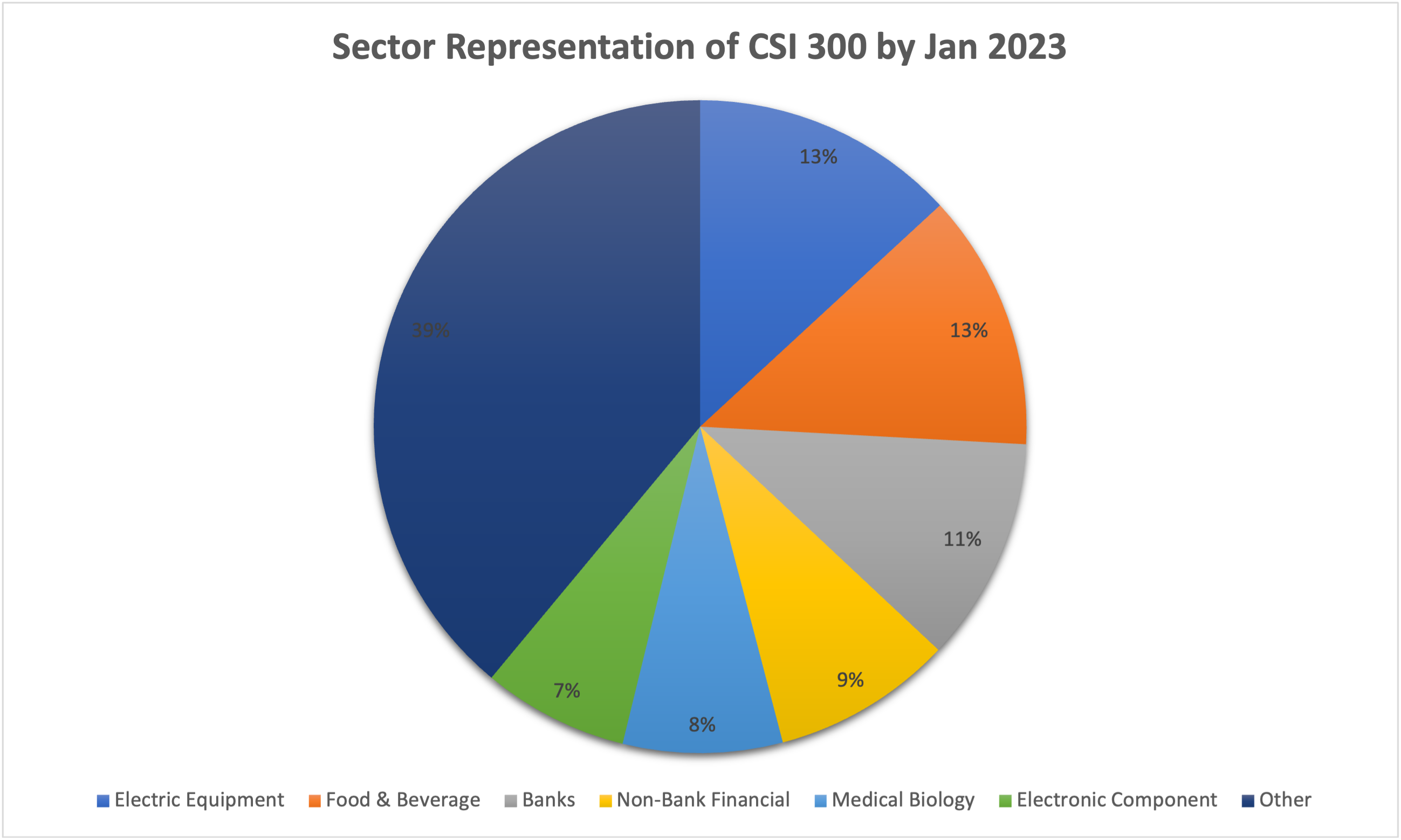

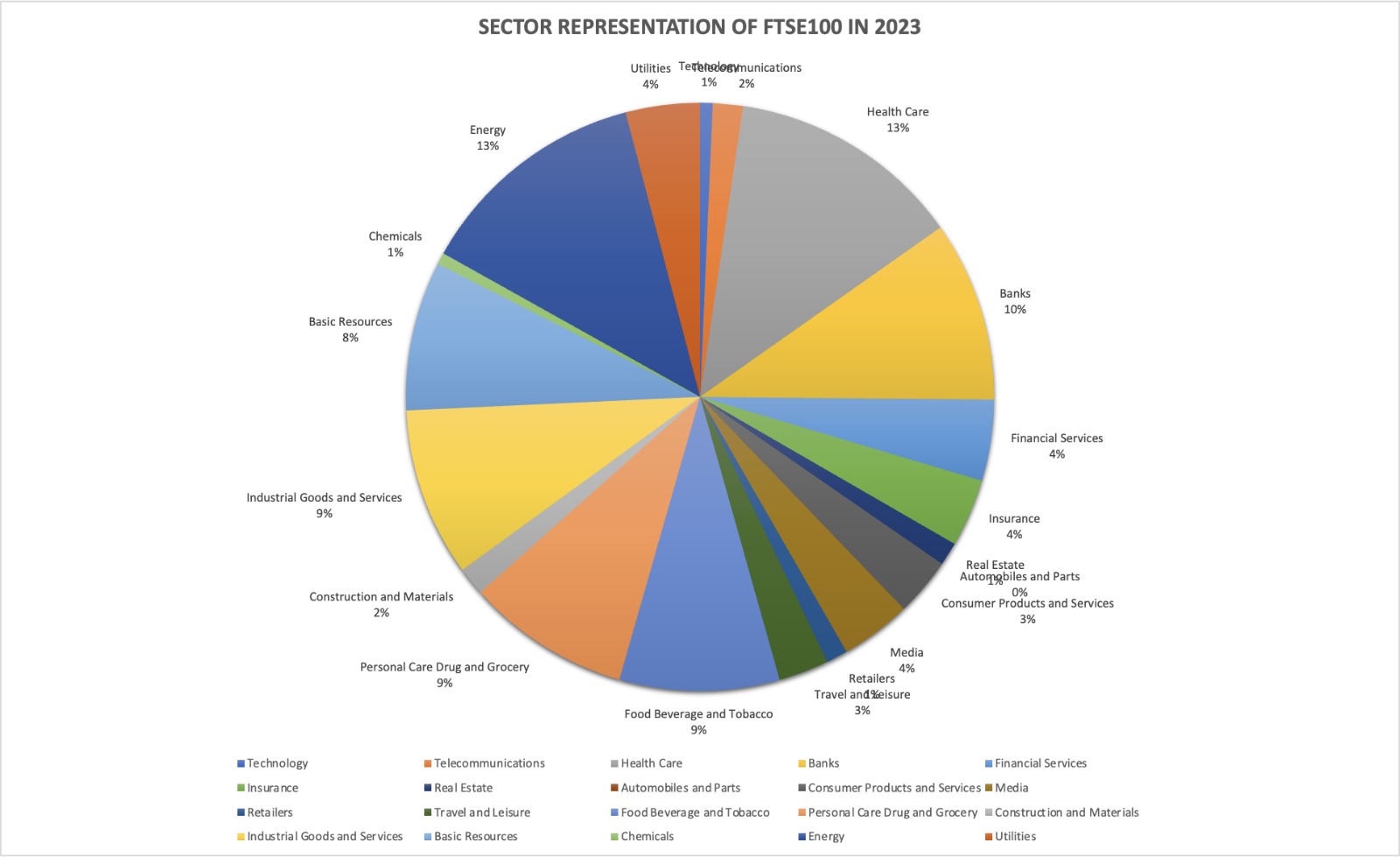

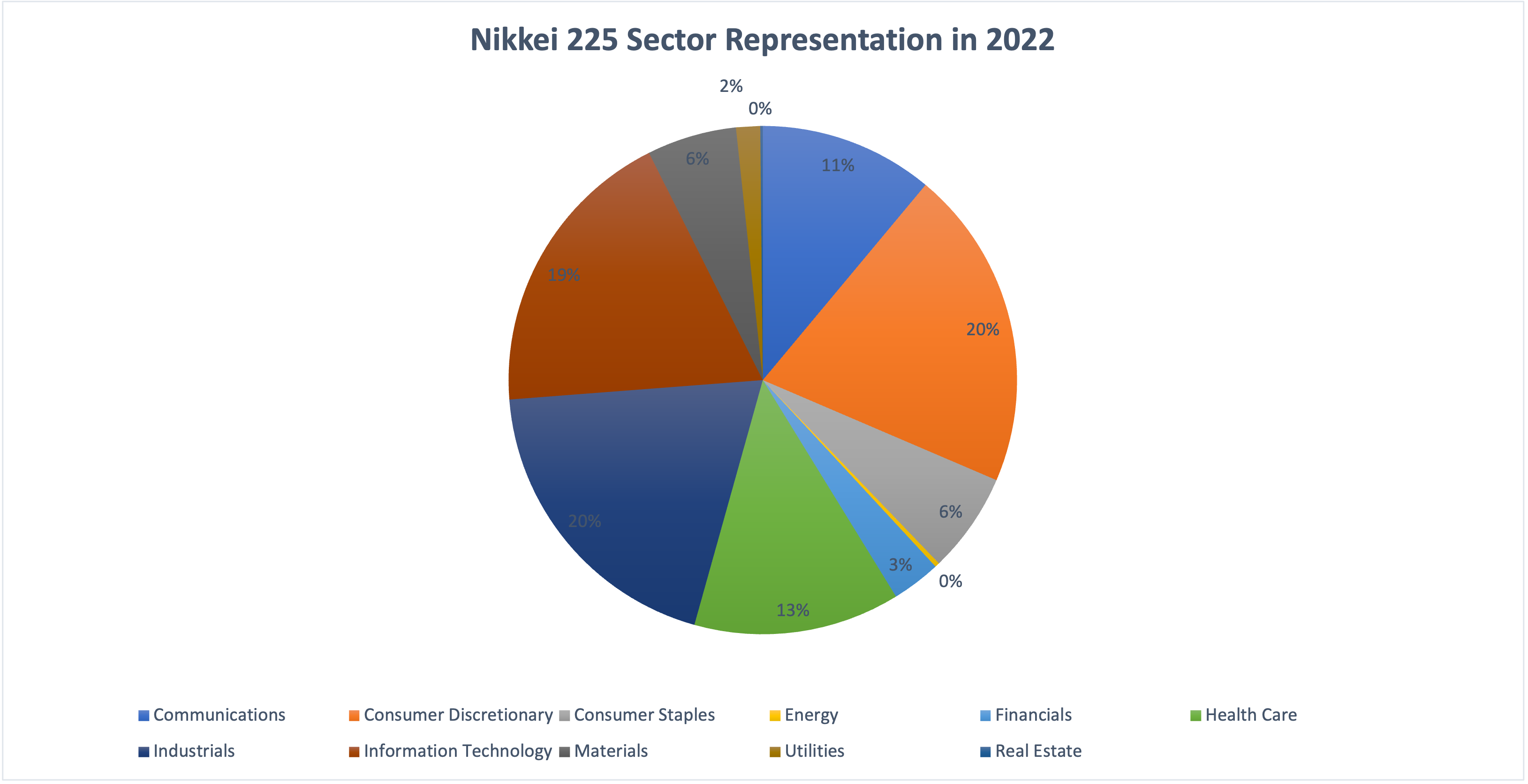

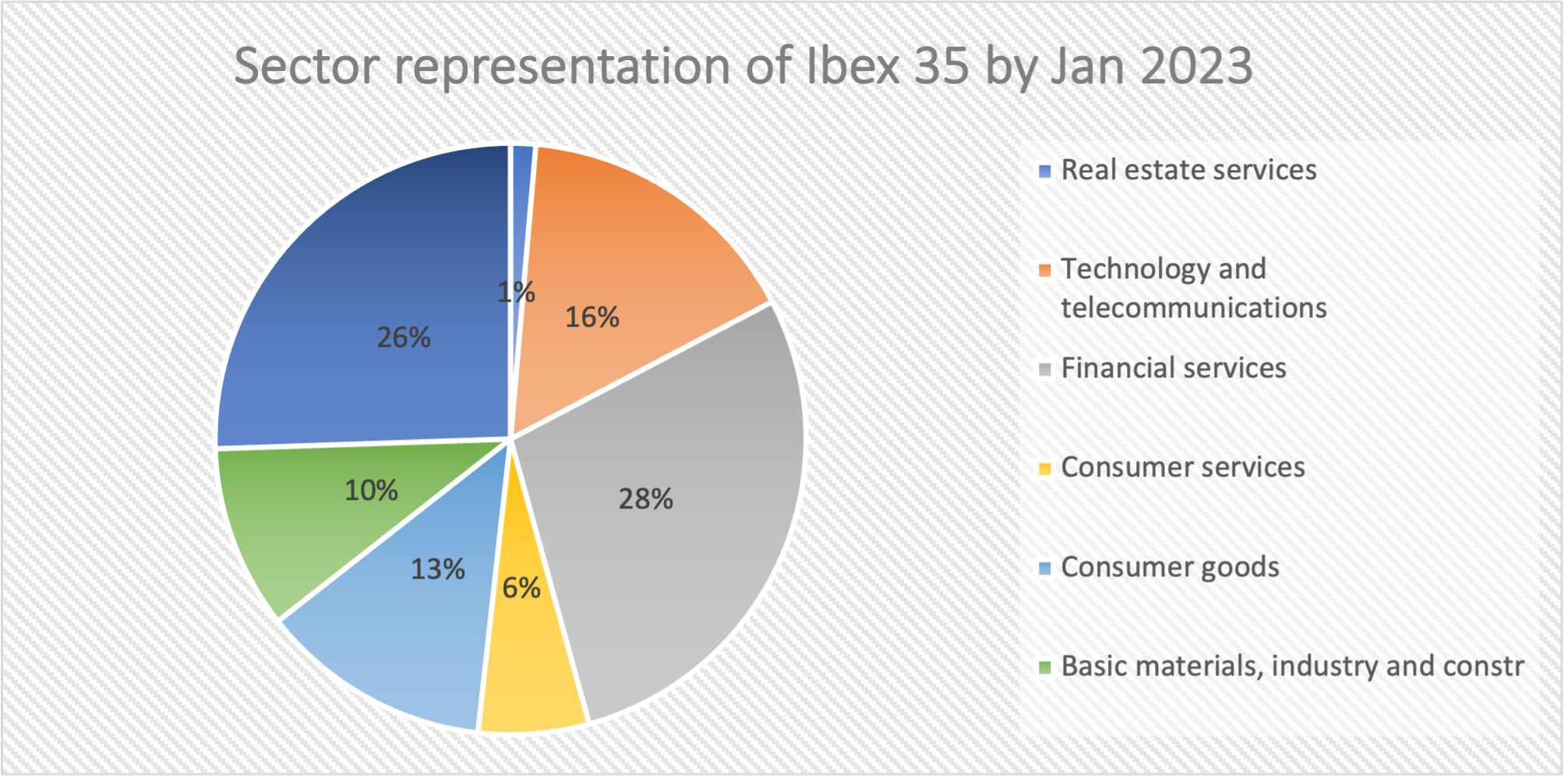

Table 2 below gives the sector representation of the IBEX 35 index in terms of number of stocks and market capitalization as of January 31, 2023.

Table 2. Sector representation in the IBEX 35 index.

Source: computation by the author (data: Yahoo! Finance website).

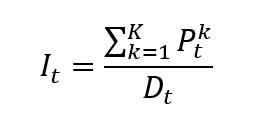

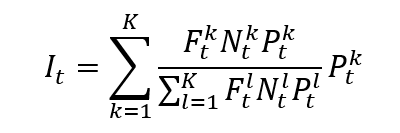

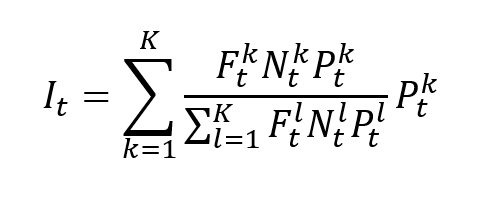

Calculation of the IBEX 35 index value

As a free-float market-capitalization-weighted index, the IBEX 35 index is calculated from the float-adjusted market capitalization of each of its constituents. To ensure that the index accurately captures the performance of the Spanish stock market, Bolsas y Mercados Españoles (BME), the operator of the Spanish stock exchanges, reviews and rebalances the index twice a year. The constituents are chosen by the Technical Advisory Committee of BME, which takes into account criteria such as liquidity, market capitalization, and trading volume.

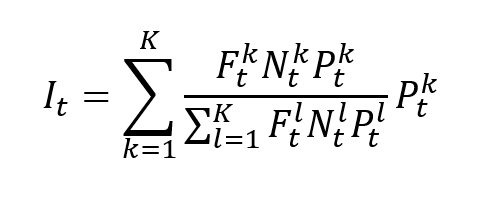

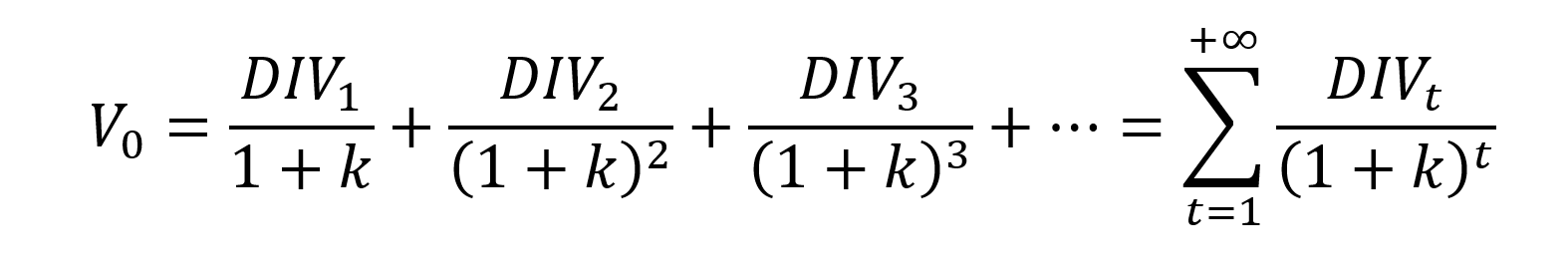

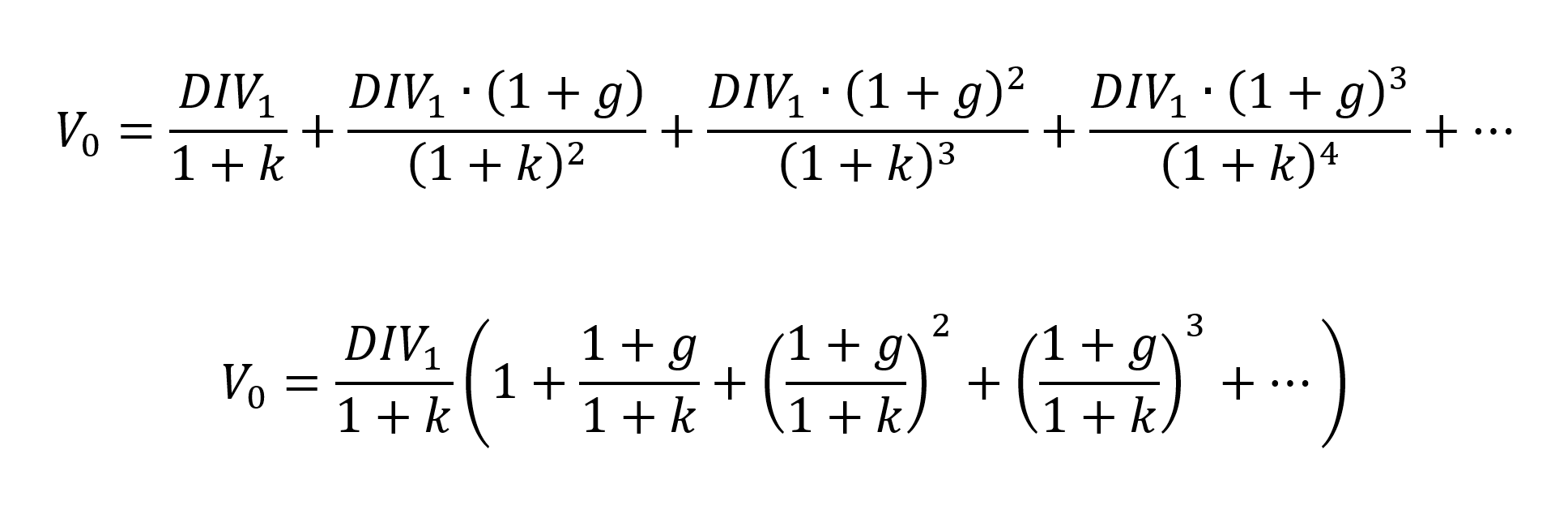

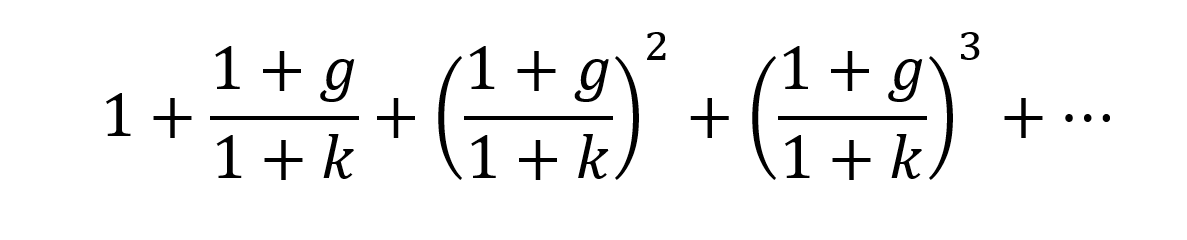

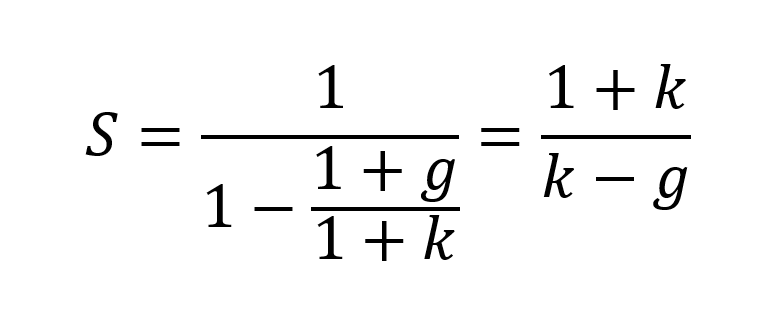

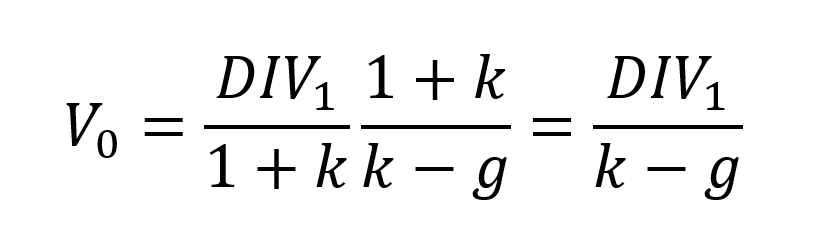

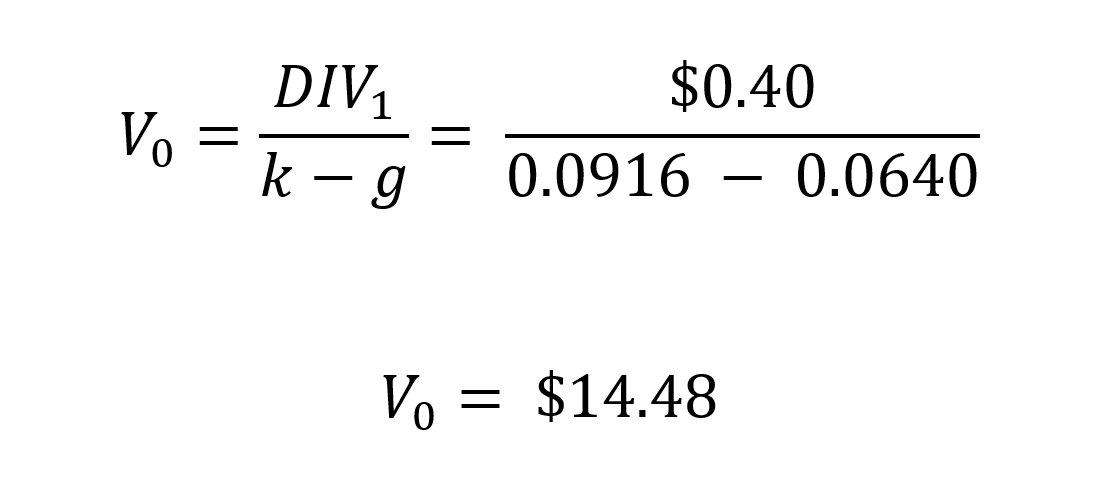

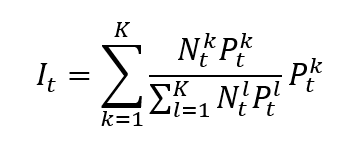

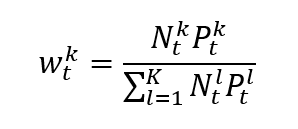

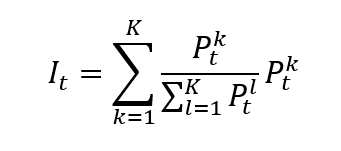

The formula to compute the IBEX 35 index is given by

$$
I_t = \sum_{k=1}^{K} P_{k,t} \, N_{k,t} \, F_{k,t}
$$

where $I_t$ is the index value, $k$ a given asset, $K$ the number of assets in the index, $P_{k,t}$ the market price of asset $k$, $N_{k,t}$ the number of issued shares of asset $k$, $F_{k,t}$ the float factor of asset $k$, and $t$ the time of calculation of the index.
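To make the formula concrete, here is a minimal Python sketch that sums the float-adjusted market capitalizations (price × shares × float factor) of a hypothetical three-stock basket. The `Constituent` class, the stock names and all the numbers are illustrative assumptions, not IBEX 35 data. In practice, index providers typically also scale this sum by a divisor so that the index starts from a base value and does not jump when constituents or share counts change; that step is left out here.

```python
from dataclasses import dataclass


@dataclass
class Constituent:
    """One index member (hypothetical data structure for illustration)."""
    name: str
    price: float         # P_{k,t}: market price of asset k at time t
    shares: float        # N_{k,t}: number of issued shares of asset k
    float_factor: float  # F_{k,t}: fraction of shares available to the public, in [0, 1]


def float_adjusted_cap(c: Constituent) -> float:
    """Float-adjusted market capitalization P * N * F of one constituent."""
    return c.price * c.shares * c.float_factor


def index_value(constituents: list[Constituent]) -> float:
    """Raw index level I_t: sum of float-adjusted capitalizations (no divisor applied)."""
    return sum(float_adjusted_cap(c) for c in constituents)


# Hypothetical three-stock basket
basket = [
    Constituent("A", price=10.0, shares=1_000, float_factor=0.5),
    Constituent("B", price=20.0, shares=500, float_factor=1.0),
    Constituent("C", price=5.0, shares=2_000, float_factor=0.5),
]
print(index_value(basket))  # 20000.0
```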

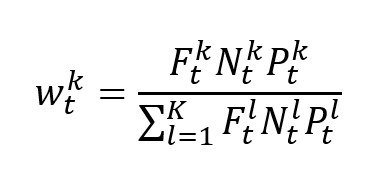

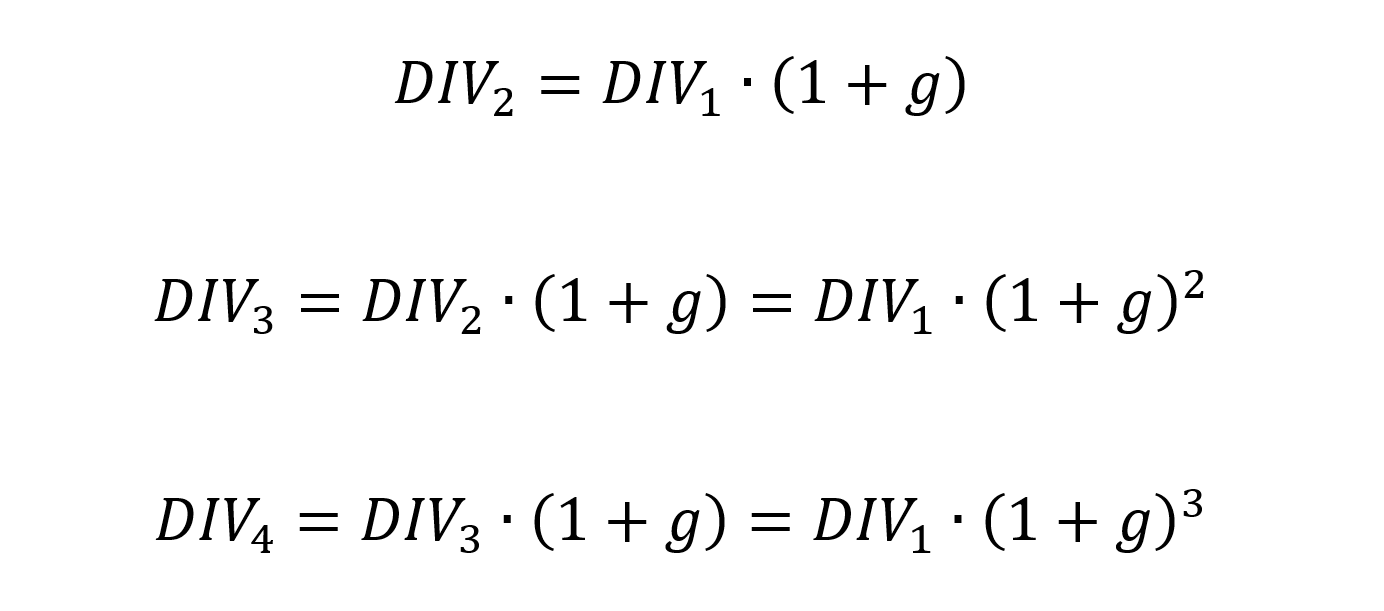

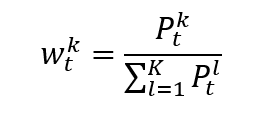

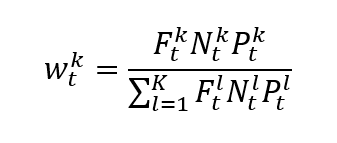

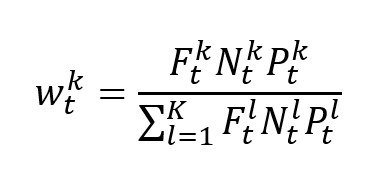

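In a float-adjusted market-capitalization-weighted index, the weight of asset $k$ at time $t$ is given by

$$
w_{k,t} = \frac{P_{k,t} \, N_{k,t} \, F_{k,t}}{\sum_{j=1}^{K} P_{j,t} \, N_{j,t} \, F_{j,t}}
$$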
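In words, each weight is the asset's float-adjusted market capitalization divided by the sum of the float-adjusted capitalizations of all K assets, so the weights add up to one. A minimal sketch, using hypothetical capitalizations rather than actual IBEX 35 figures:

```python
def index_weights(caps: dict[str, float]) -> dict[str, float]:
    """Weight w_k = cap_k / sum of caps, where cap_k = P_k * N_k * F_k."""
    total = sum(caps.values())
    if total <= 0:
        raise ValueError("total float-adjusted capitalization must be positive")
    return {name: cap / total for name, cap in caps.items()}


# Hypothetical float-adjusted capitalizations (P * N * F)
caps = {"A": 5_000.0, "B": 10_000.0, "C": 5_000.0}
weights = index_weights(caps)
print(weights)  # {'A': 0.25, 'B': 0.5, 'C': 0.25}

# A 1% rise in B alone, other prices unchanged, lifts the raw index by w_B * 1%
index_move = weights["B"] * 0.01
```

The last line shows why larger companies drive the index more: with these hypothetical weights, a 1% move in the stock weighing 50% moves the raw index by 0.5%, while the same move in a 25% stock moves it by only 0.25%.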

Use of the IBEX 35 index in asset management

The IBEX 35 index serves as a benchmark for assessing the performance of the Spanish stock market. Because it is a widely used indicator of that performance, it supports important asset management tasks such as passive investing, risk evaluation, asset allocation, and portfolio management. However, since it includes only the 35 most liquid Spanish stocks, it does not accurately reflect the performance of the whole market or of every sector. When evaluating the performance of the Spanish equity market, investors should therefore also consider other indices such as the FTSE Spain Index and the MSCI Spain Index.

Benchmark for equity funds

Investors frequently use the IBEX 35 index as a benchmark for equity funds invested in Spain. In that case, it is important to remember that the index includes only 35 of the largest and most actively traded companies listed on the Spanish stock exchange. It may therefore not represent the whole Spanish market, since many small and mid-cap Spanish companies are not included. The choice of benchmark ultimately depends on the investment objectives and strategy of the fund in question.

Financial products around the IBEX 35 index

Financial products based on the IBEX 35 index give investors access to the Spanish stock market, portfolio diversification, and the potential to profit from market fluctuations.

Some of the main financial products related to the IBEX 35 index are:

- Exchange-Traded Funds (ETFs): ETFs are traded like stocks and give investors exposure to the IBEX 35 index. ETFs that track the IBEX 35 index include the iShares Ibex 35 UCITS ETF and the Amundi ETF Ibex 35.

- Options and Futures Contracts: A futures contract commits the buyer and the seller to trade the IBEX 35 index at a predetermined price on a future date, while an option gives its holder the right, but not the obligation, to buy (call) or sell (put) the index at a predetermined price. Investors typically use these contracts to hedge against market volatility, to speculate on the index's future performance, or to generate income through trading strategies.

- Mutual Funds and Index Funds: Some mutual funds and index funds concentrate on investing in businesses that are part of the IBEX 35 index or seek to replicate the performance of the index by acquiring the same stocks that comprise the index.
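The difference between a futures contract and an option can be illustrated with payoffs at expiry. The sketch below is an illustration only: the contract multiplier (euros per index point), the prices and the strike are hypothetical parameters, not the actual specifications of IBEX 35 derivatives, and option premiums, fees and margins are ignored.

```python
def futures_pnl(entry: float, settlement: float, multiplier: float, contracts: int) -> float:
    """P&L of a futures position (positive contracts = long, negative = short)."""
    return (settlement - entry) * multiplier * contracts


def call_payoff(index_level: float, strike: float) -> float:
    """Payoff at expiry, in index points, of a long call (right to buy)."""
    return max(index_level - strike, 0.0)


def put_payoff(index_level: float, strike: float) -> float:
    """Payoff at expiry, in index points, of a long put (right to sell)."""
    return max(strike - index_level, 0.0)


MULTIPLIER = 10.0  # hypothetical euros per index point

# A short futures position gains when the index falls, offsetting losses on a long portfolio
hedge_pnl = futures_pnl(entry=9_000.0, settlement=8_500.0, multiplier=MULTIPLIER, contracts=-2)
# A put pays off when the index ends below the strike; a call pays nothing in that case
print(hedge_pnl, put_payoff(8_500.0, 9_000.0), call_payoff(8_500.0, 9_000.0))
```

The futures position is symmetric (gains and losses are both passed on in full), whereas an option payoff is floored at zero, which is what the holder pays the premium for.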

Historical data for the IBEX 35 index

How to get the data?

The IBEX 35 index is the main benchmark of the Spanish stock market, and its historical data can easily be downloaded from the internet.

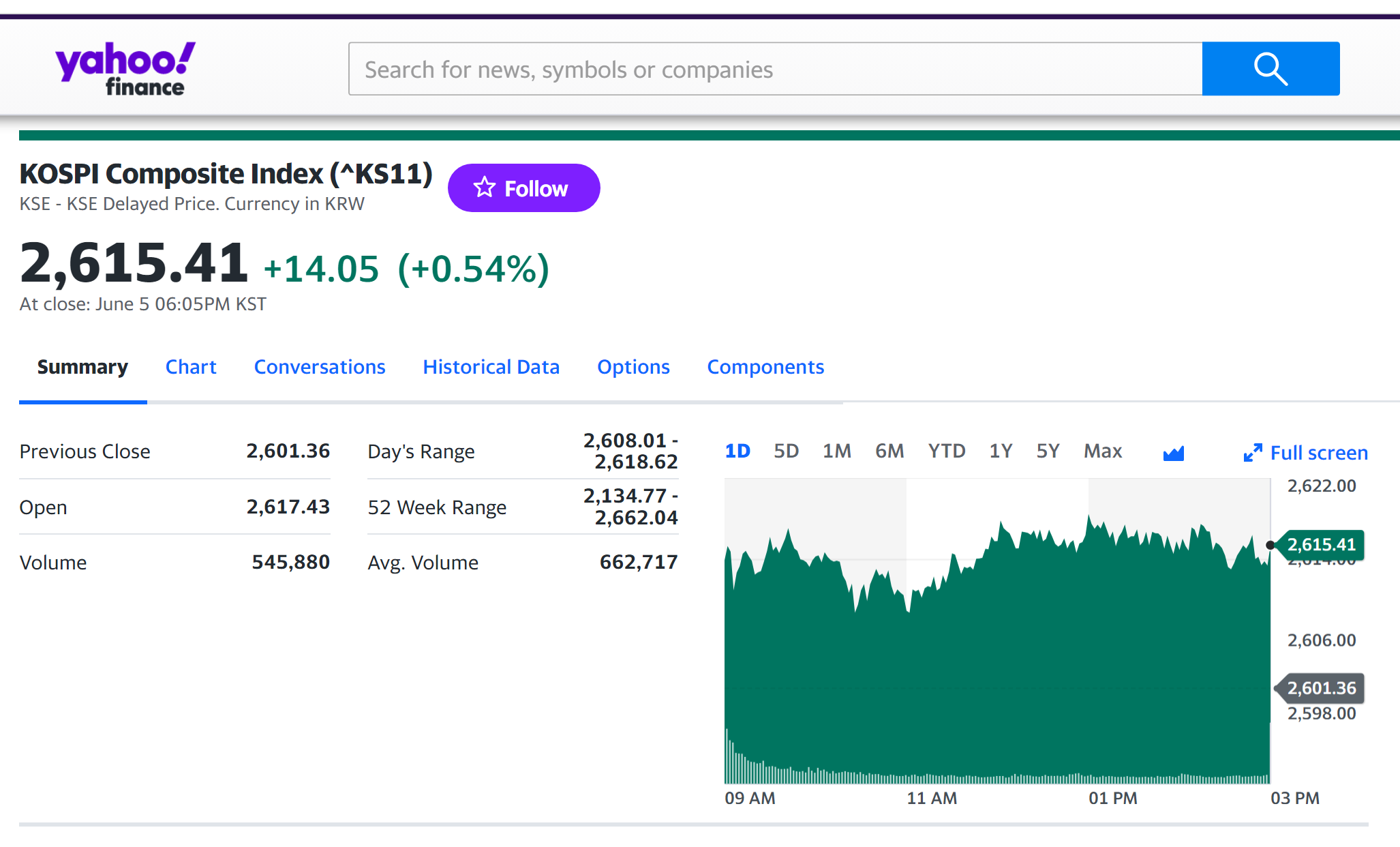

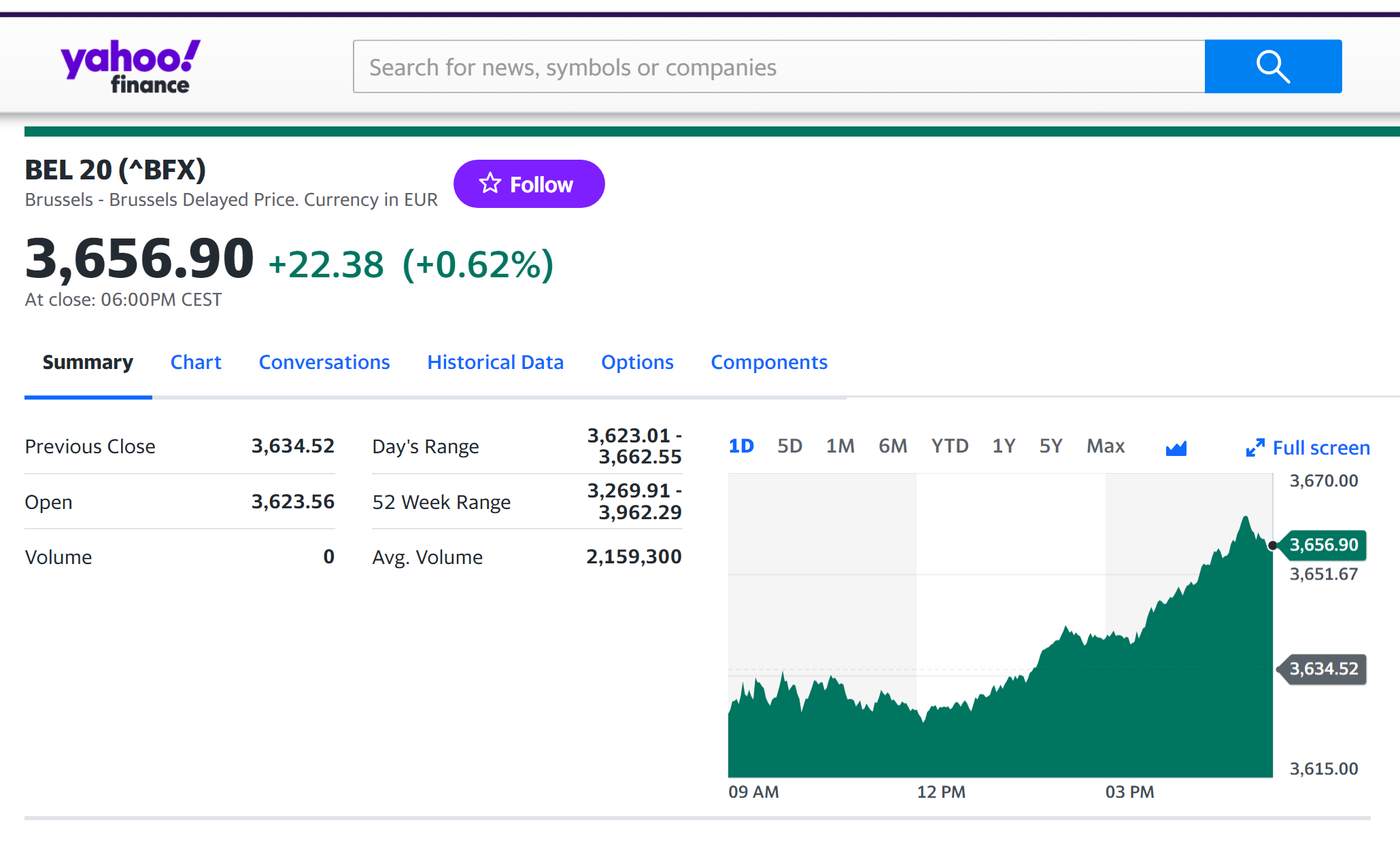

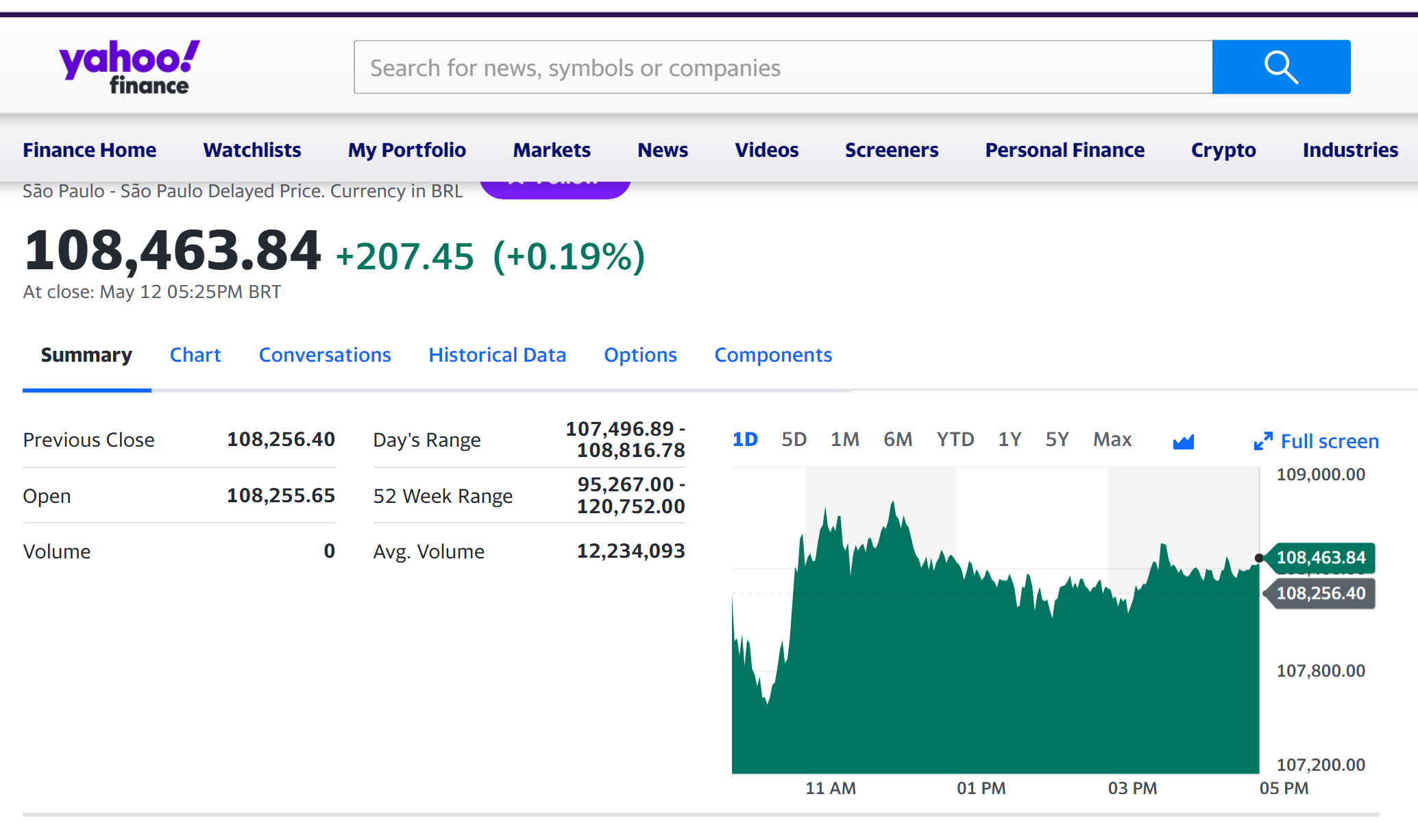

For example, you can download data for the IBEX 35 index from July 12, 1993 on Yahoo! Finance (the Yahoo! code for IBEX 35 index is ^IBEX).

Source: Yahoo! Finance.

You can also download the same data from a Bloomberg terminal.

R program

The R program below written by Shengyu ZHENG allows you to download the data from Yahoo! Finance website and to compute summary statistics and risk measures about the IBEX 35 index.

Data file

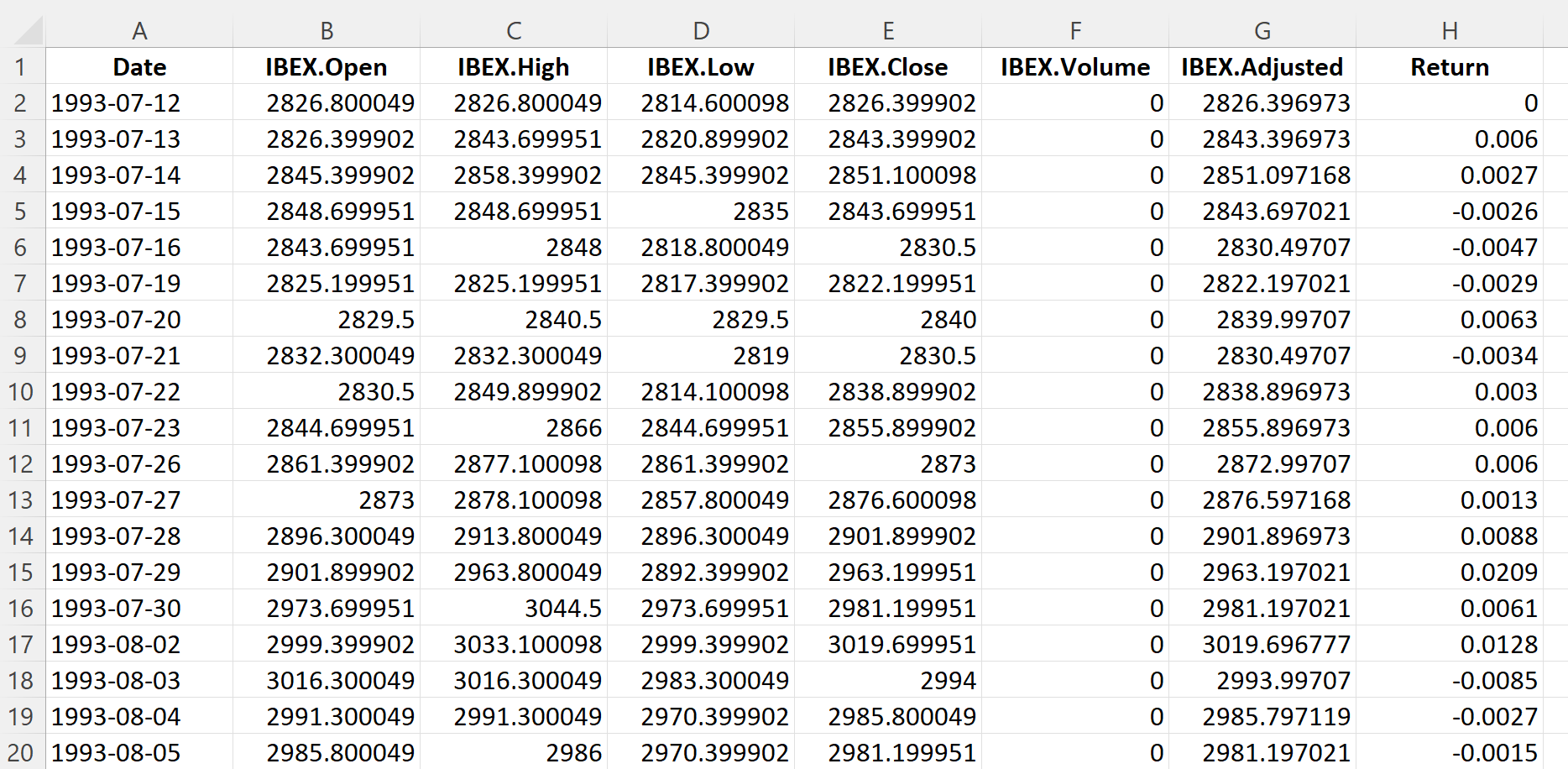

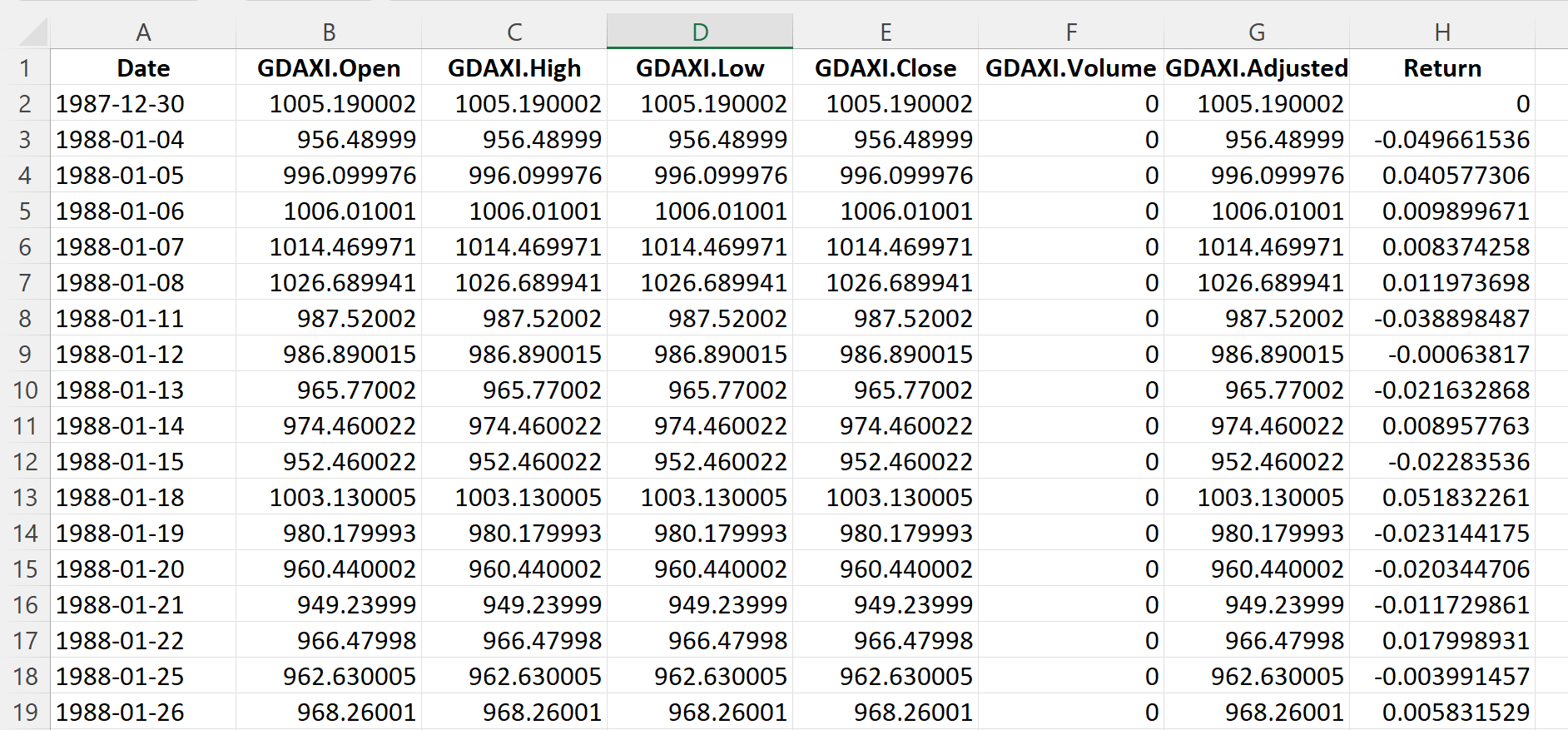

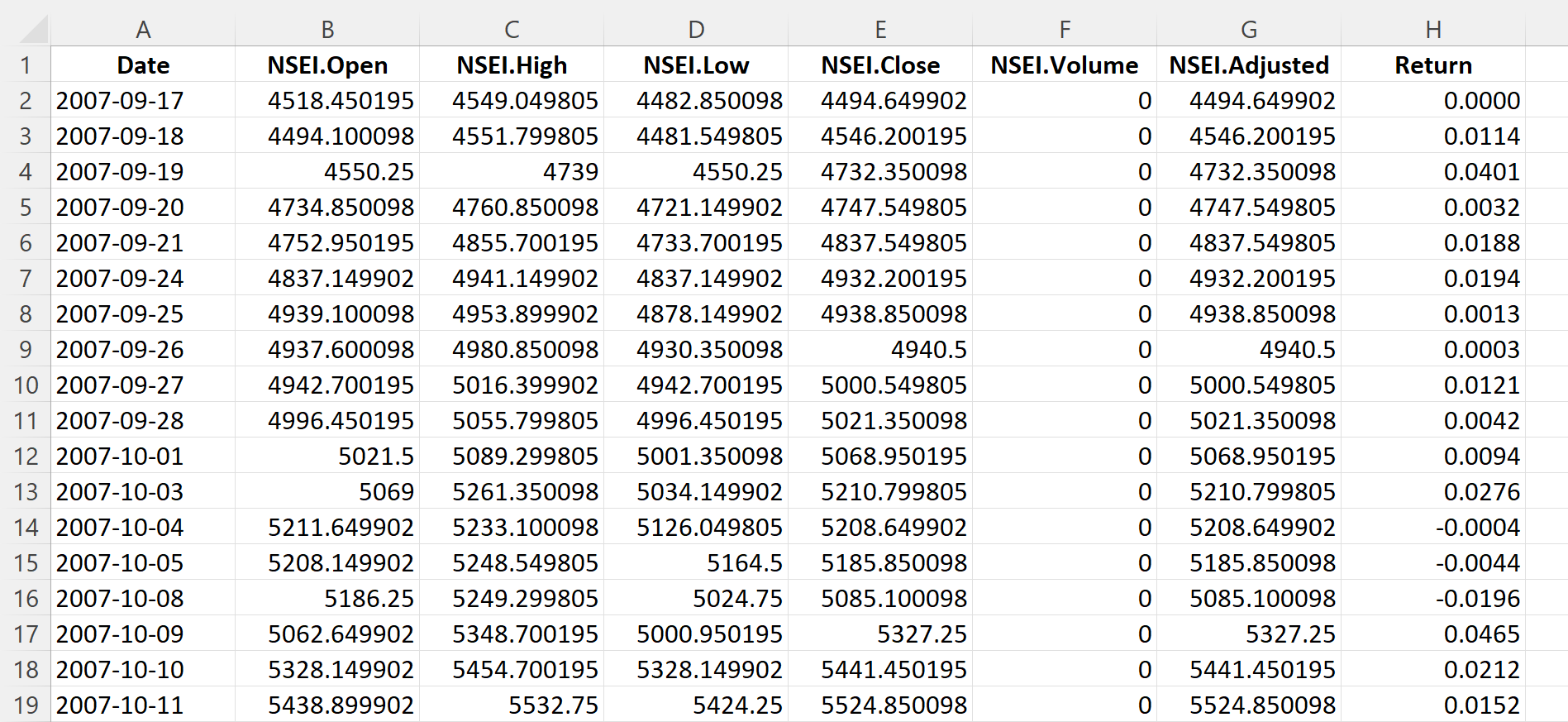

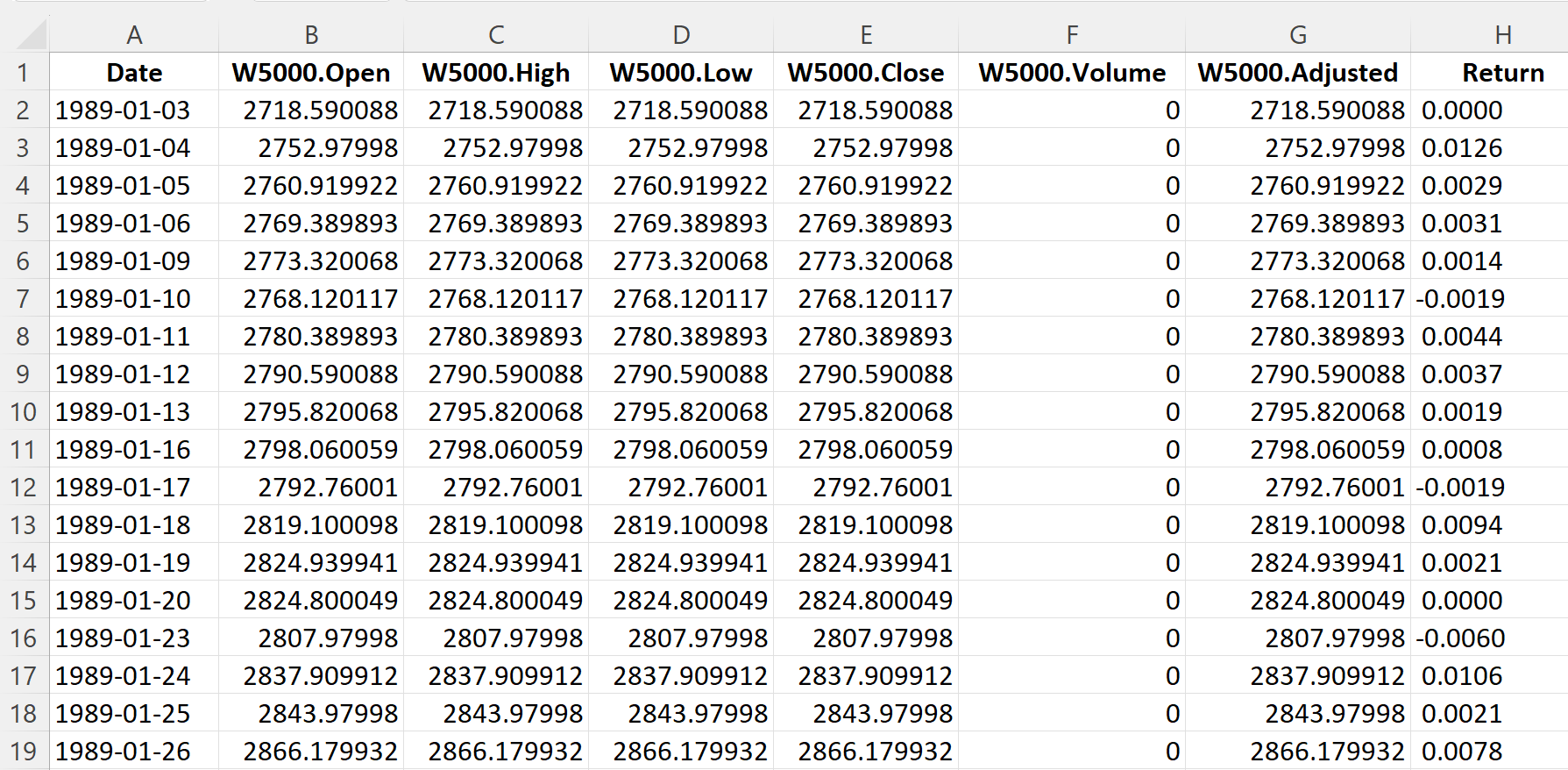

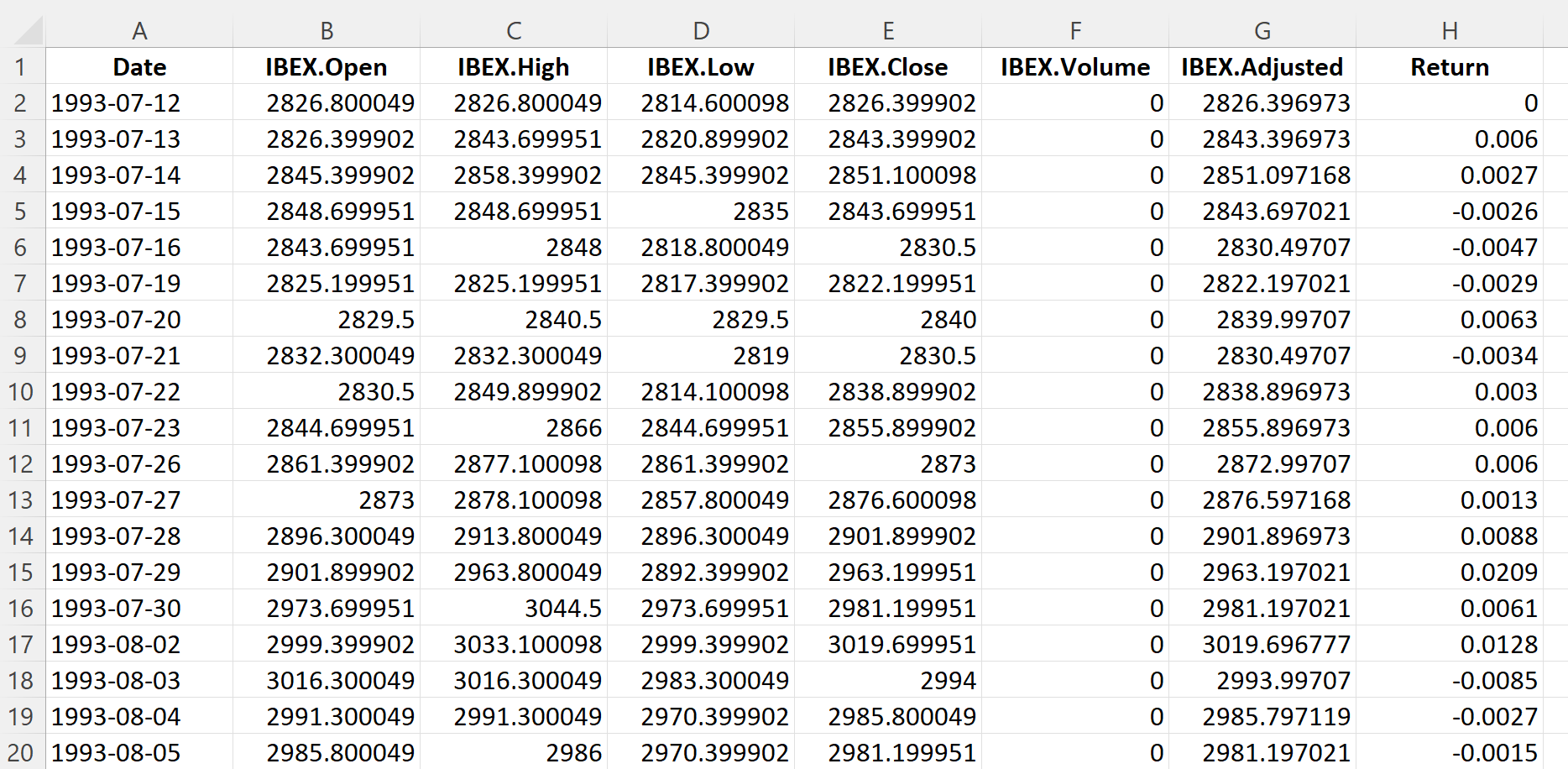

The R program that you can download above allows you to download the data for the IBEX 35 index from the Yahoo! Finance website. The database starts on July 12, 1993. It also computes the returns (logarithmic returns) from closing prices.
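The logarithmic return on day t is $R_t = \ln(P_t / P_{t-1})$, where $P_t$ is the closing price. Below is a minimal Python sketch of that step (not the author's R code), assuming the closing prices are already sorted by date and that rows with missing prices have been removed; the prices used are hypothetical.

```python
import math


def log_returns(closes: list[float]) -> list[float]:
    """Daily log returns R_t = ln(P_t / P_{t-1}) from consecutive closing prices."""
    if any(p <= 0 for p in closes):
        raise ValueError("closing prices must be strictly positive")
    return [math.log(curr / prev) for prev, curr in zip(closes, closes[1:])]


closes = [100.0, 101.0, 99.0, 99.0]  # hypothetical closing prices
returns = log_returns(closes)
# Log returns add up over time: the total equals ln(last price / first price)
total = sum(returns)
print(returns, total)
```

This additivity over time is one practical reason for working with logarithmic rather than arithmetic returns.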

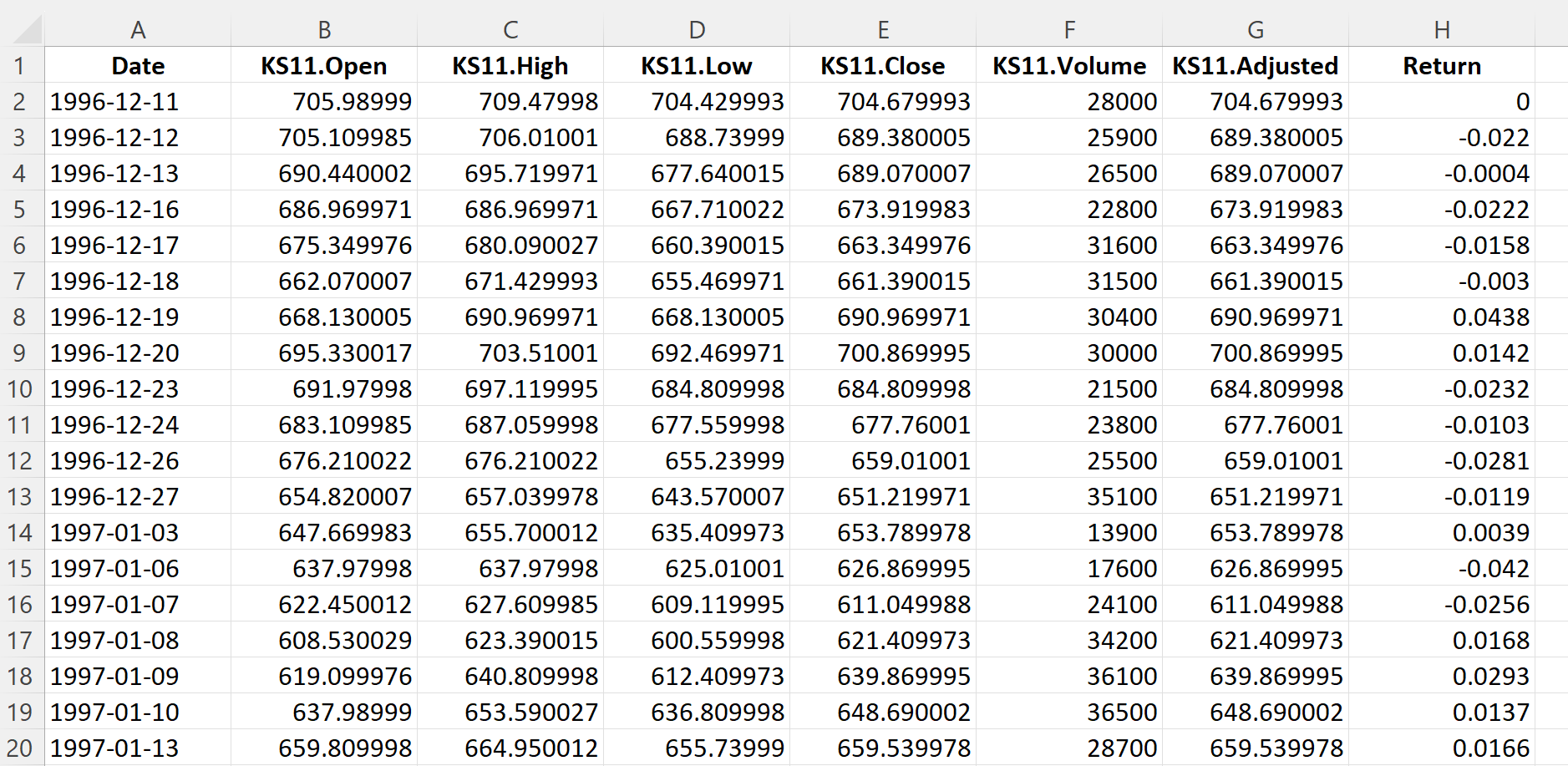

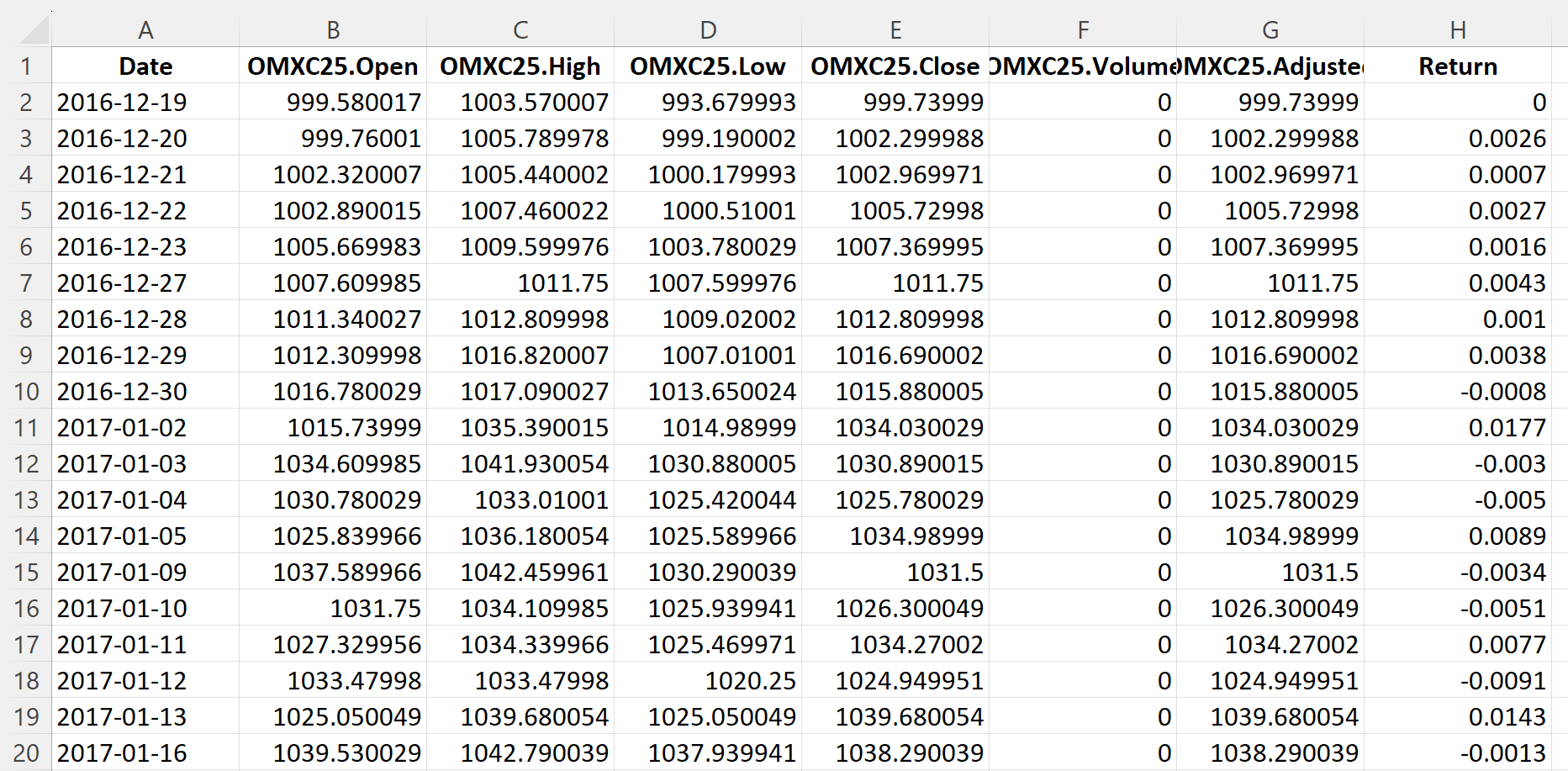

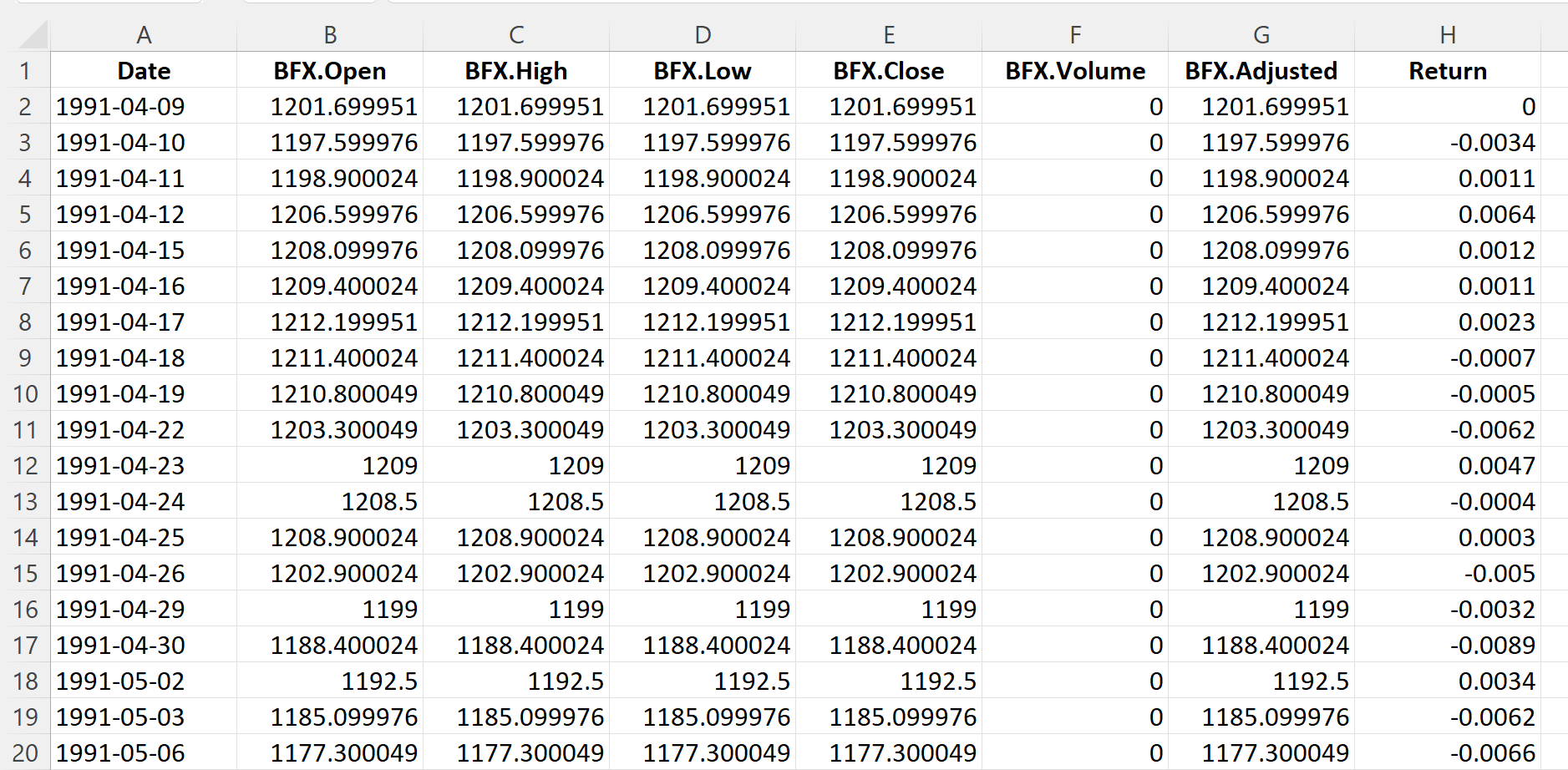

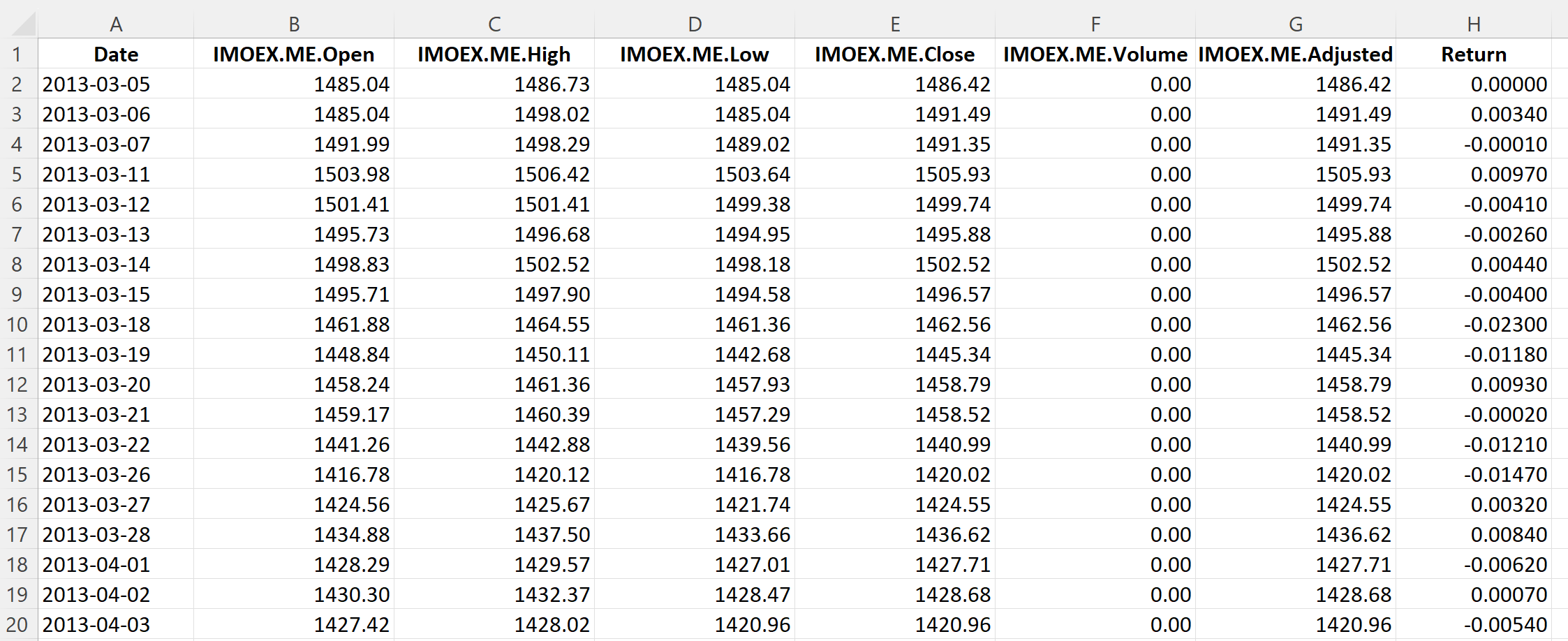

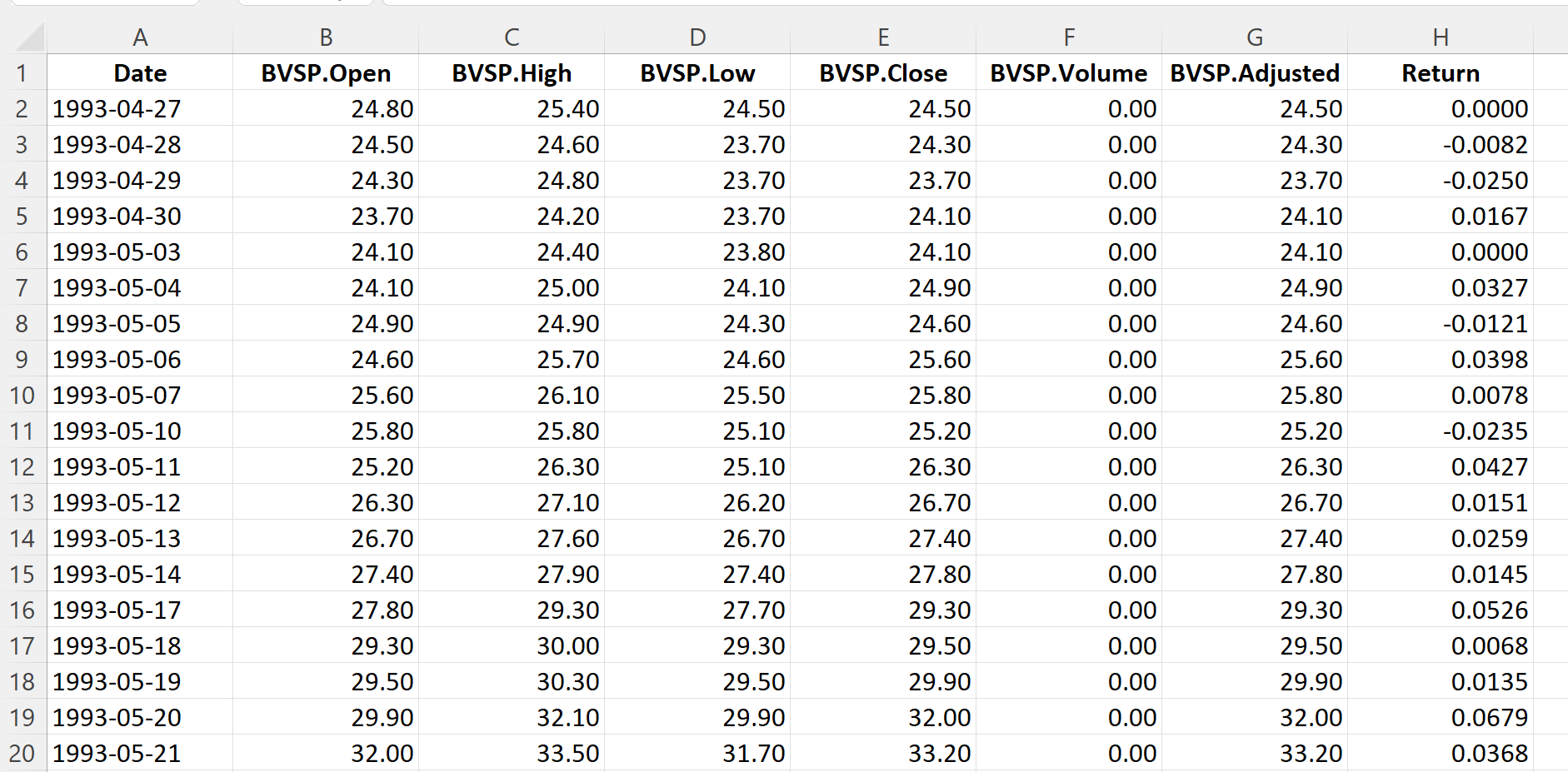

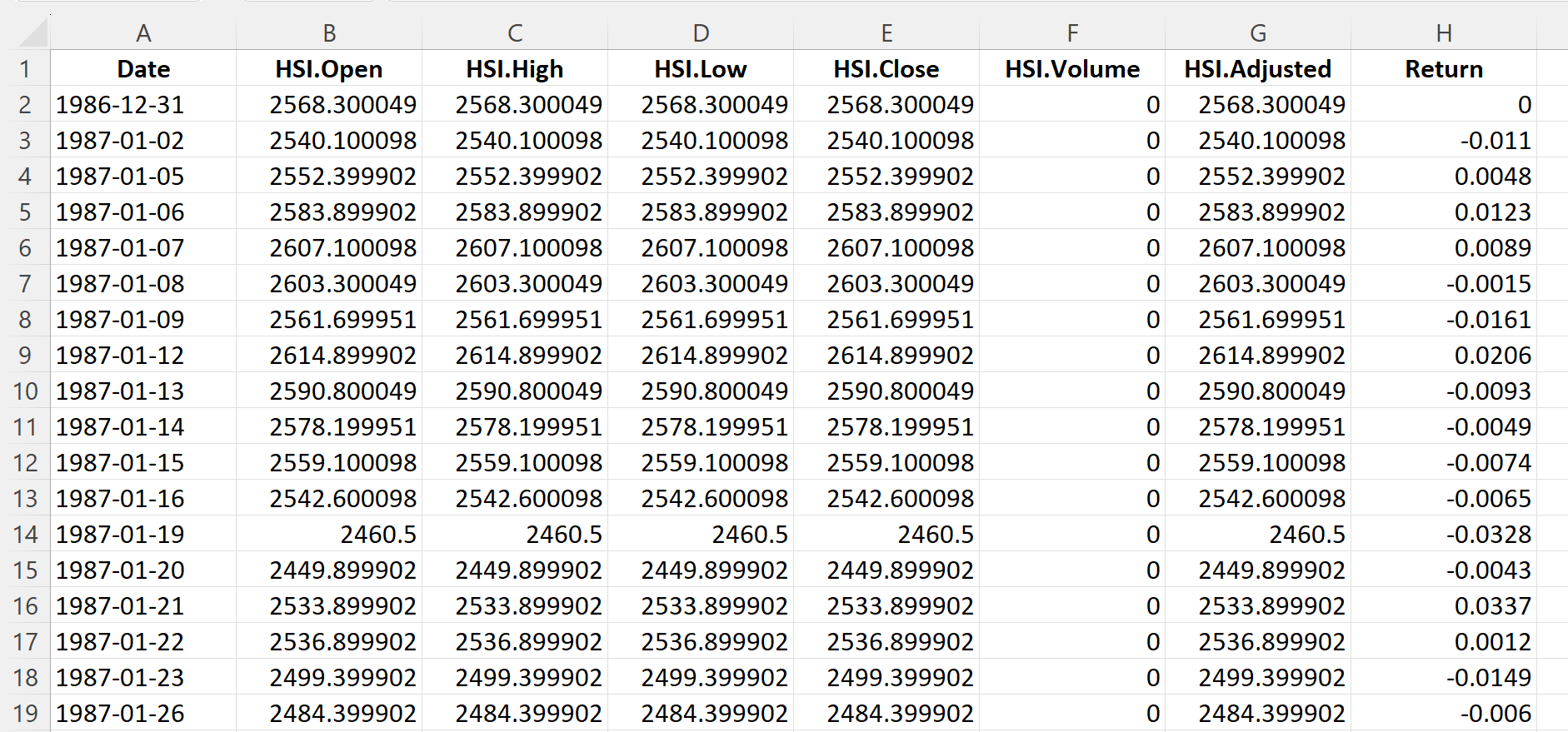

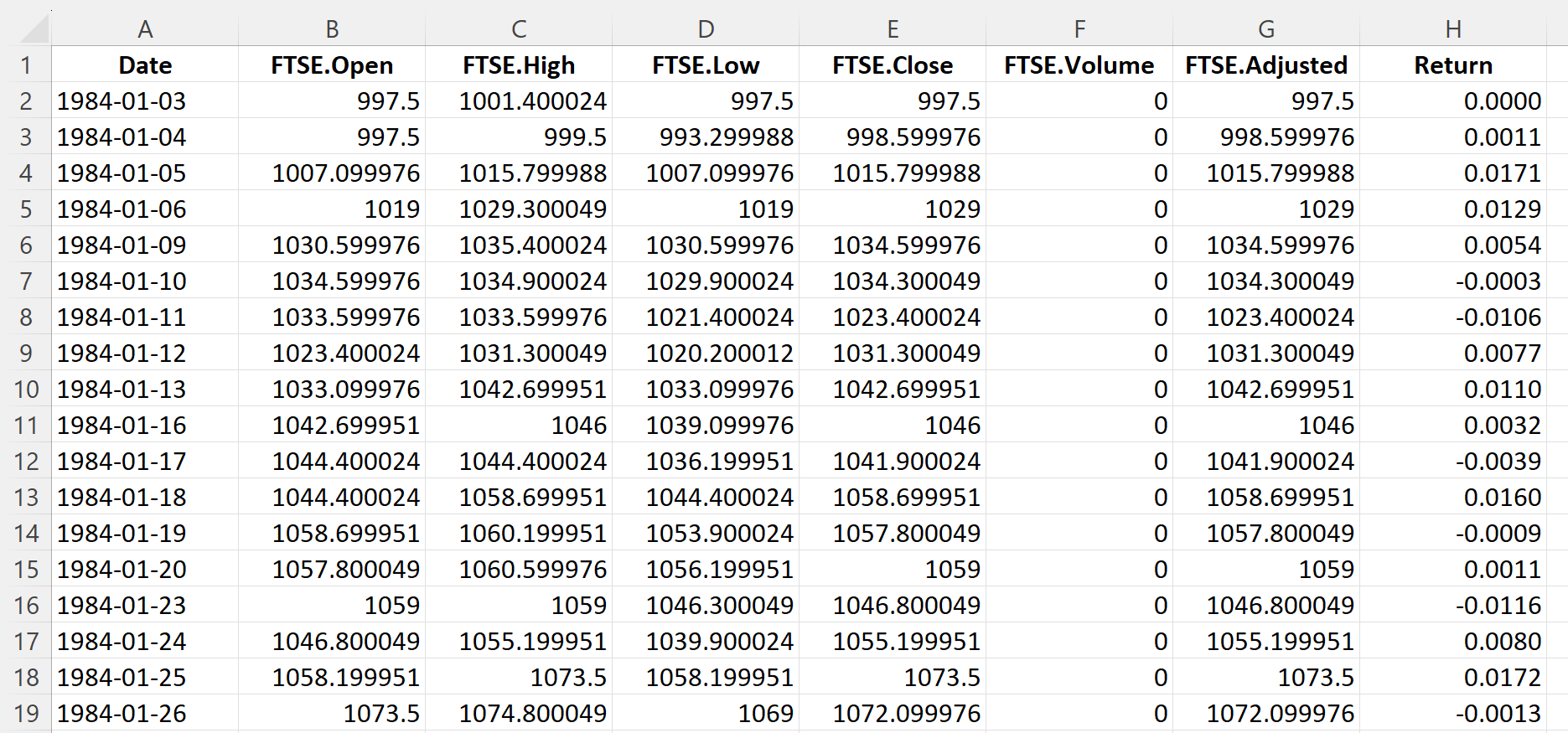

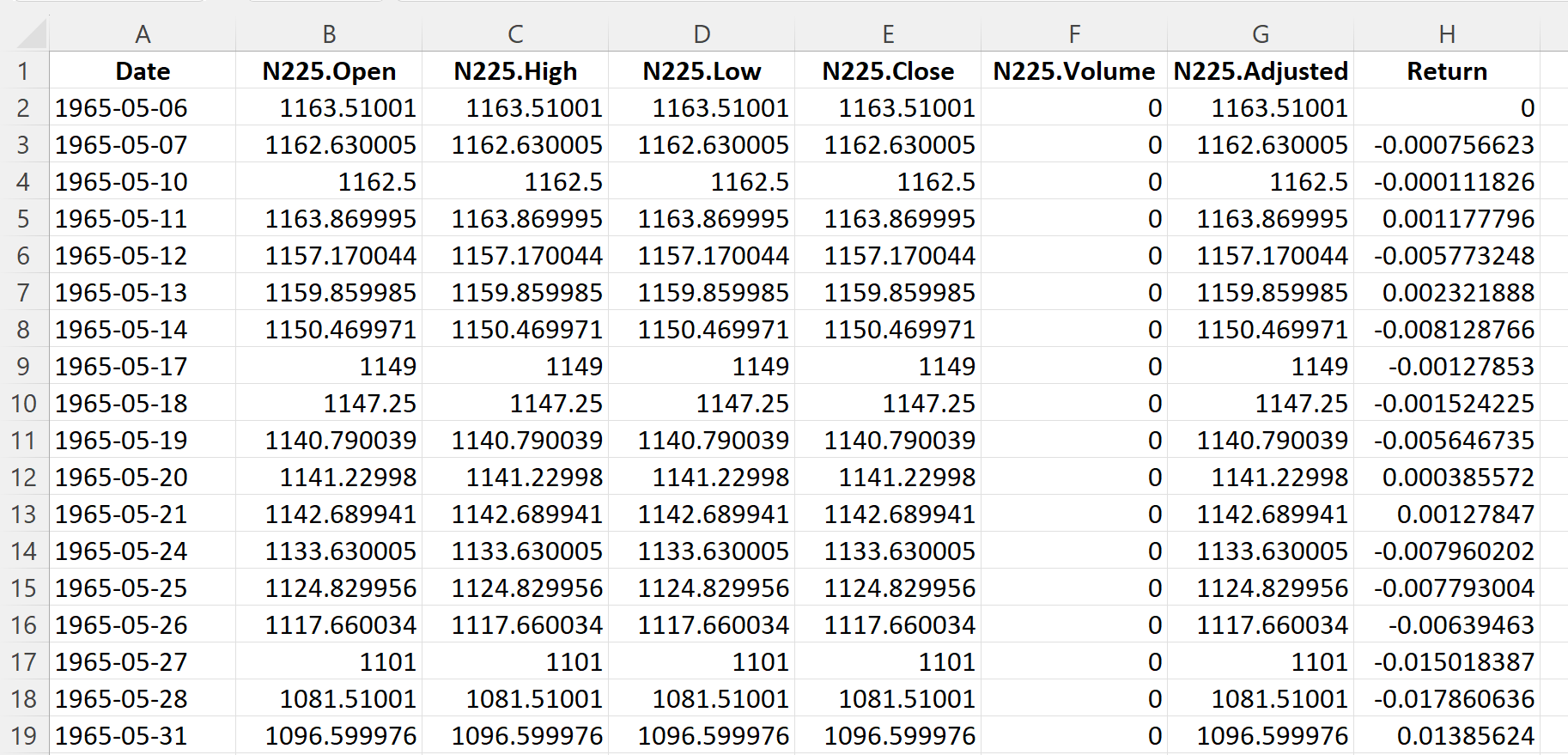

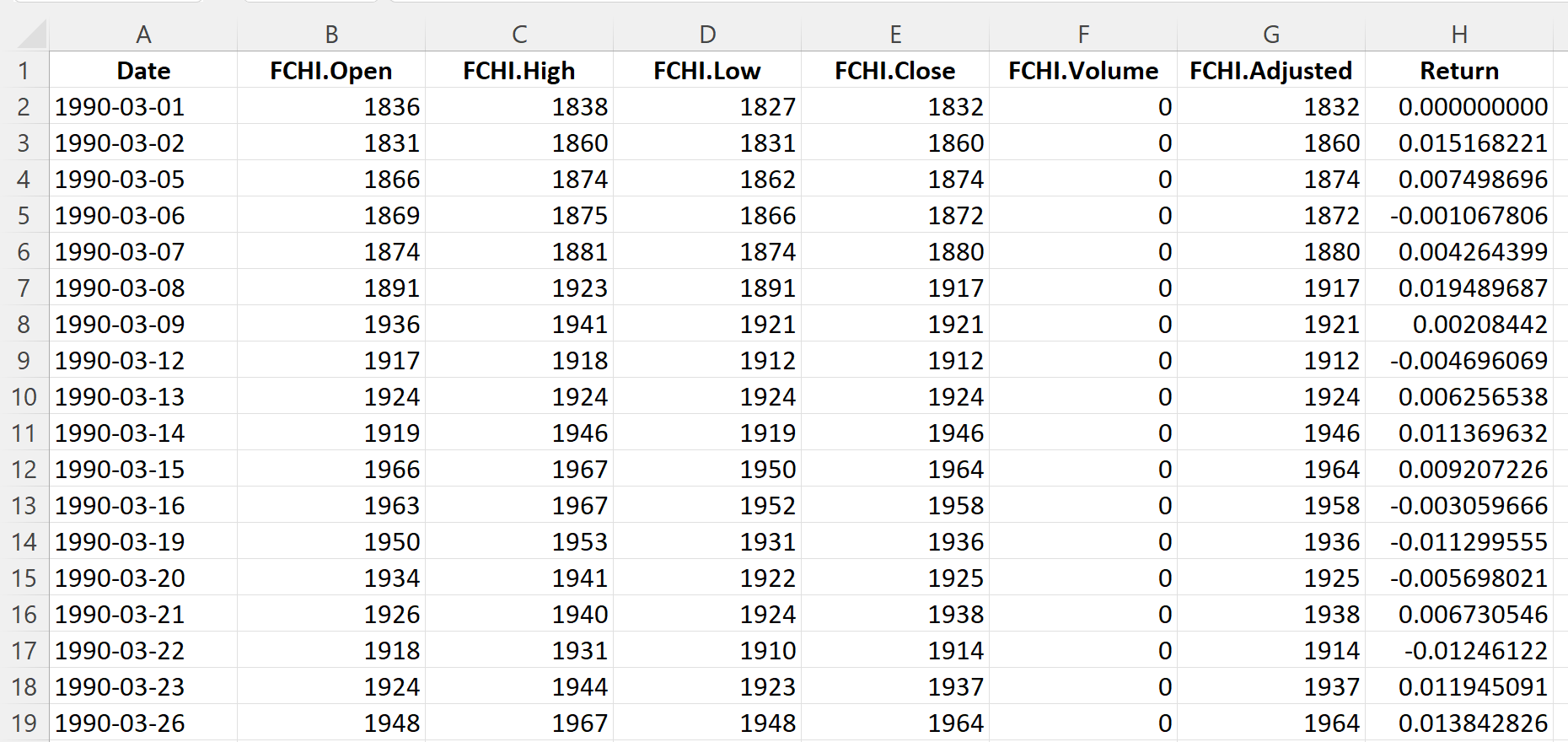

Table 3 below represents the top of the data file for the IBEX 35 index downloaded from the Yahoo! Finance website with the R program.

Table 3. Top of the data file for the IBEX 35 index.

Source: computation by the author (data: Yahoo! Finance website).

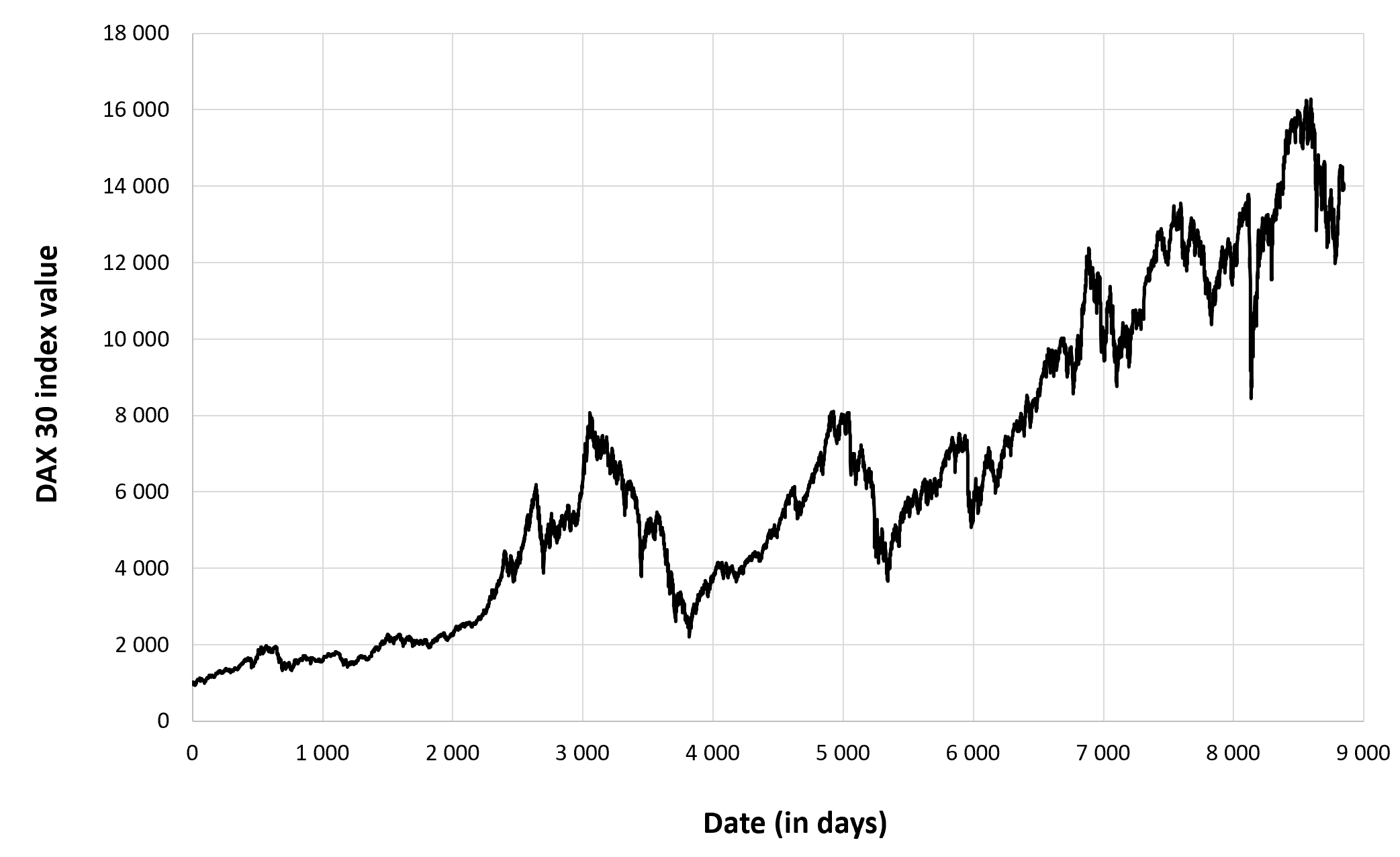

Evolution of the IBEX 35 index

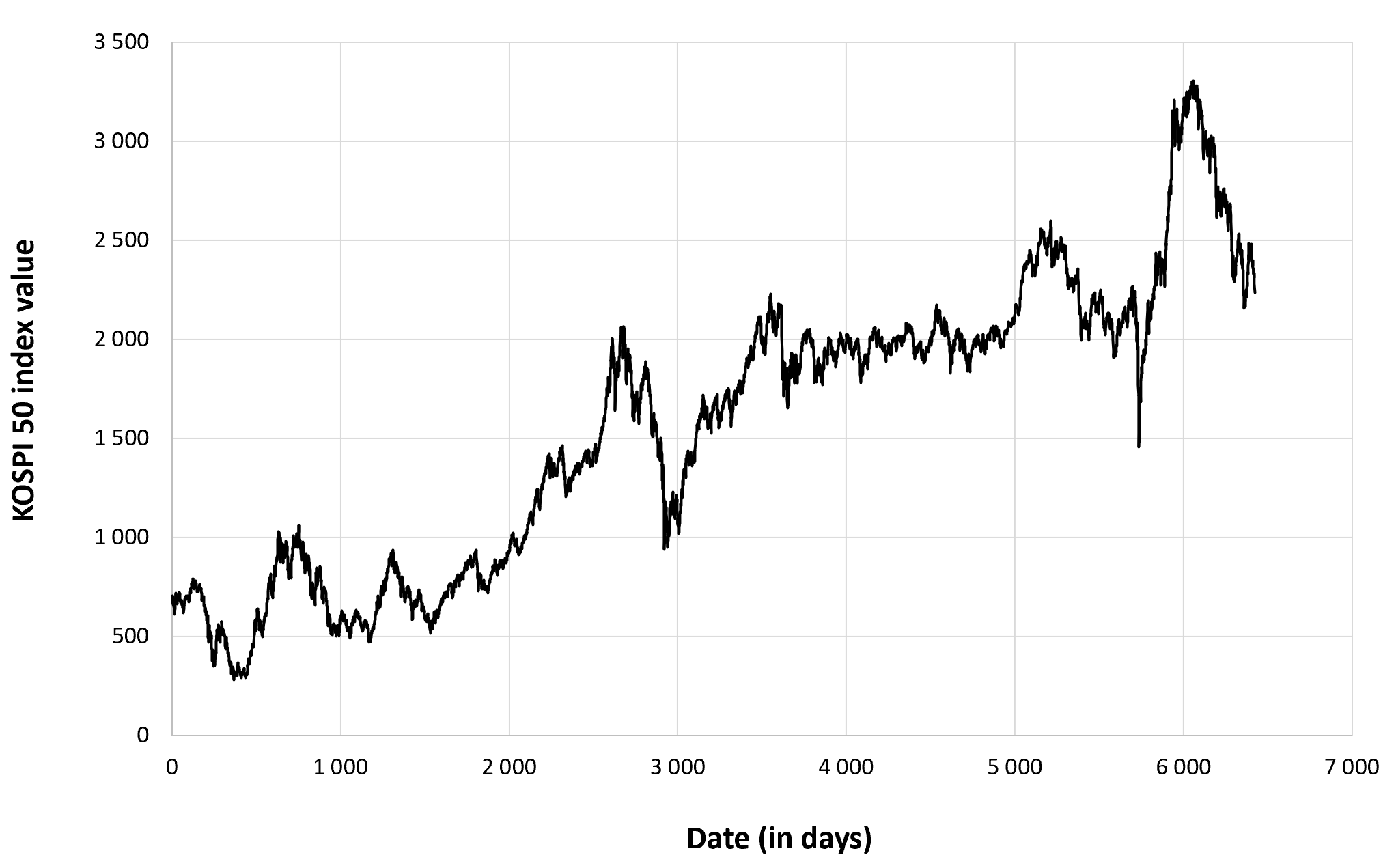

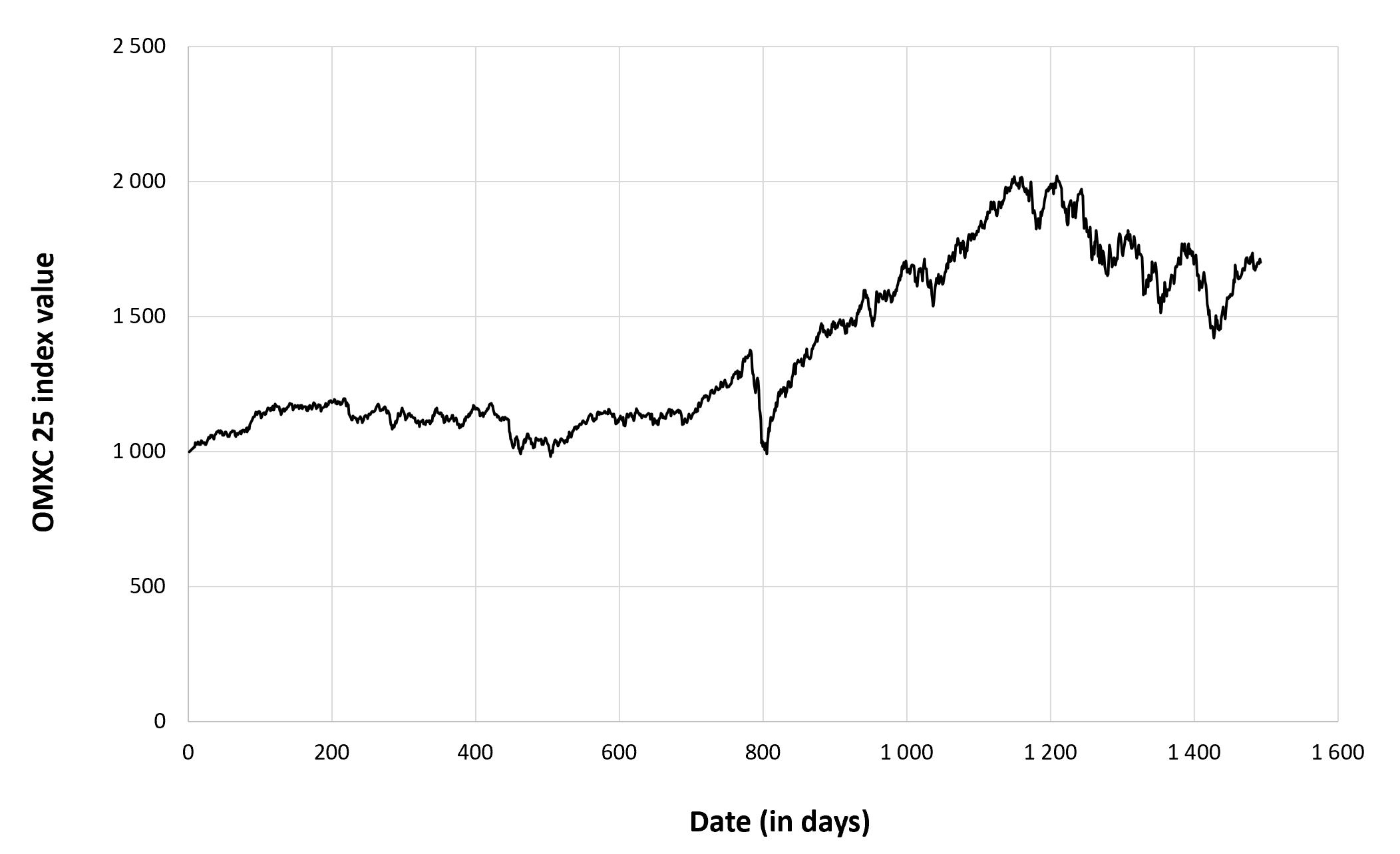

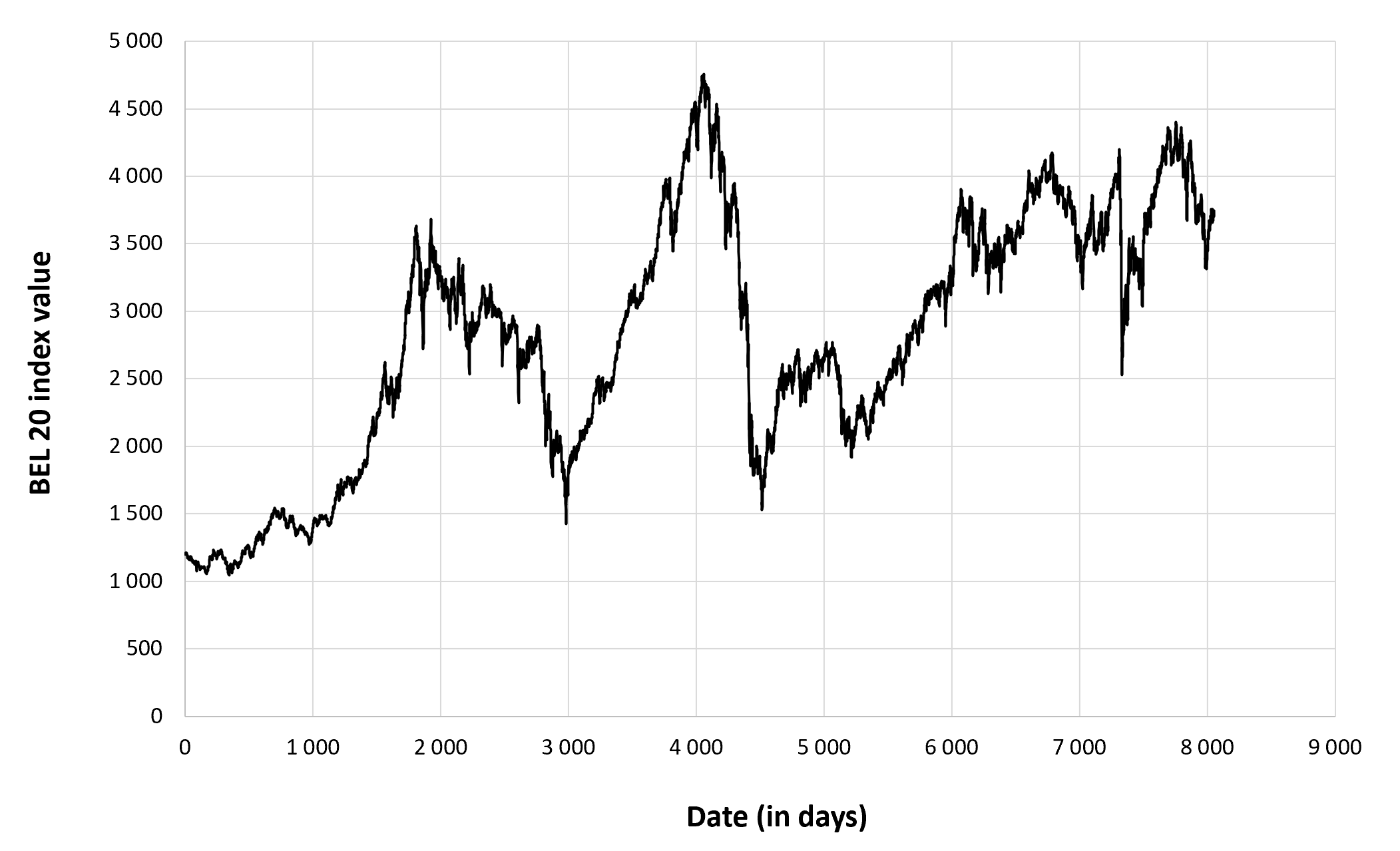

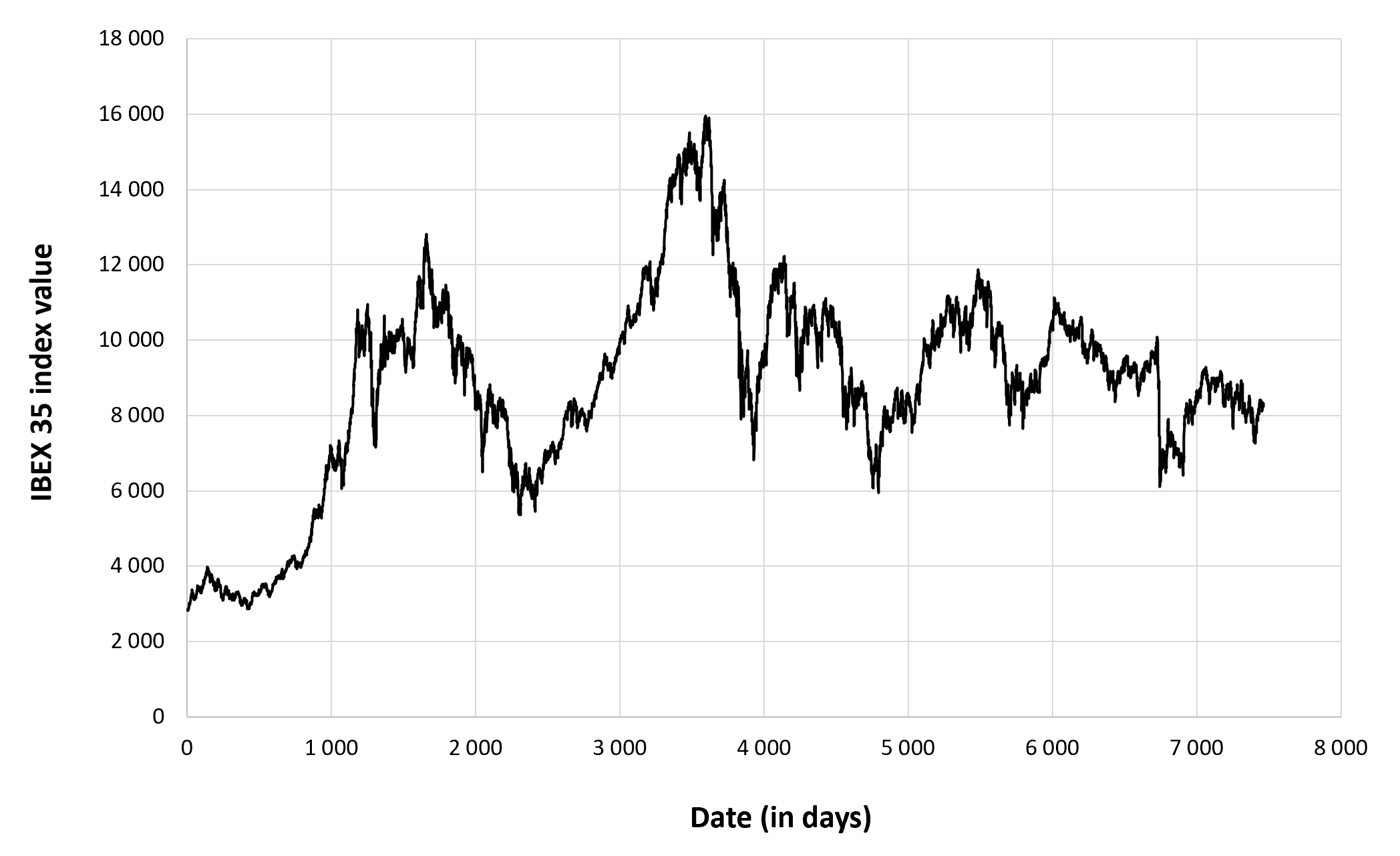

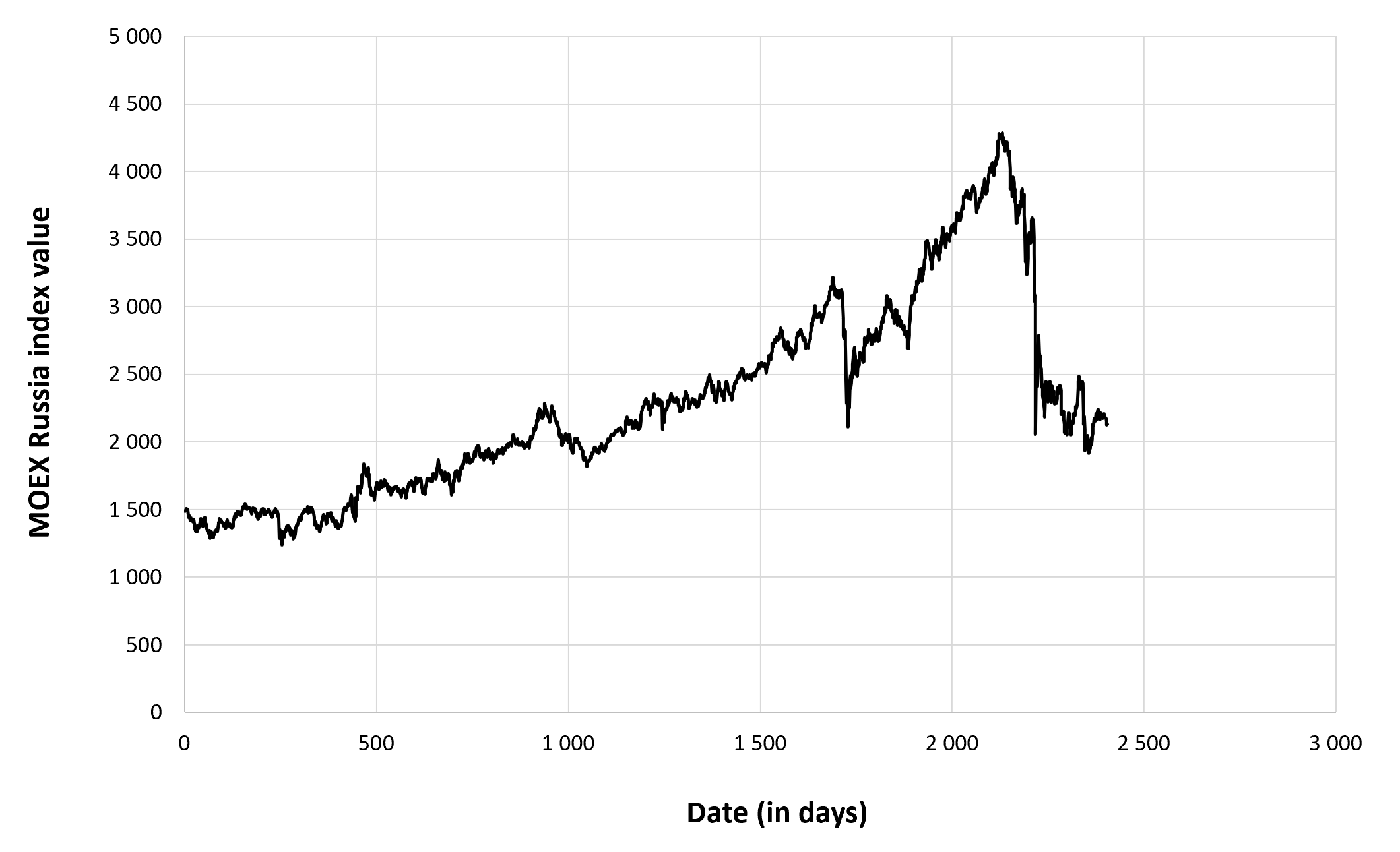

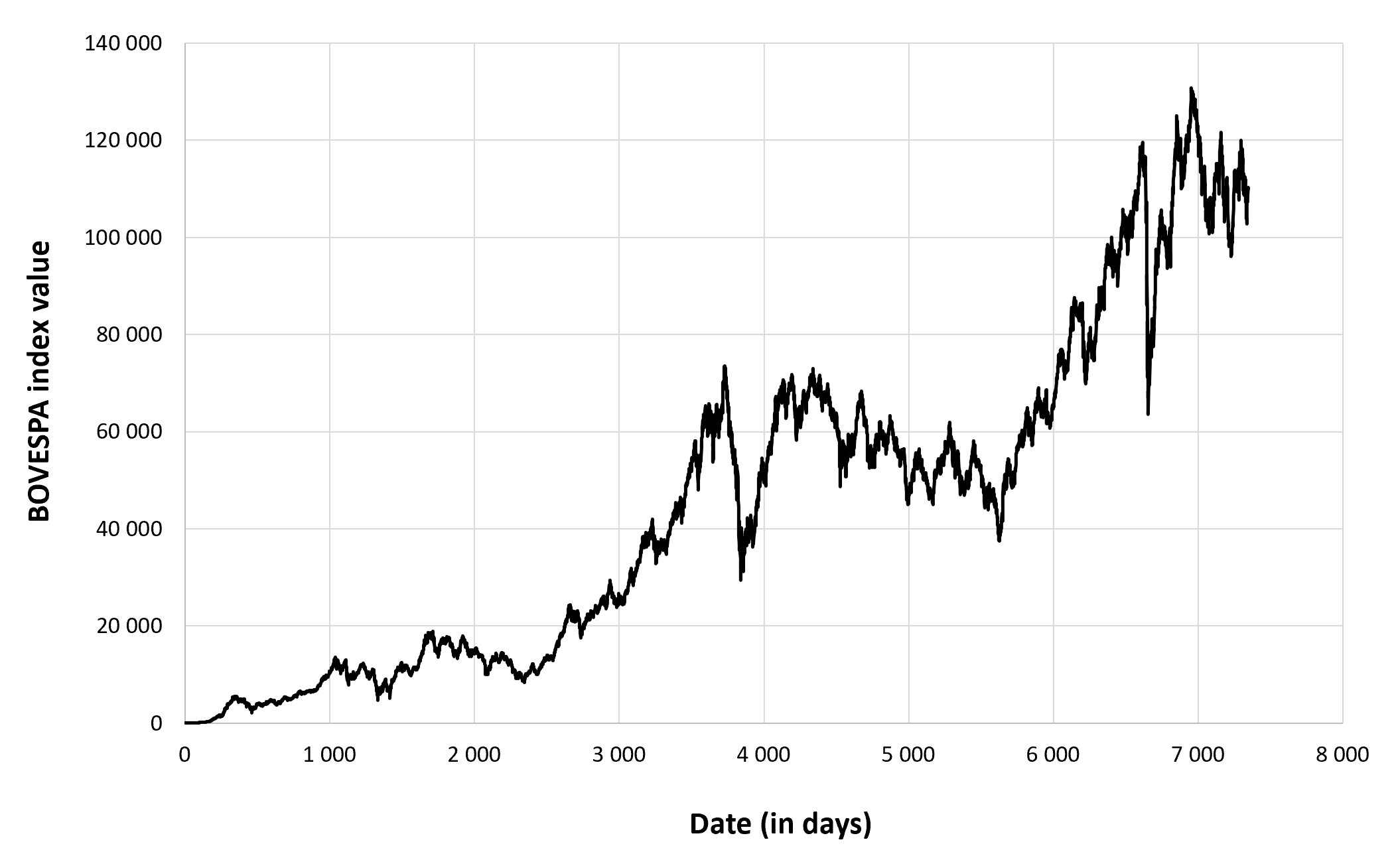

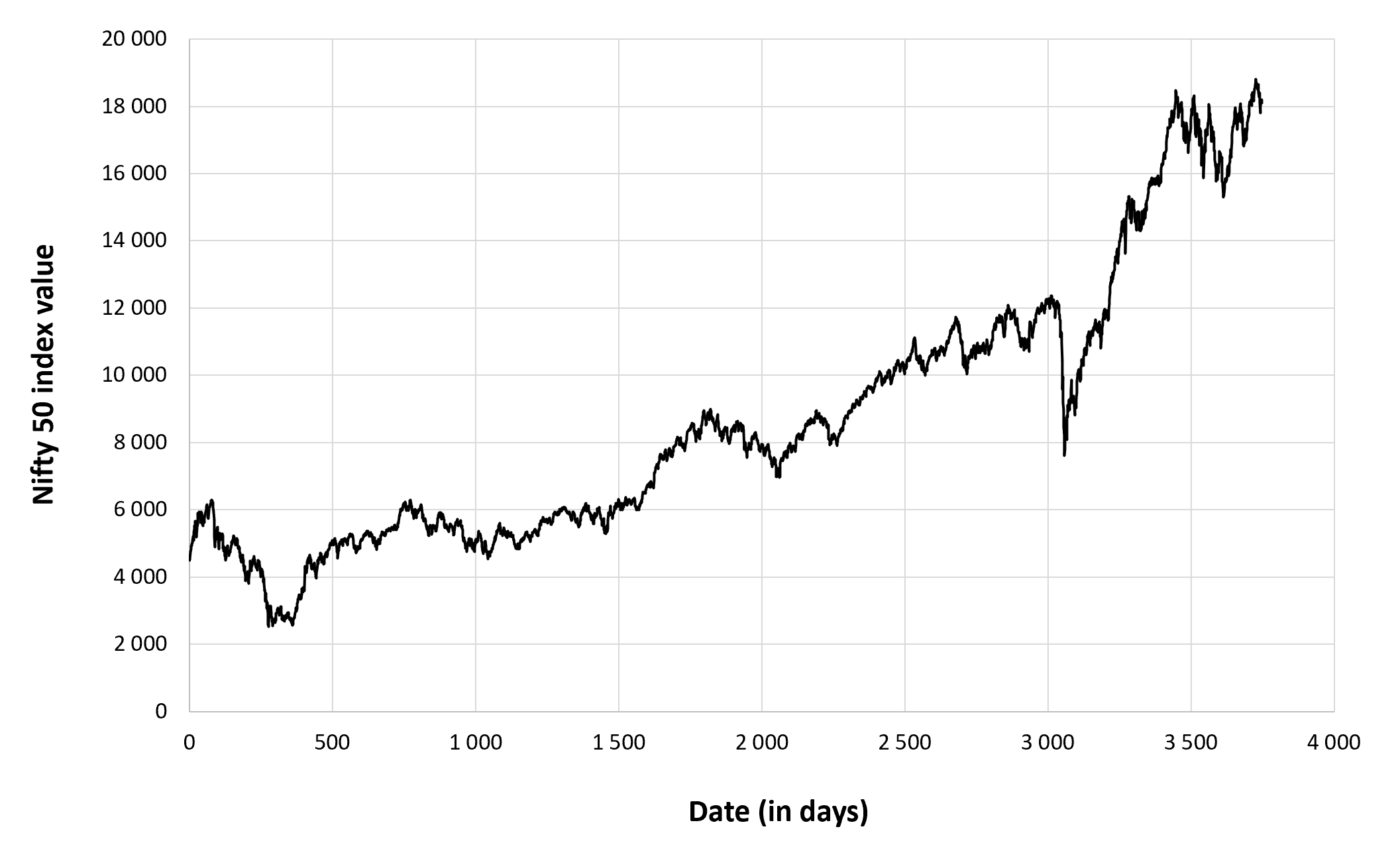

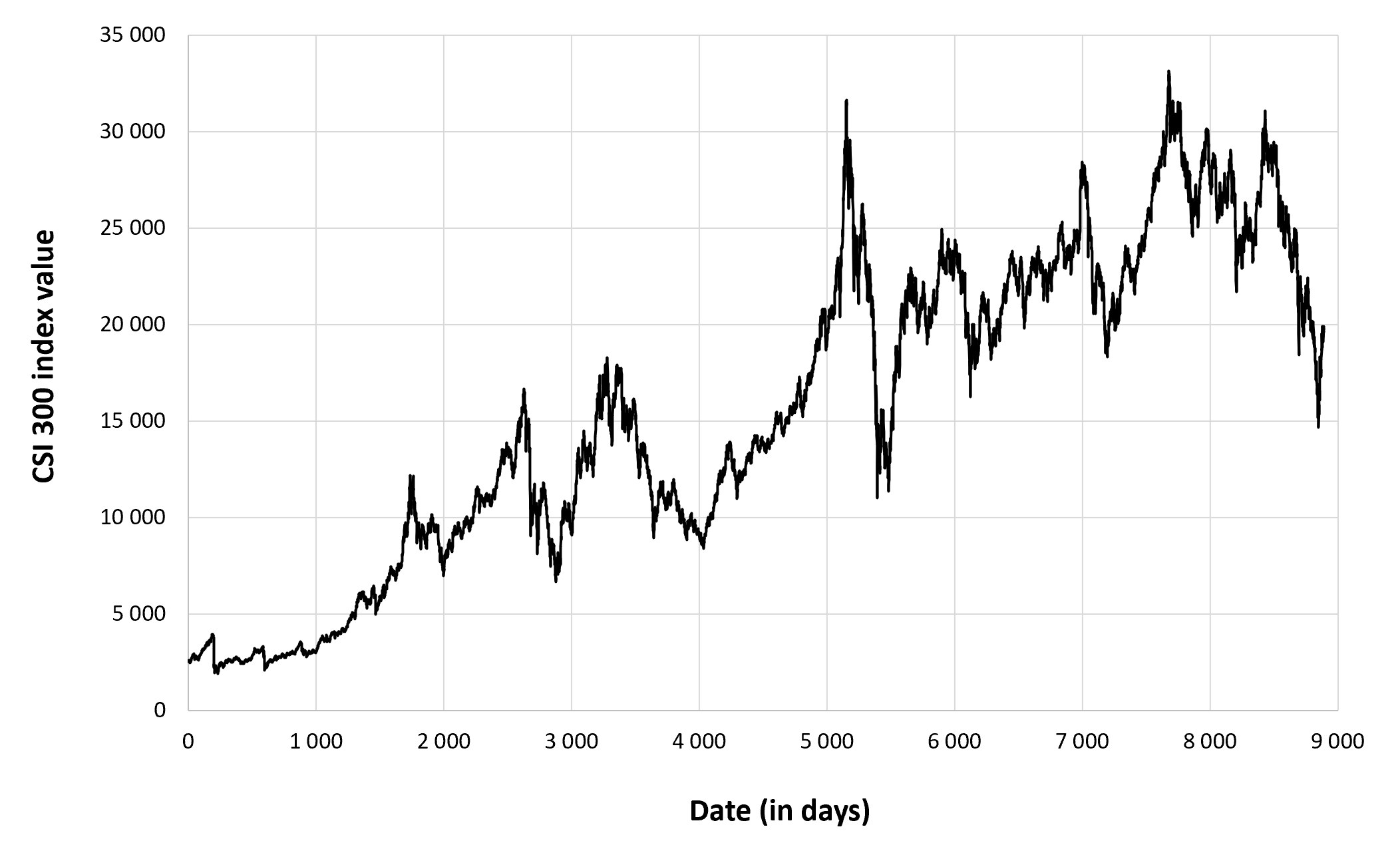

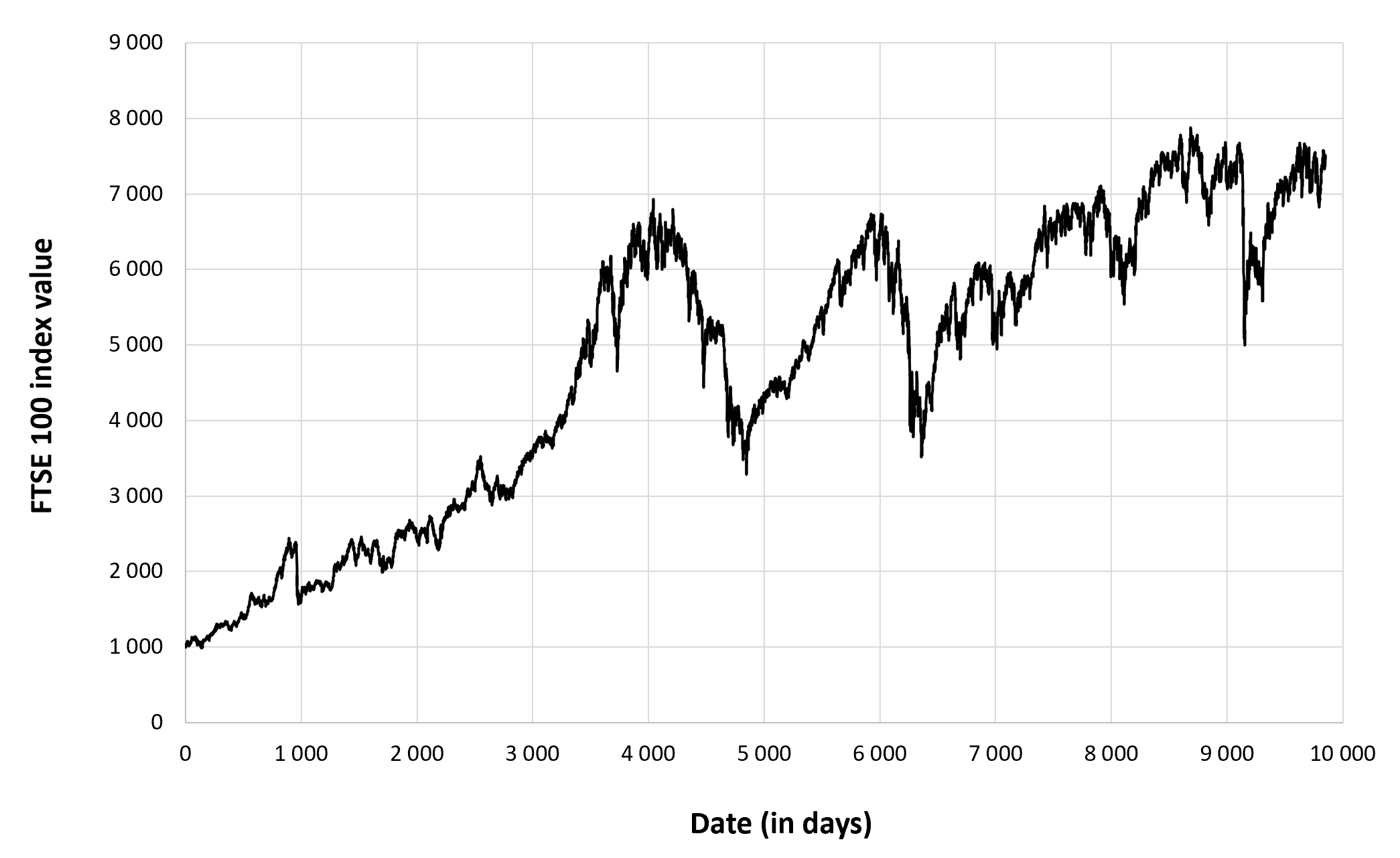

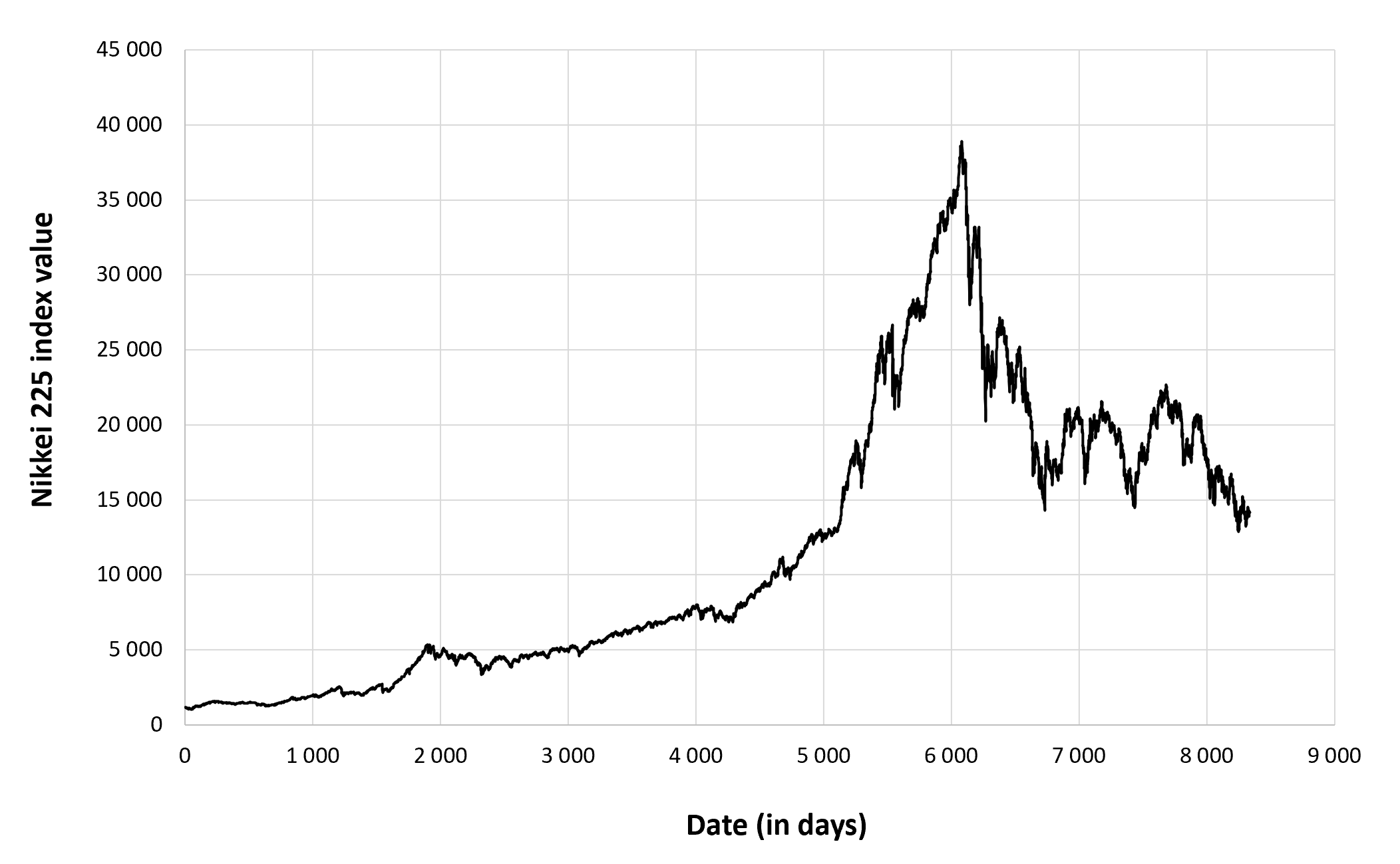

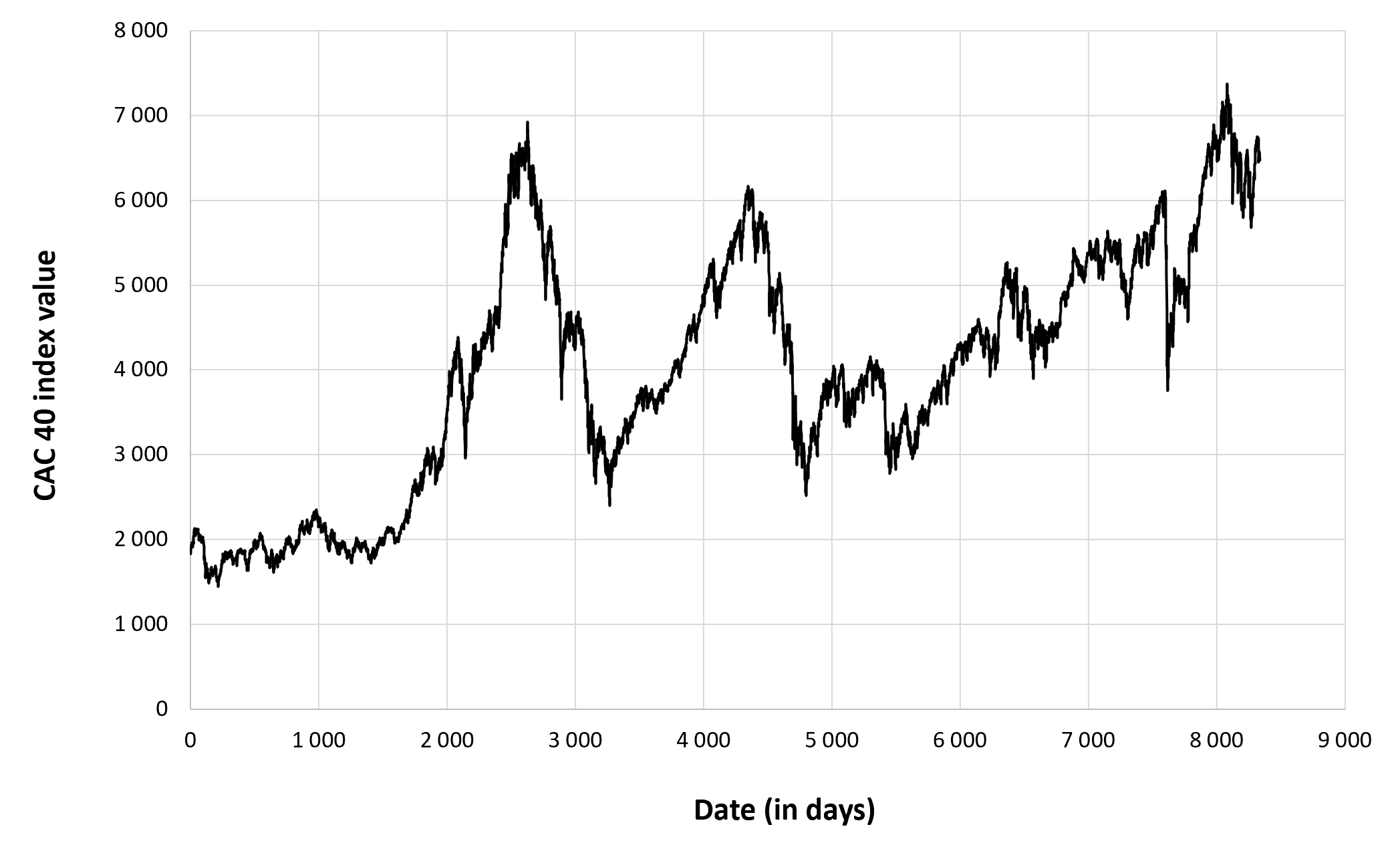

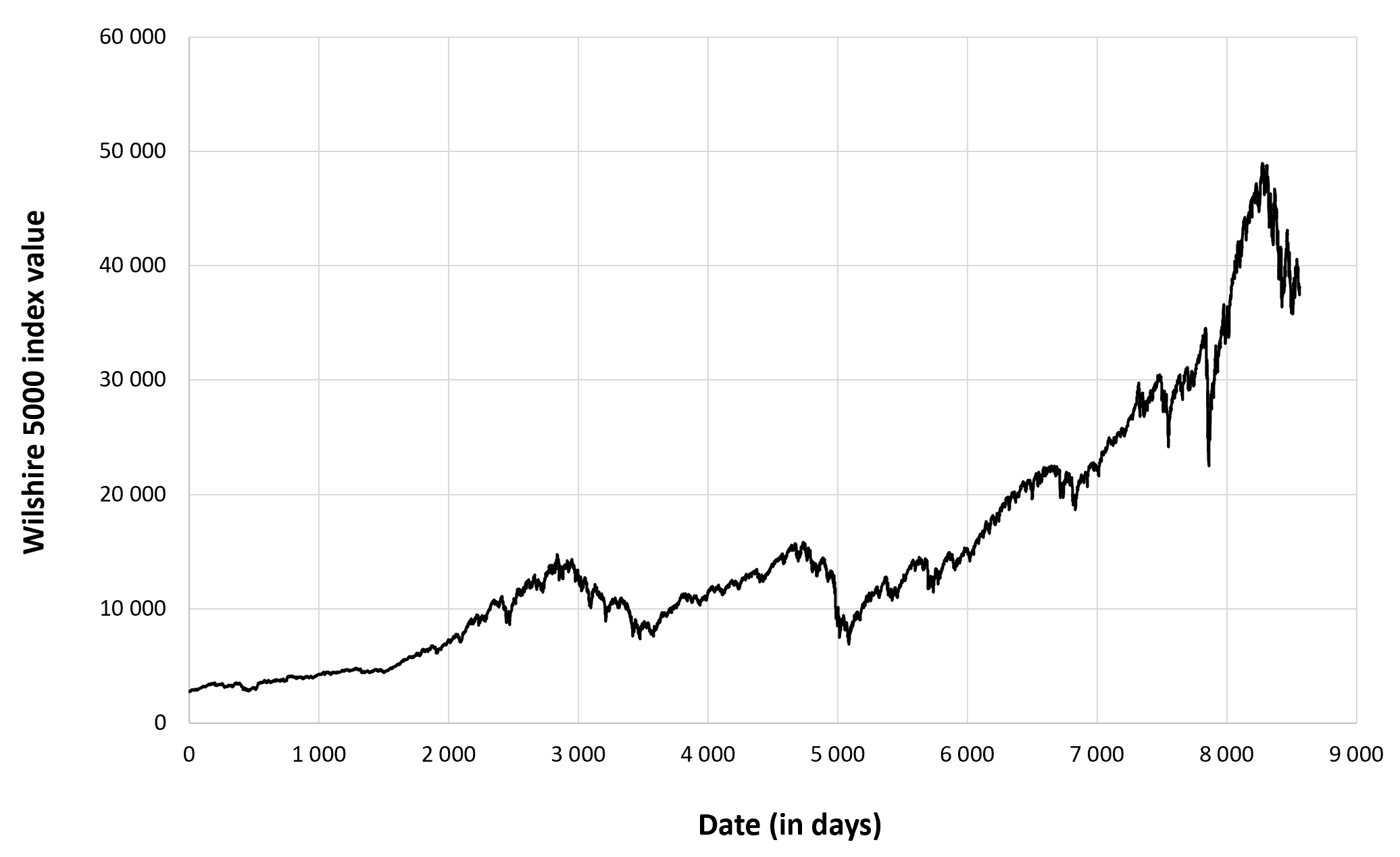

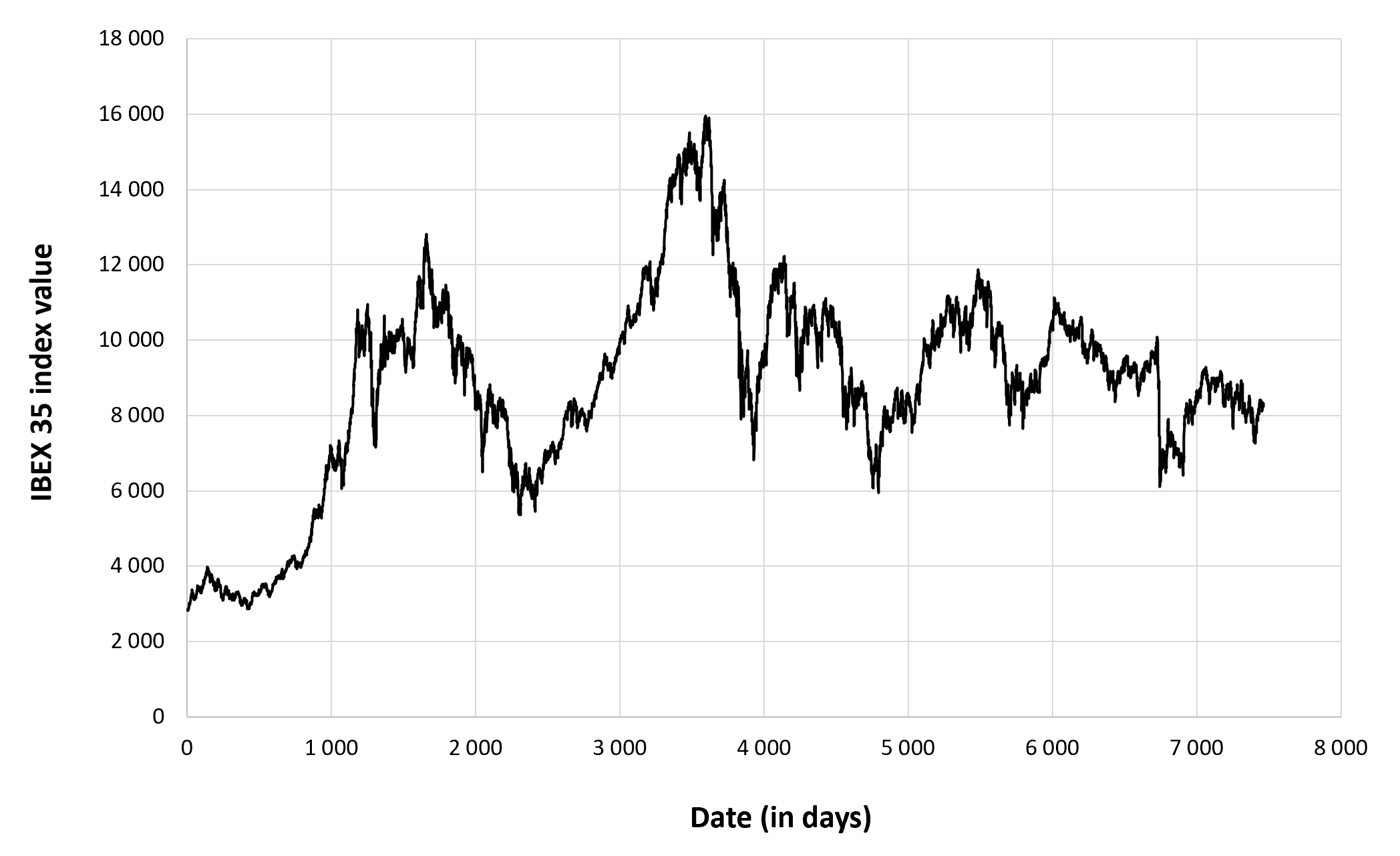

Figure 1 below gives the evolution of the IBEX 35 index from July 12, 1993 to December 30, 2022 on a daily basis.

Figure 1. Evolution of the IBEX 35 index.

Source: computation by the author (data: Yahoo! Finance website).

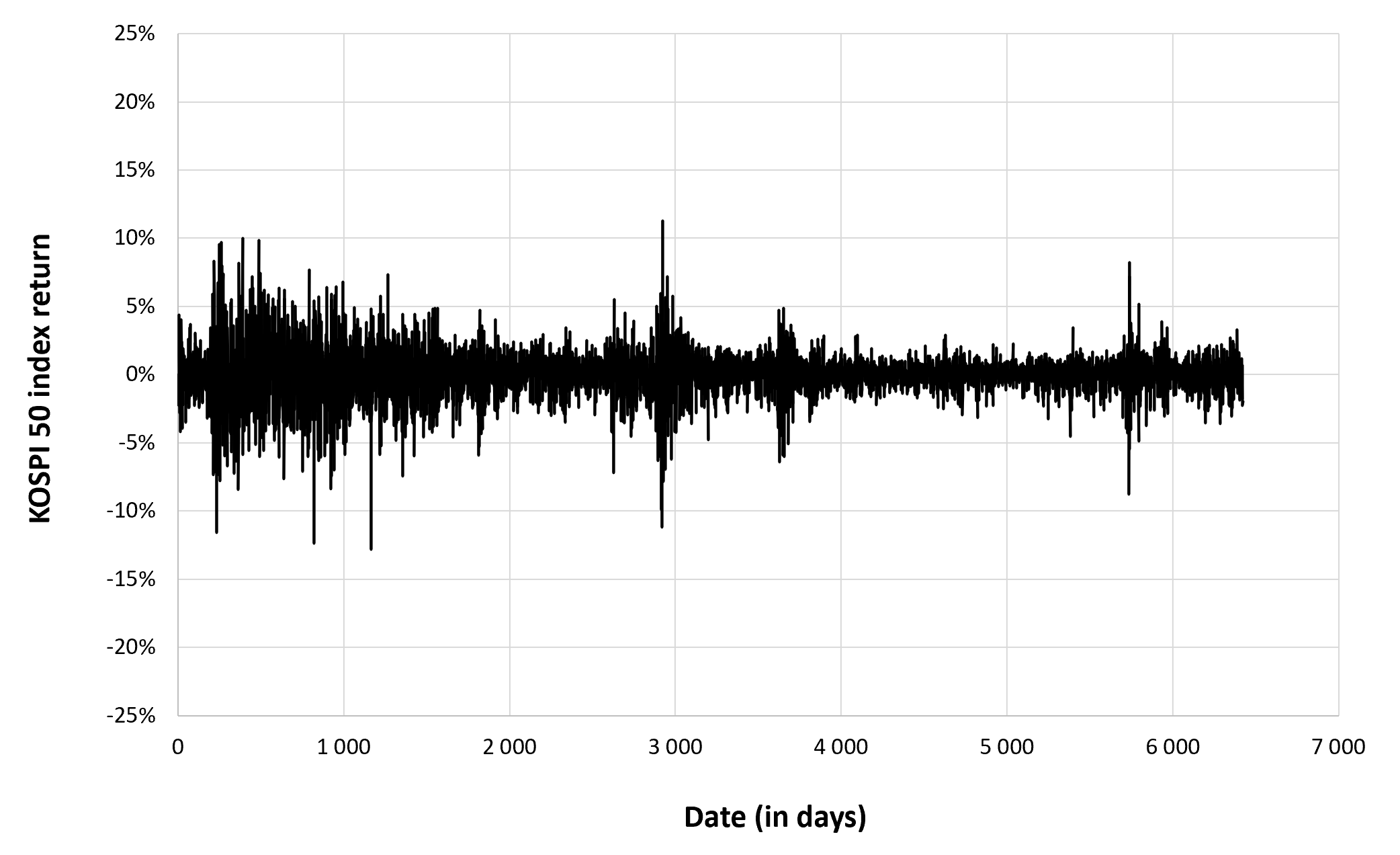

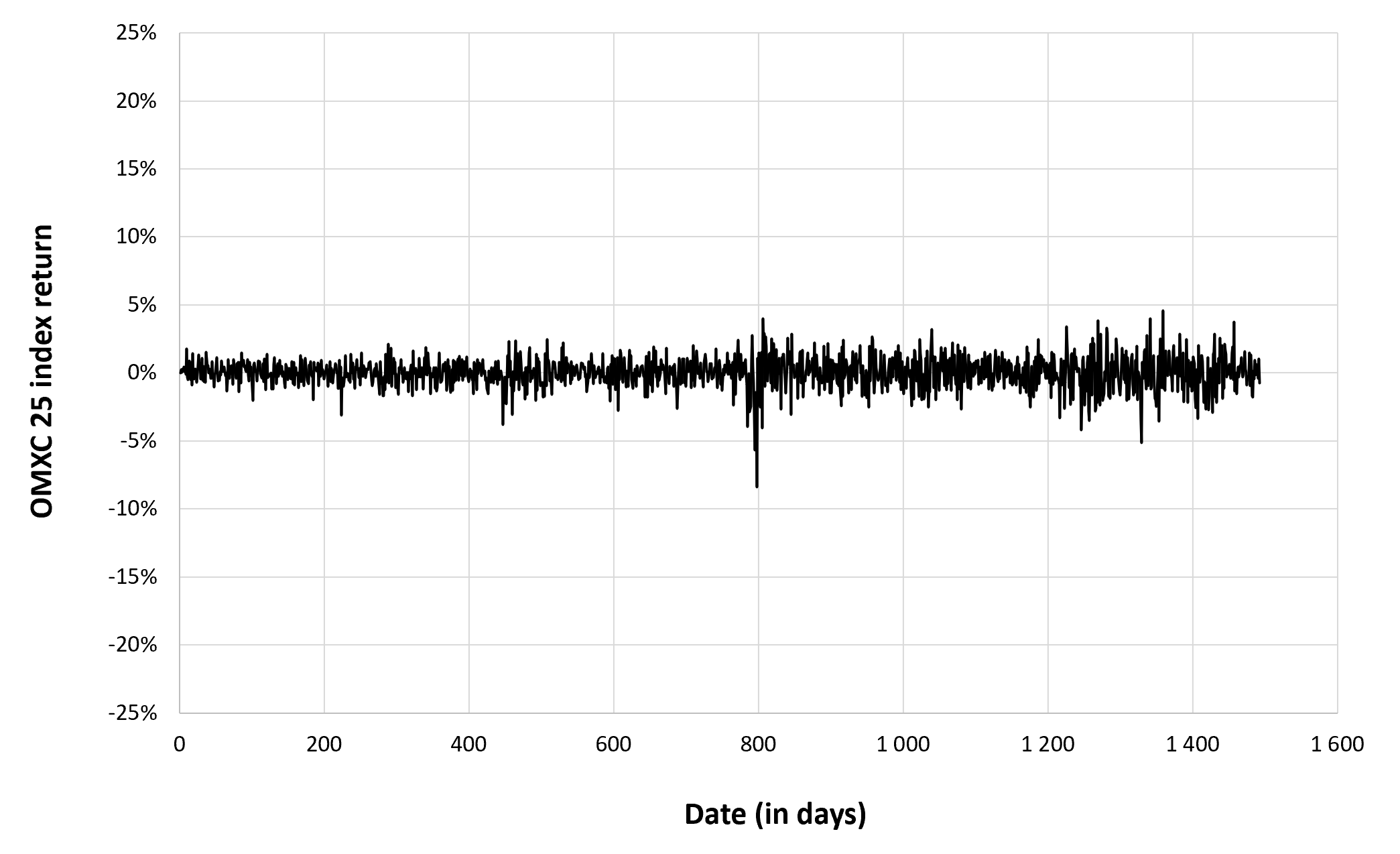

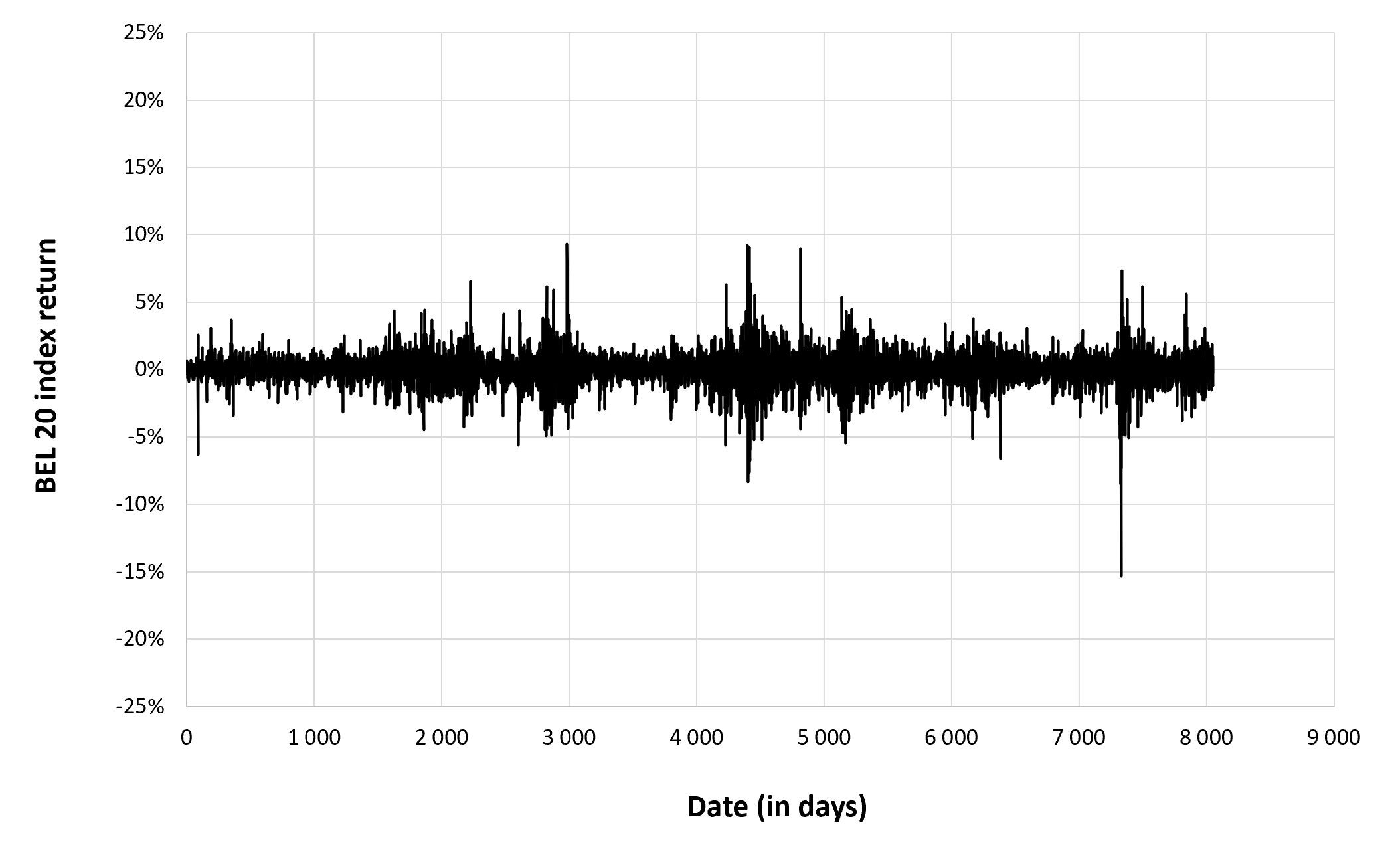

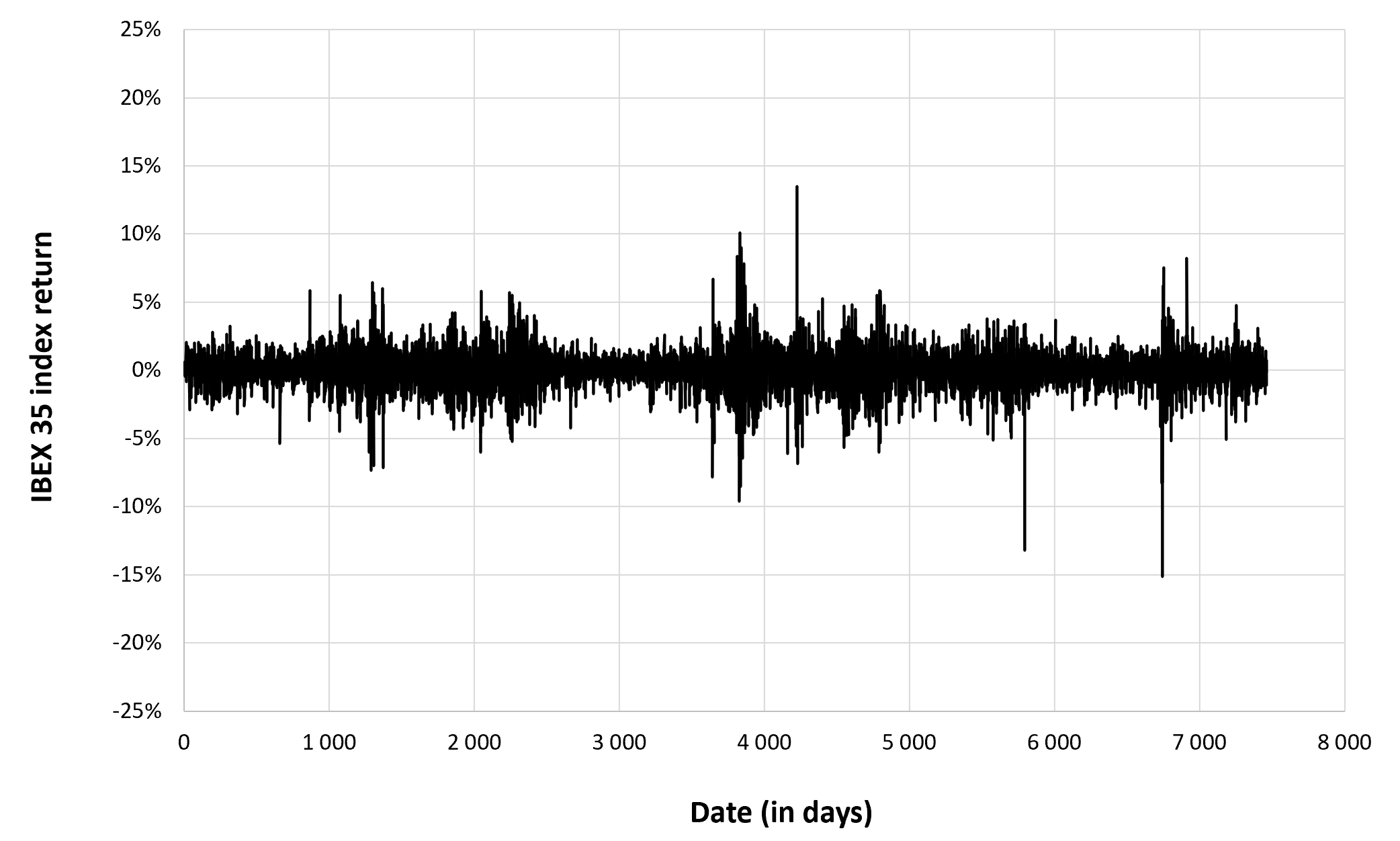

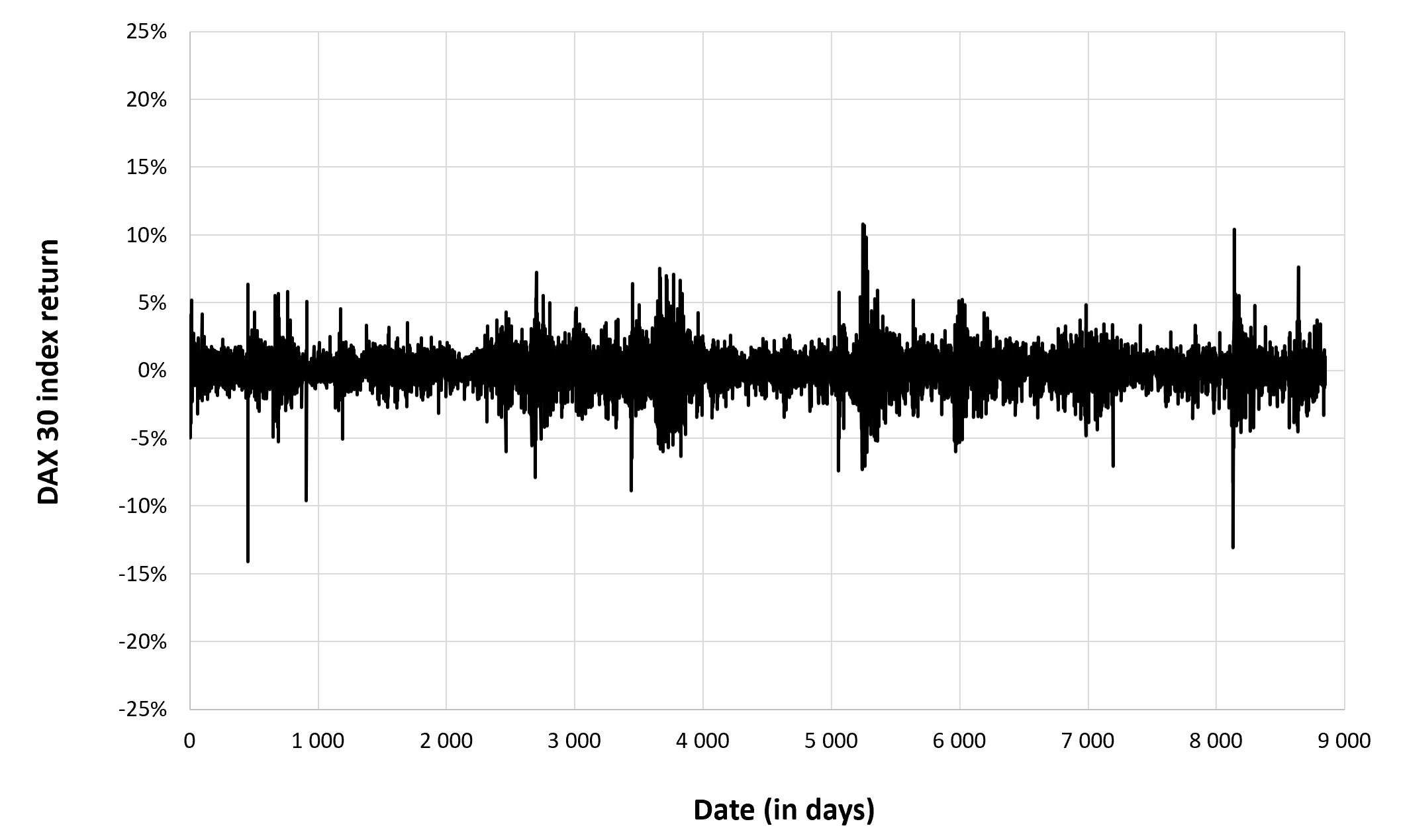

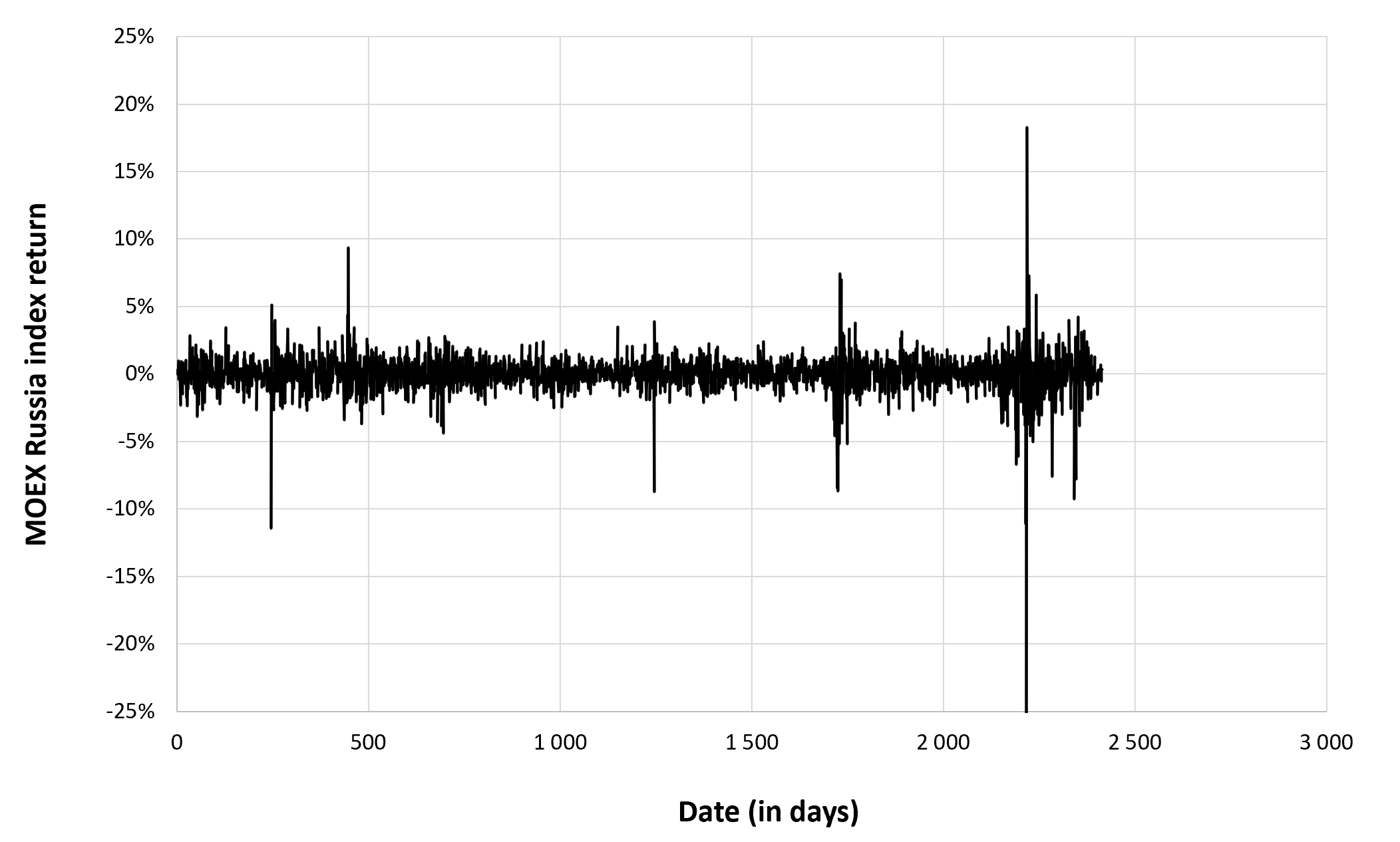

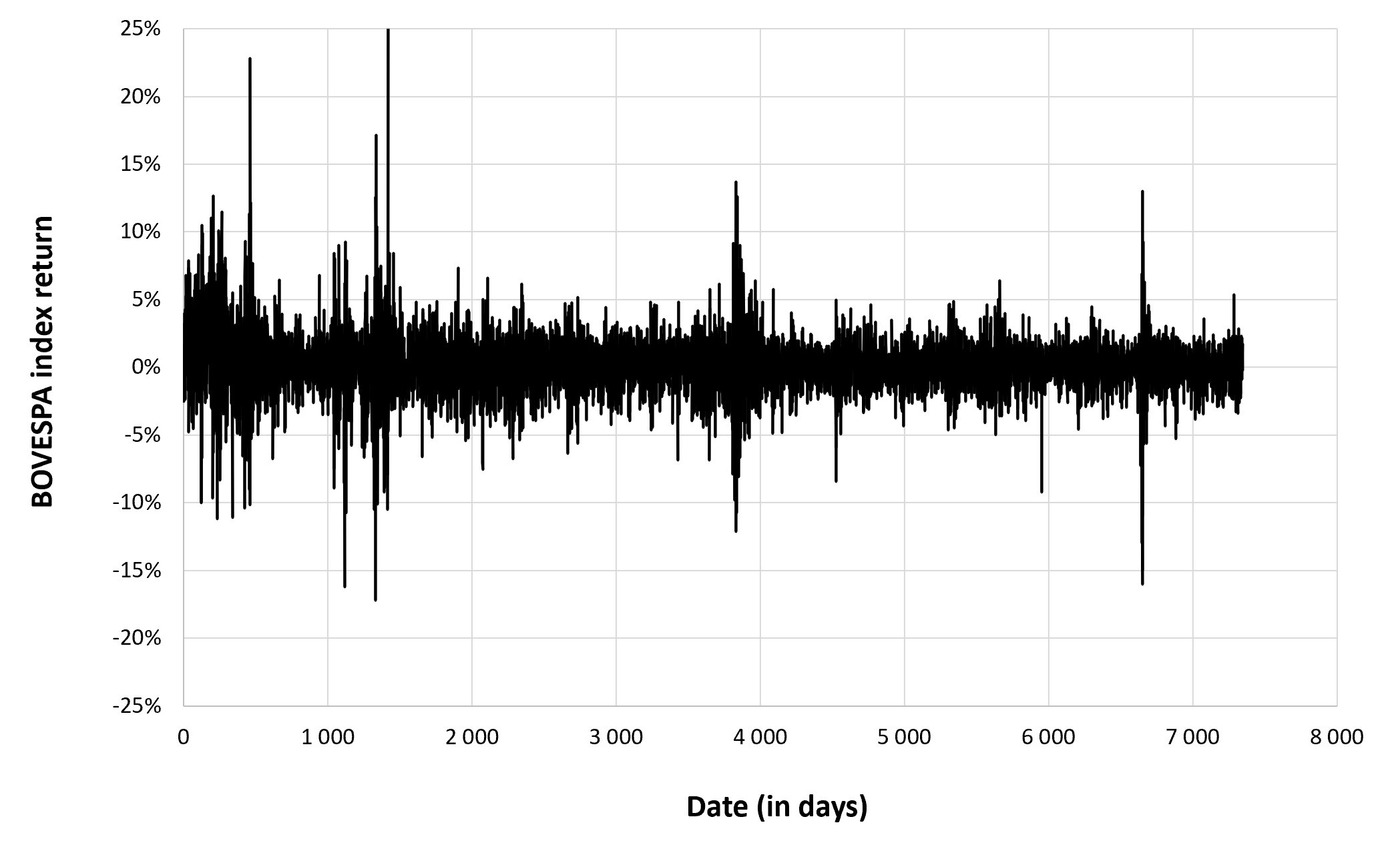

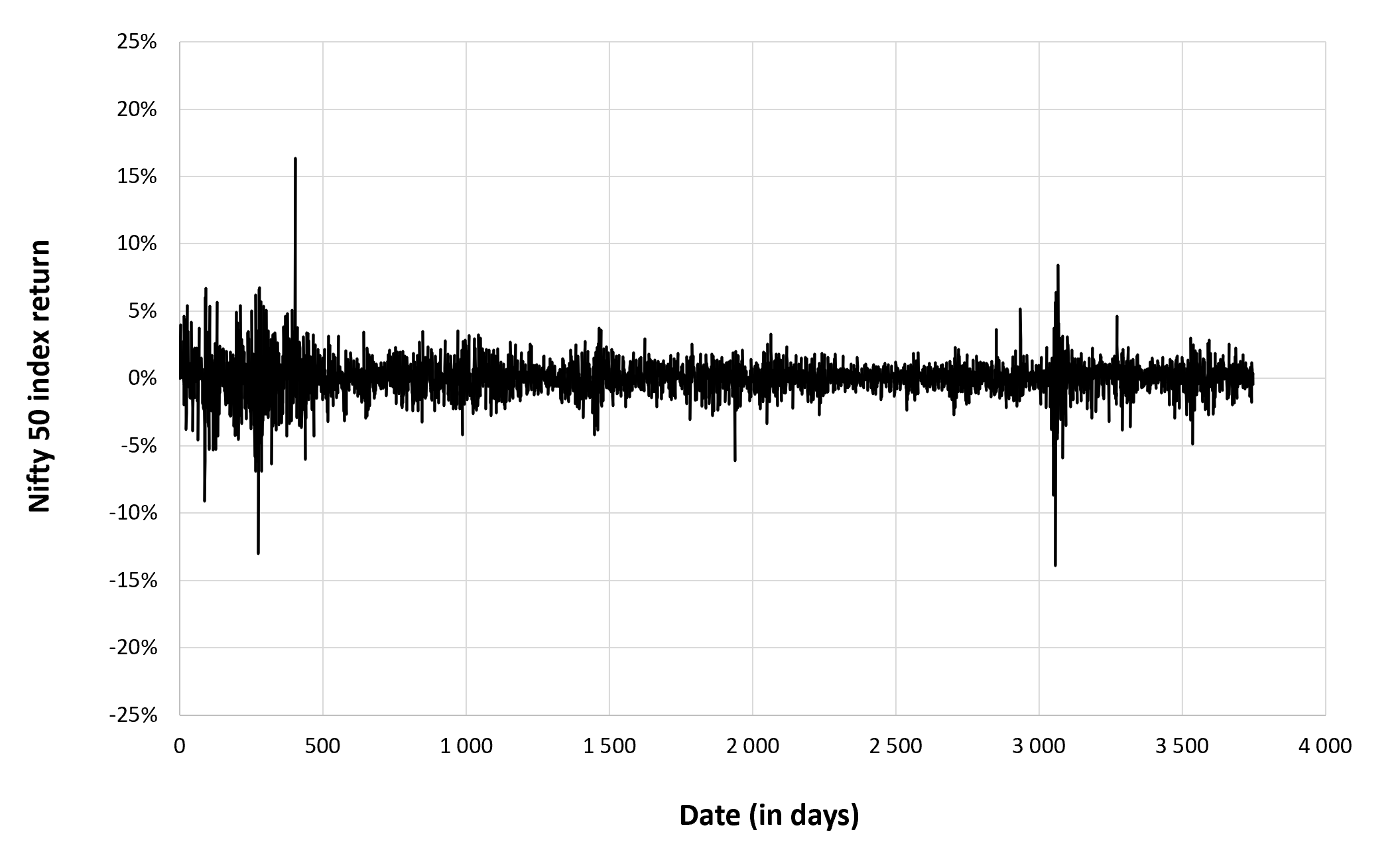

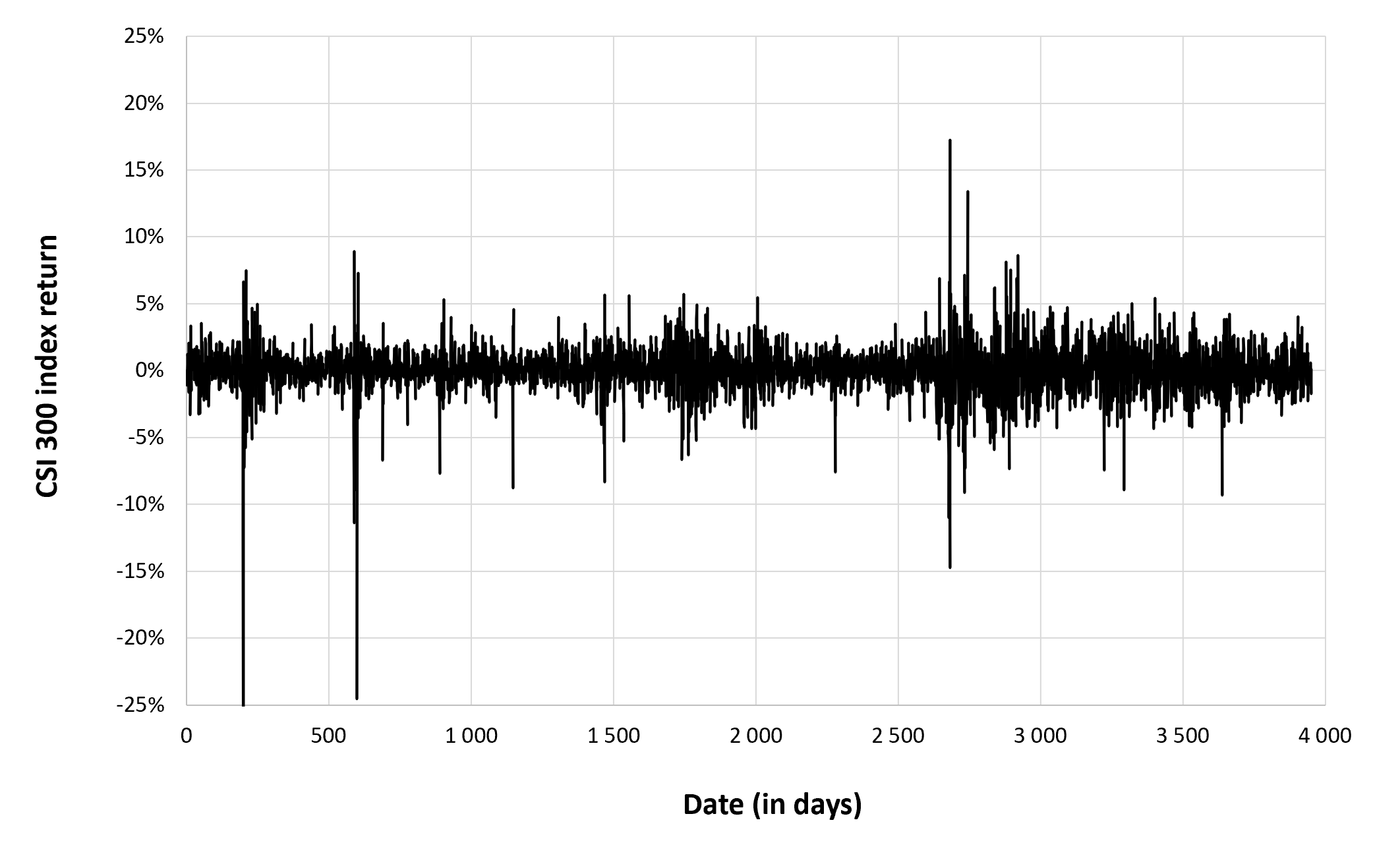

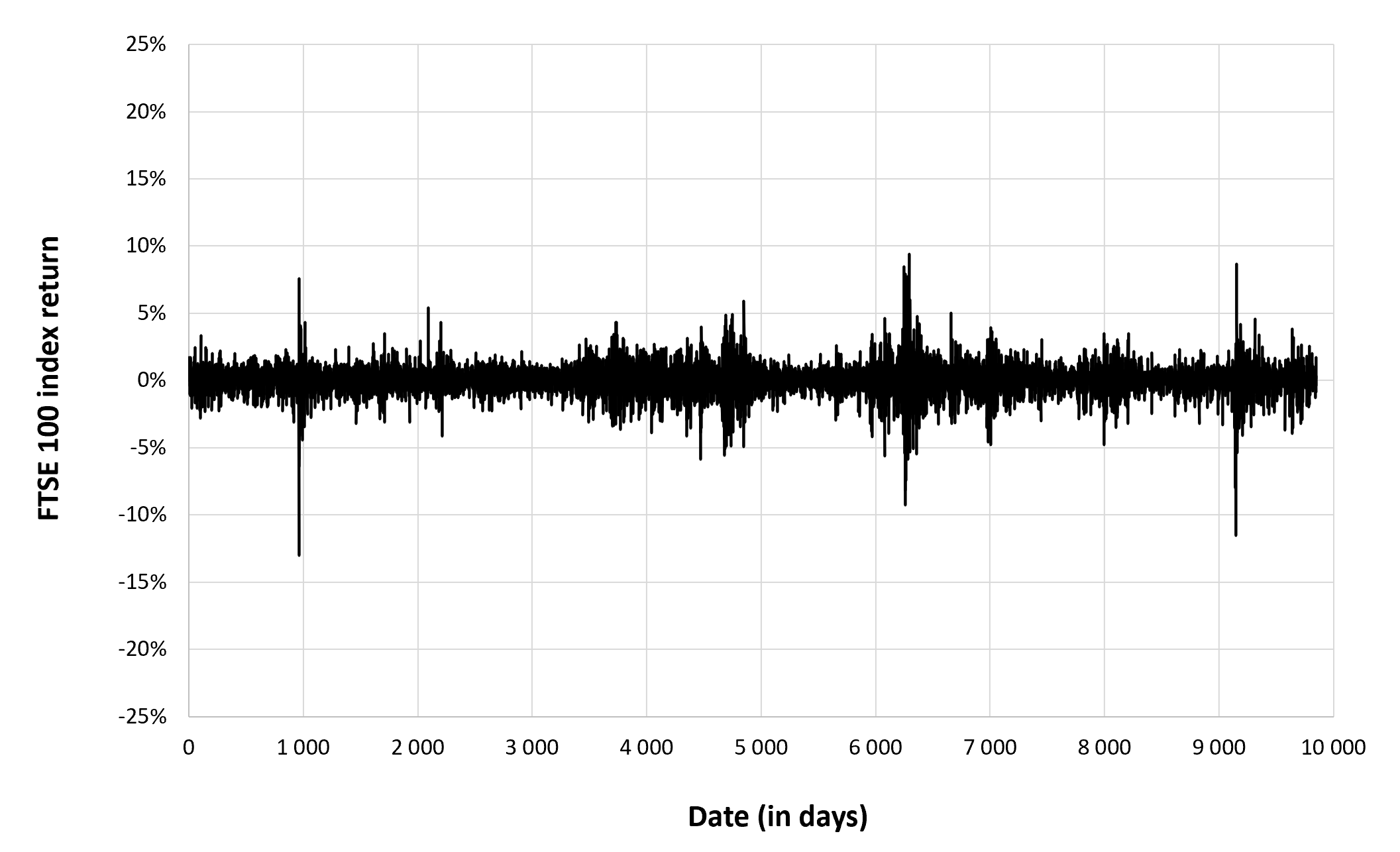

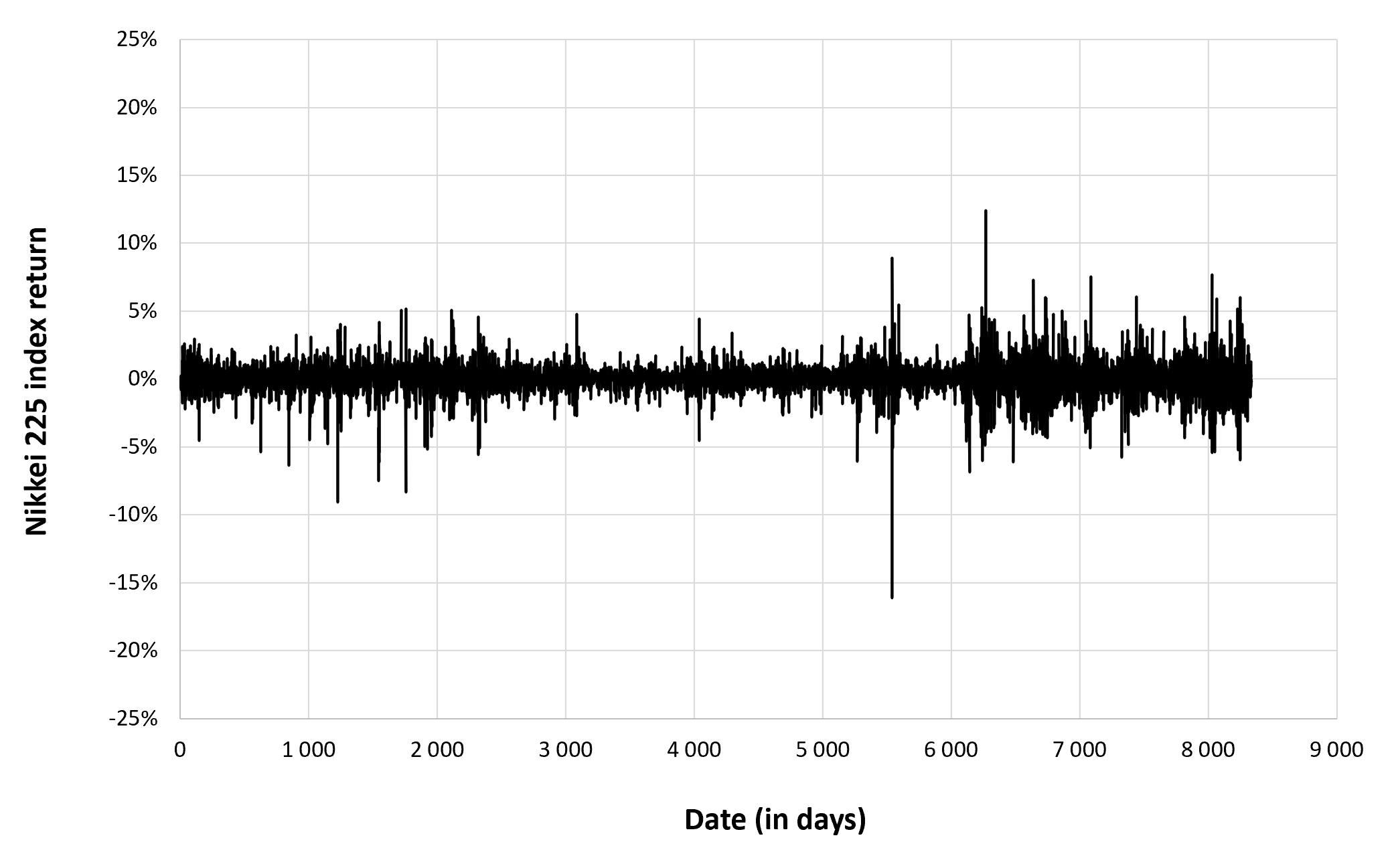

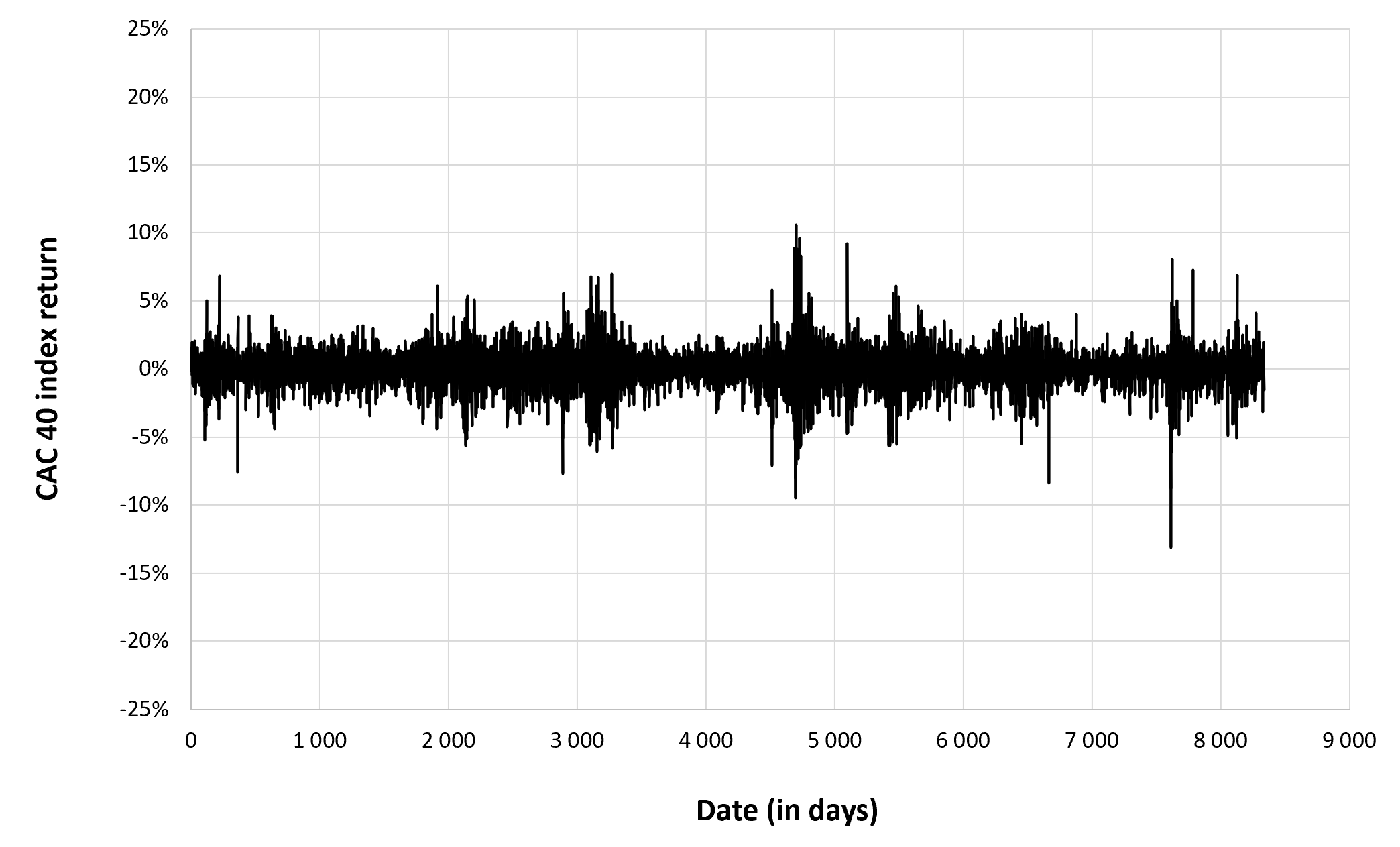

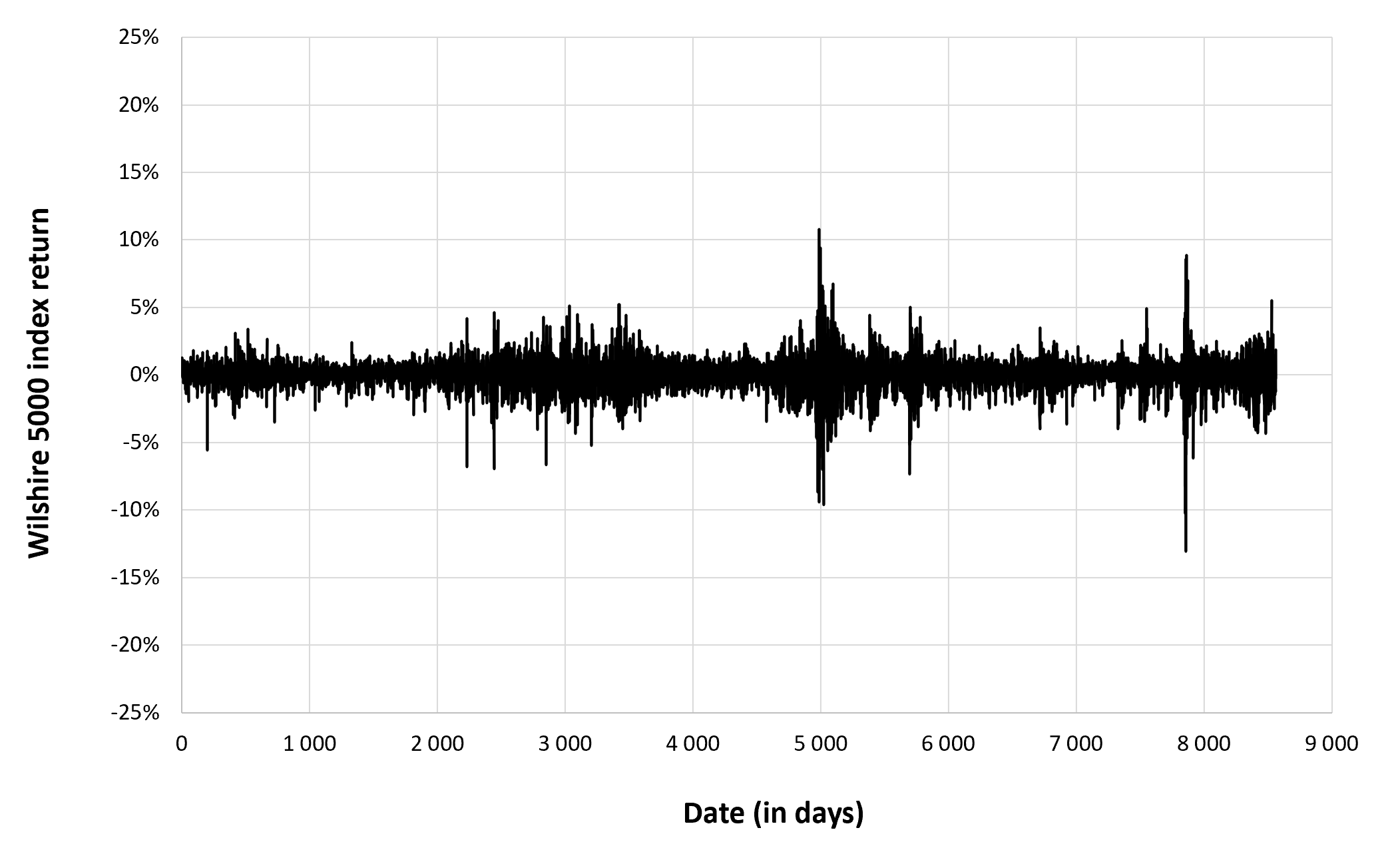

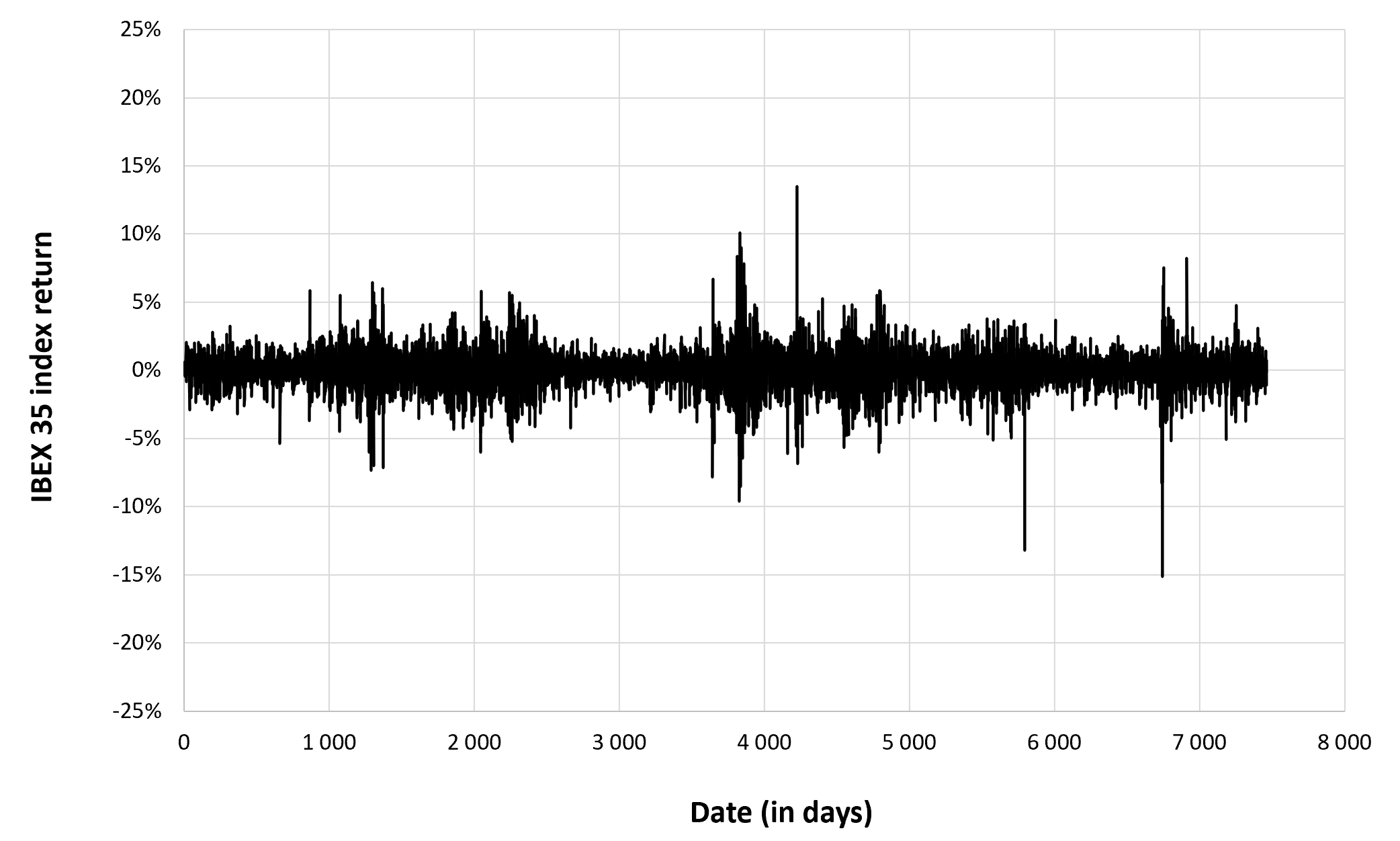

Figure 2 below gives the evolution of the IBEX 35 index returns from July 12, 1993 to December 30, 2022 on a daily basis.

Figure 2. Evolution of the IBEX 35 index returns.

Source: computation by the author (data: Yahoo! Finance website).

Summary statistics for the IBEX 35 index

The R program that you can download above also allows you to compute summary statistics about the returns of the IBEX 35 index.

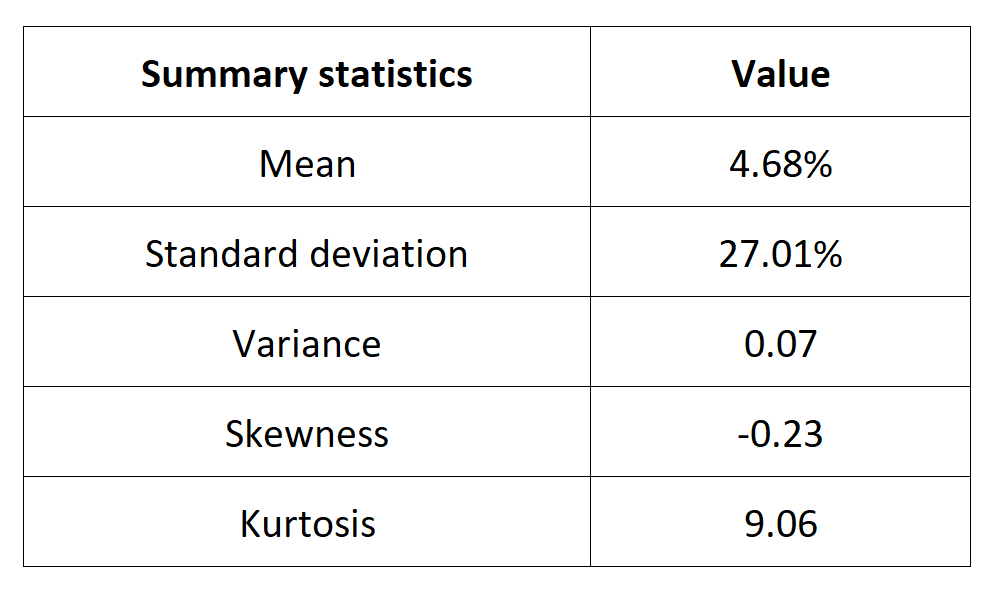

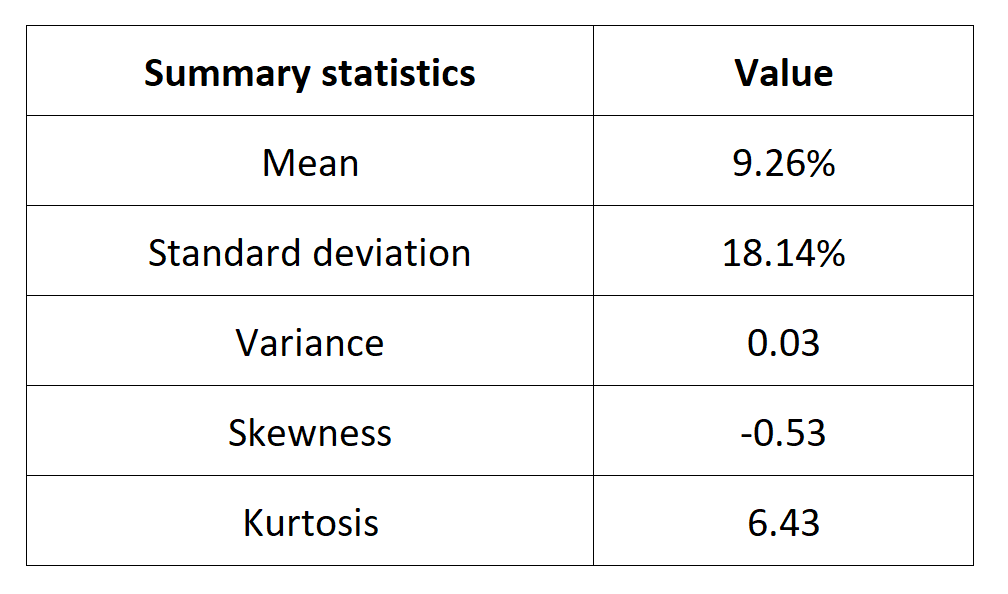

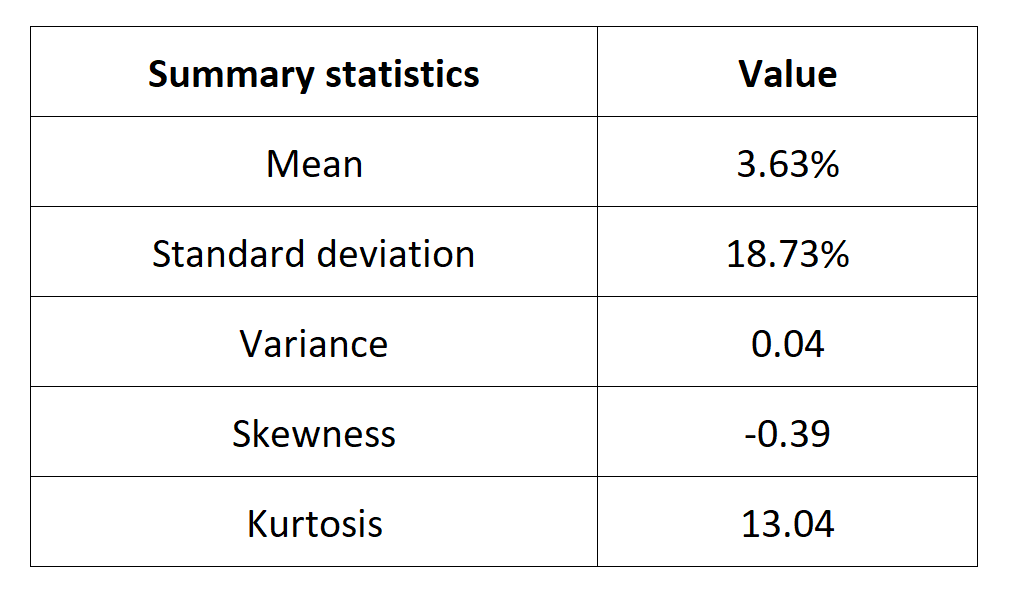

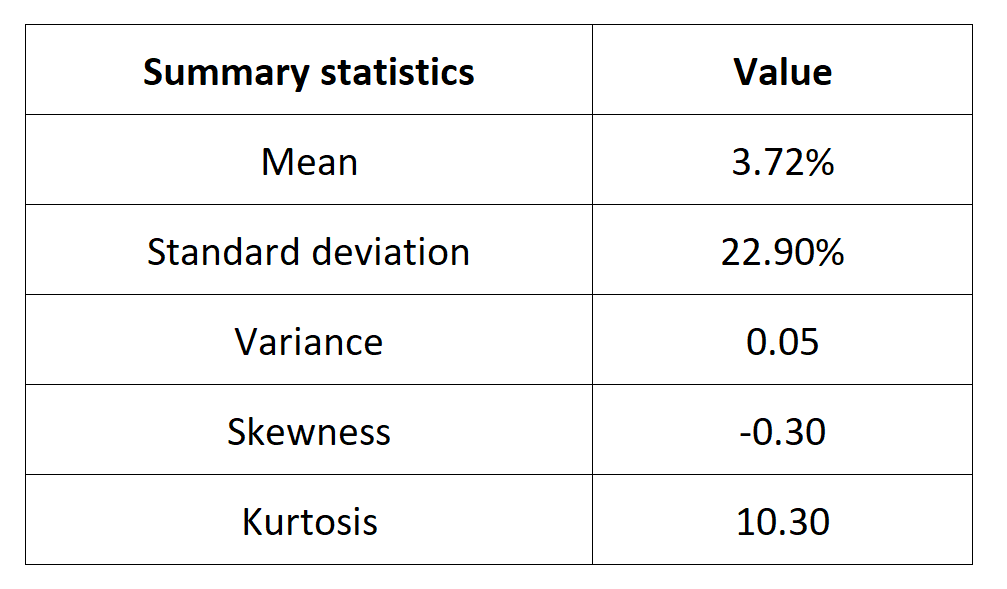

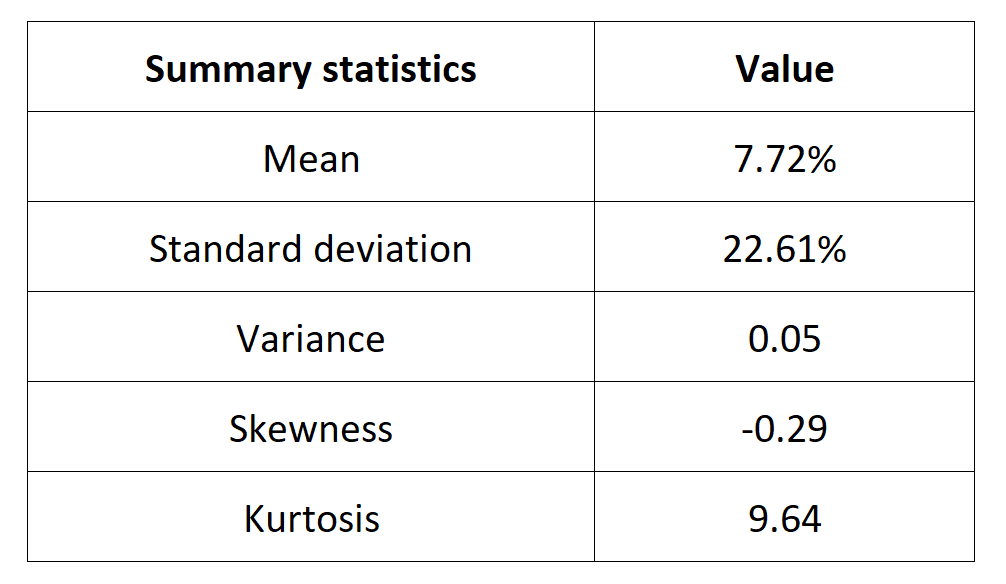

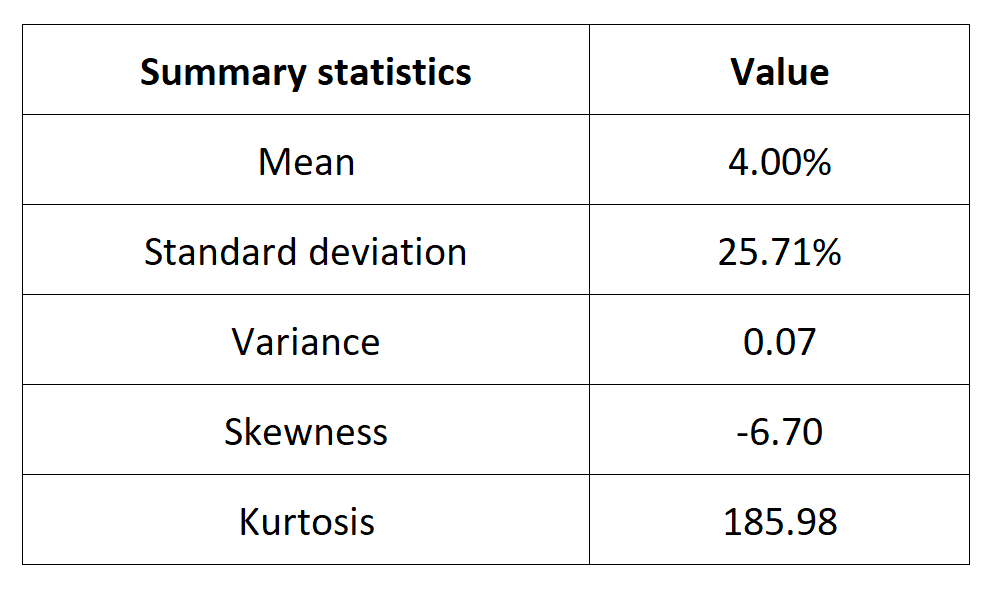

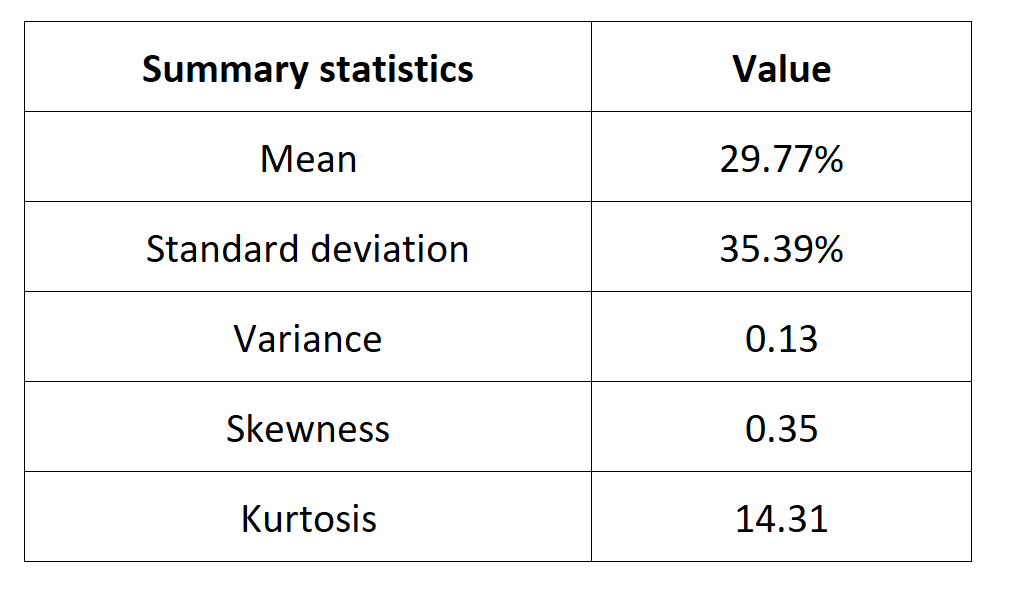

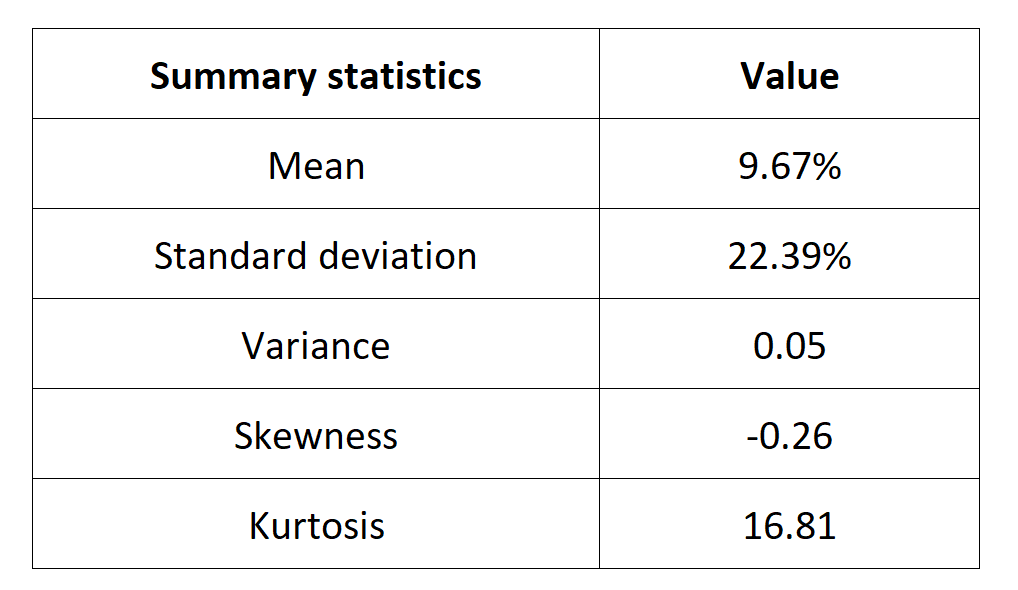

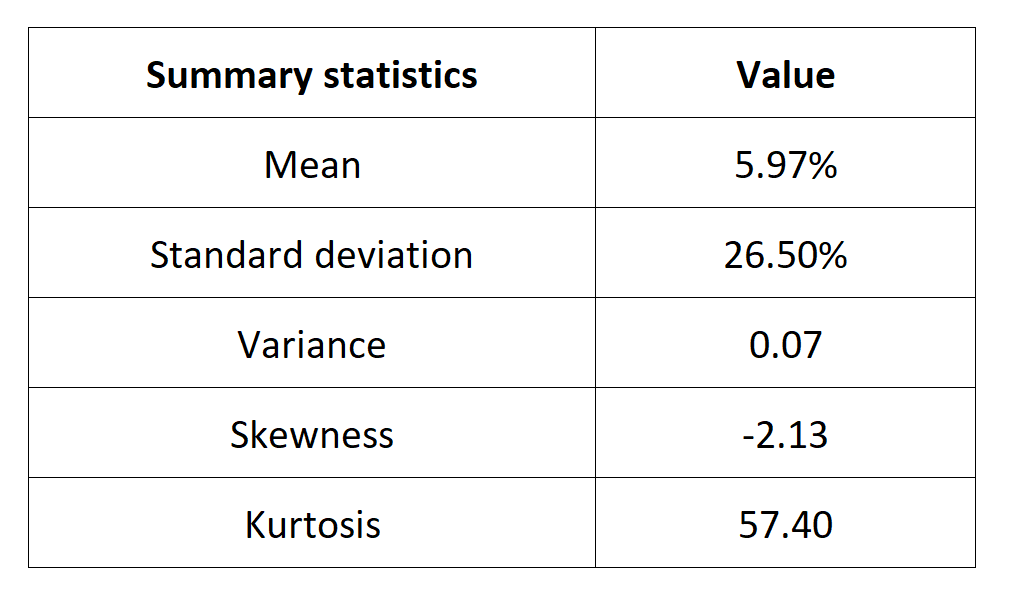

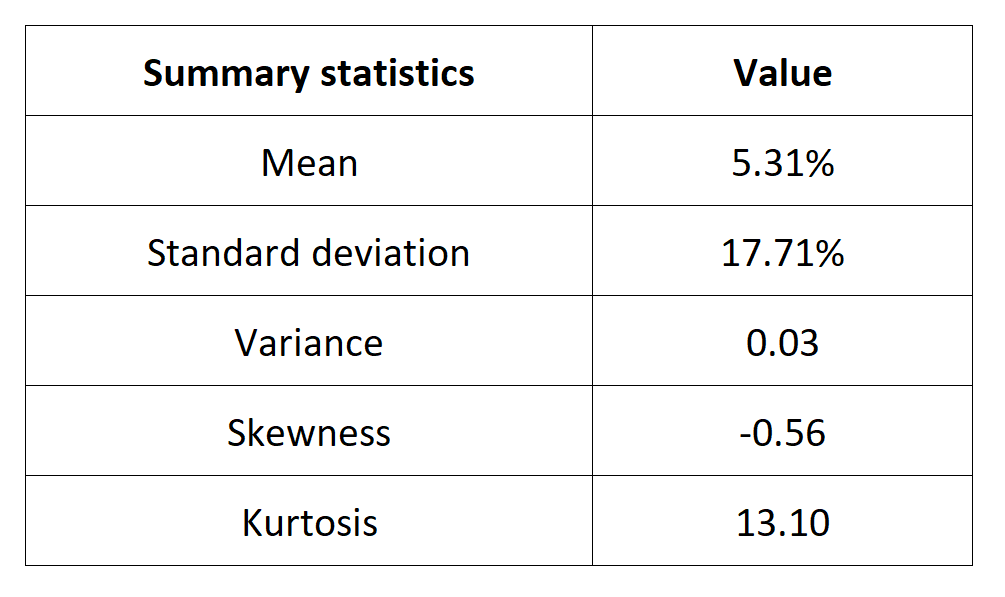

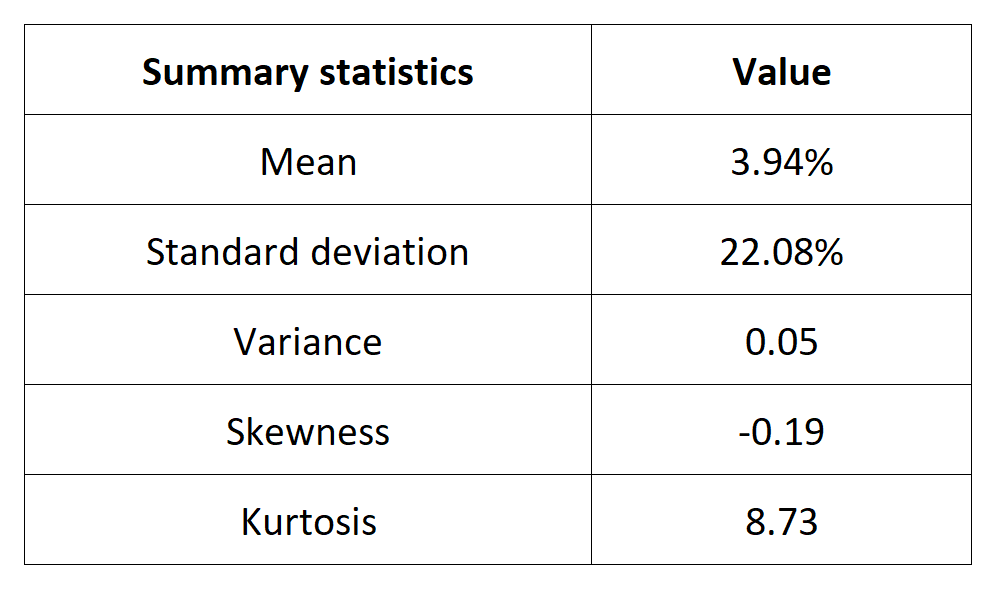

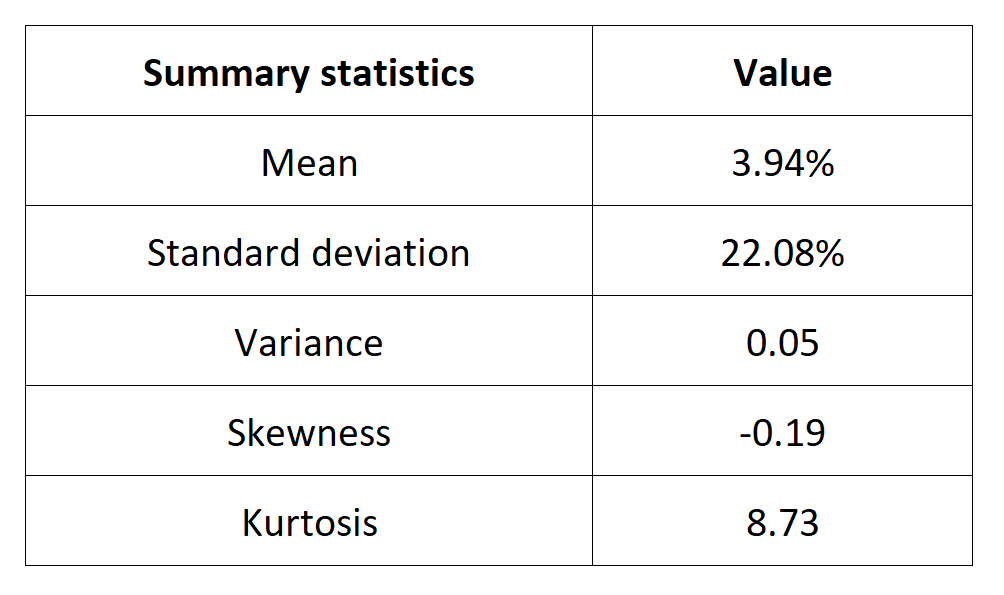

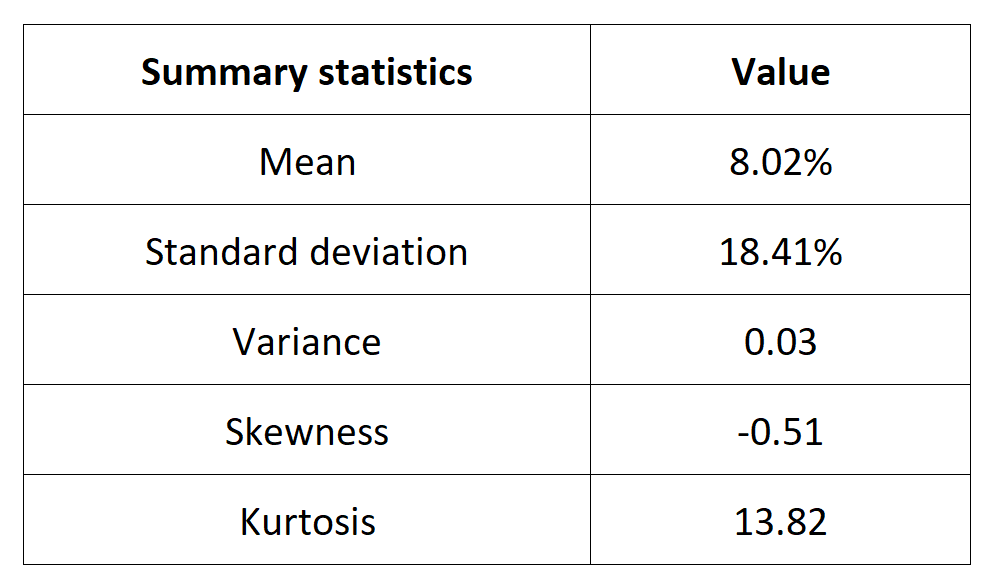

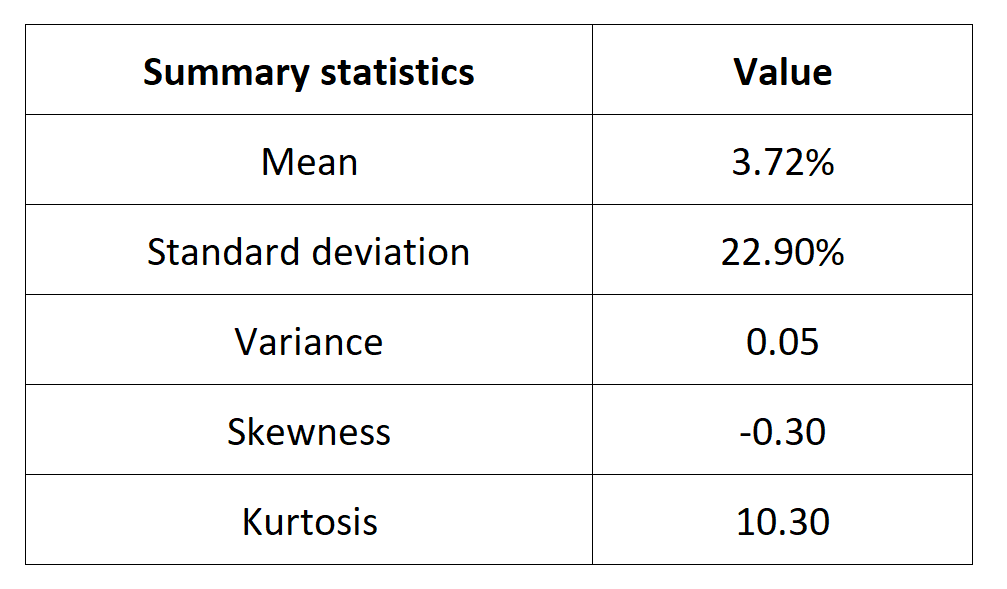

Table 4 below presents the following summary statistics estimated for the IBEX 35 index:

- The mean

- The standard deviation (the square root of the variance)

- The skewness

- The kurtosis.

The mean, the standard deviation / variance, the skewness, and the kurtosis correspond to the first, second, third, and fourth moments of the statistical distribution of returns, respectively.

Table 4. Summary statistics for the IBEX 35 index.

Source: computation by the author (data: Yahoo! Finance website).
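The four moments listed above can be estimated from the daily returns as follows. This is a sketch, not the R program behind Table 4: it assumes the sample standard deviation (divisor n - 1) and simple moment-based estimators for skewness and kurtosis, and it reports raw kurtosis (equal to 3 for a Gaussian distribution). The R program may use slightly different estimators or report excess kurtosis (kurtosis minus 3).

```python
import statistics


def summary_statistics(returns: list[float]) -> dict[str, float]:
    """Mean, standard deviation, skewness and kurtosis of a series of returns."""
    n = len(returns)
    if n < 2:
        raise ValueError("need at least two returns")
    mean = statistics.fmean(returns)
    std = statistics.stdev(returns)  # sample standard deviation (divisor n - 1)
    # Central moments with divisor n, used for the standardized 3rd and 4th moments
    m2 = sum((r - mean) ** 2 for r in returns) / n
    m3 = sum((r - mean) ** 3 for r in returns) / n
    m4 = sum((r - mean) ** 4 for r in returns) / n
    if m2 == 0:
        raise ValueError("returns have zero dispersion")
    return {
        "mean": mean,
        "std": std,
        "skewness": m3 / m2 ** 1.5,  # 0 for a symmetric distribution
        "kurtosis": m4 / m2 ** 2,    # 3 for a Gaussian; above 3 indicates fat tails
    }


# Hypothetical daily returns; with real data, pass the full series of log returns
stats = summary_statistics([-0.011, -0.004, 0.001, 0.003, 0.012])
print(stats)
```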

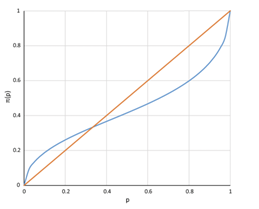

Statistical distribution of the IBEX 35 index returns

Historical distribution

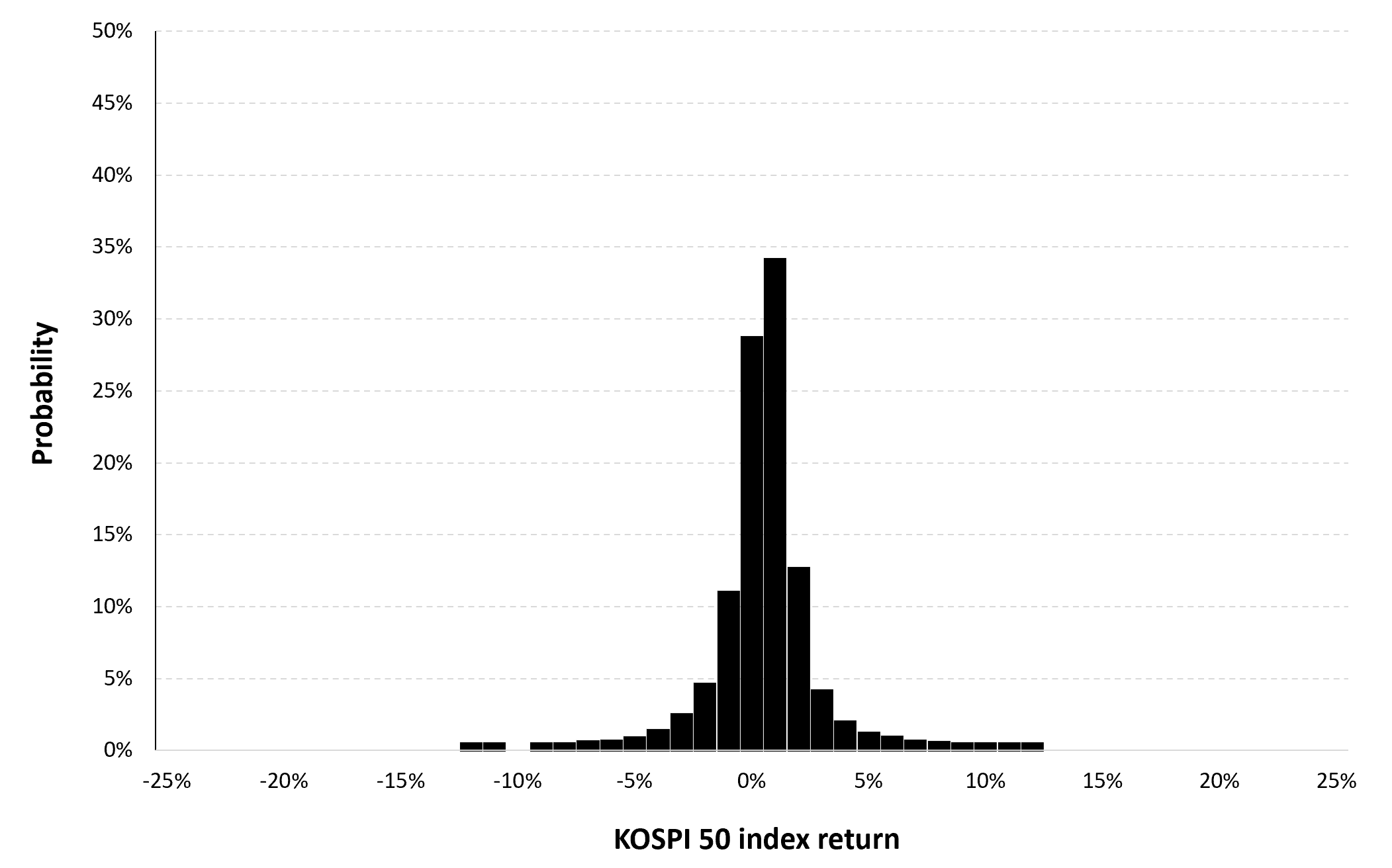

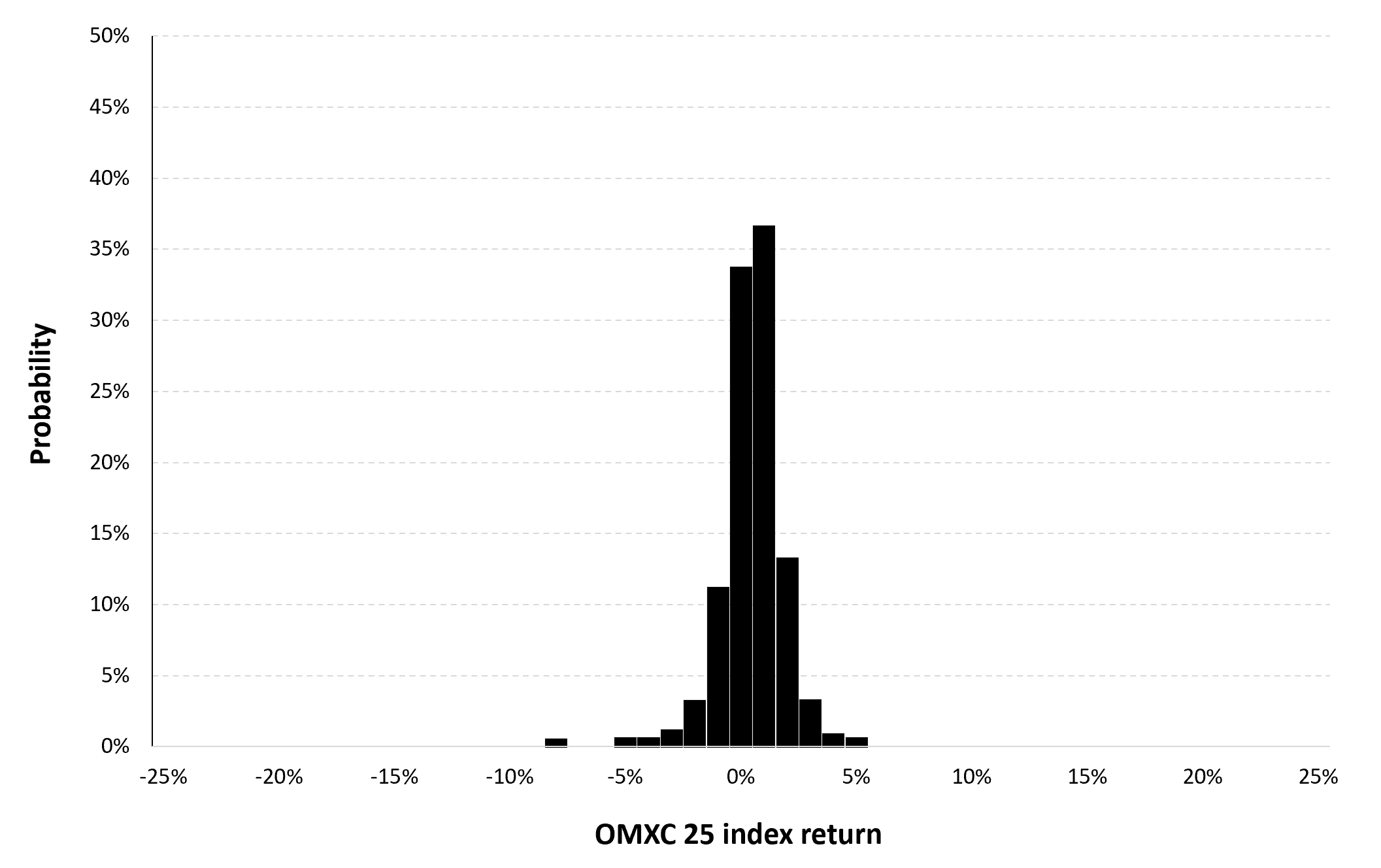

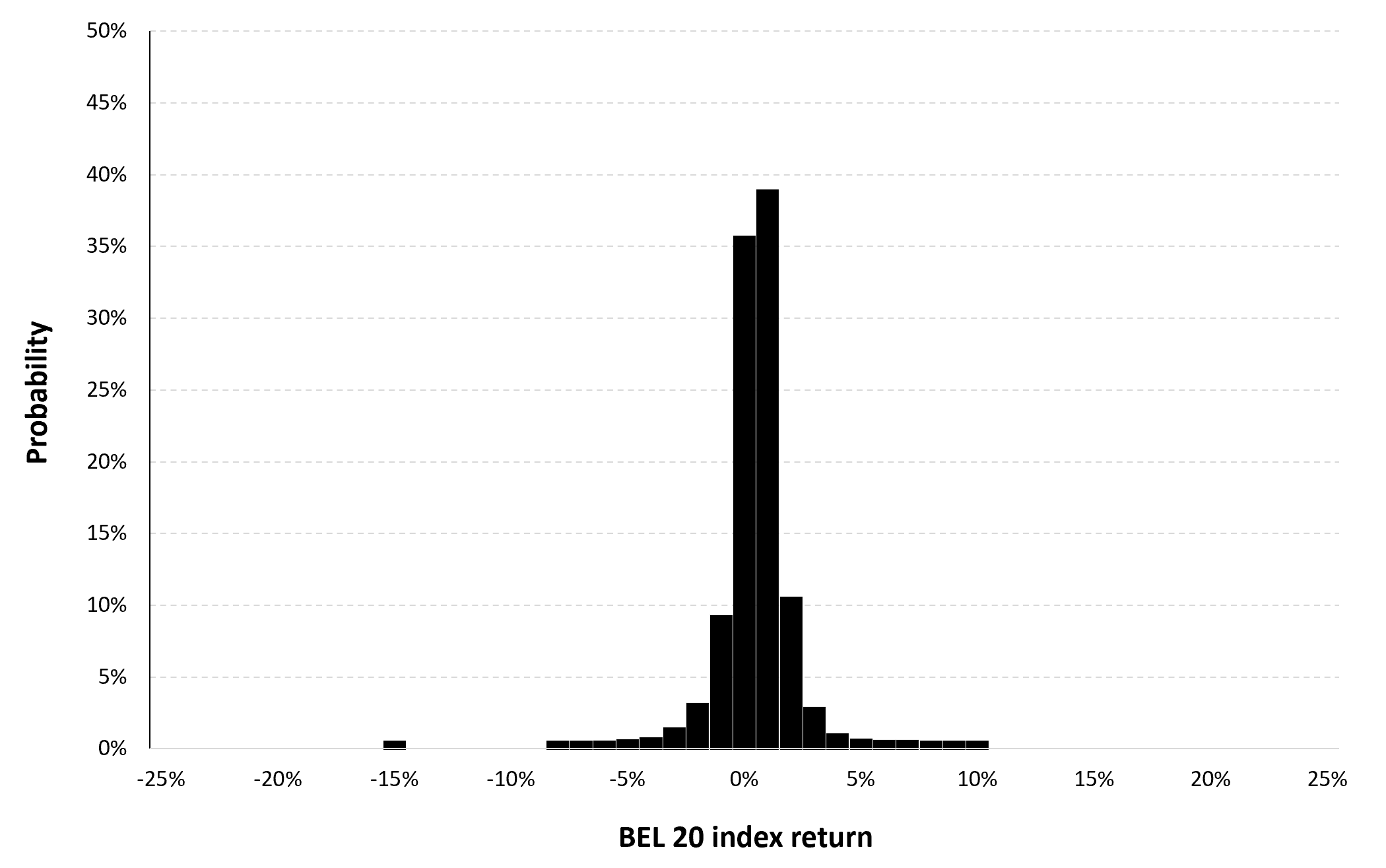

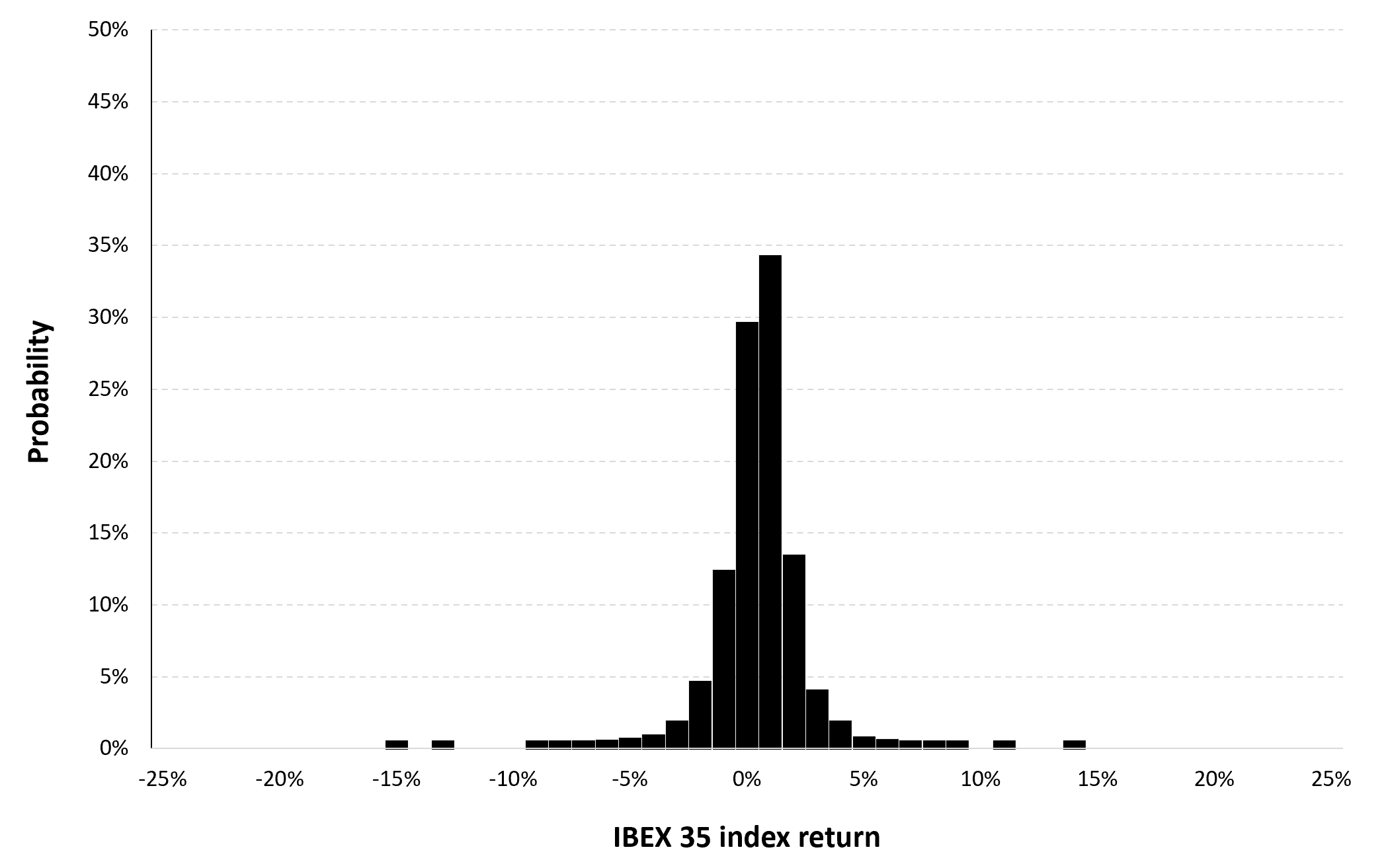

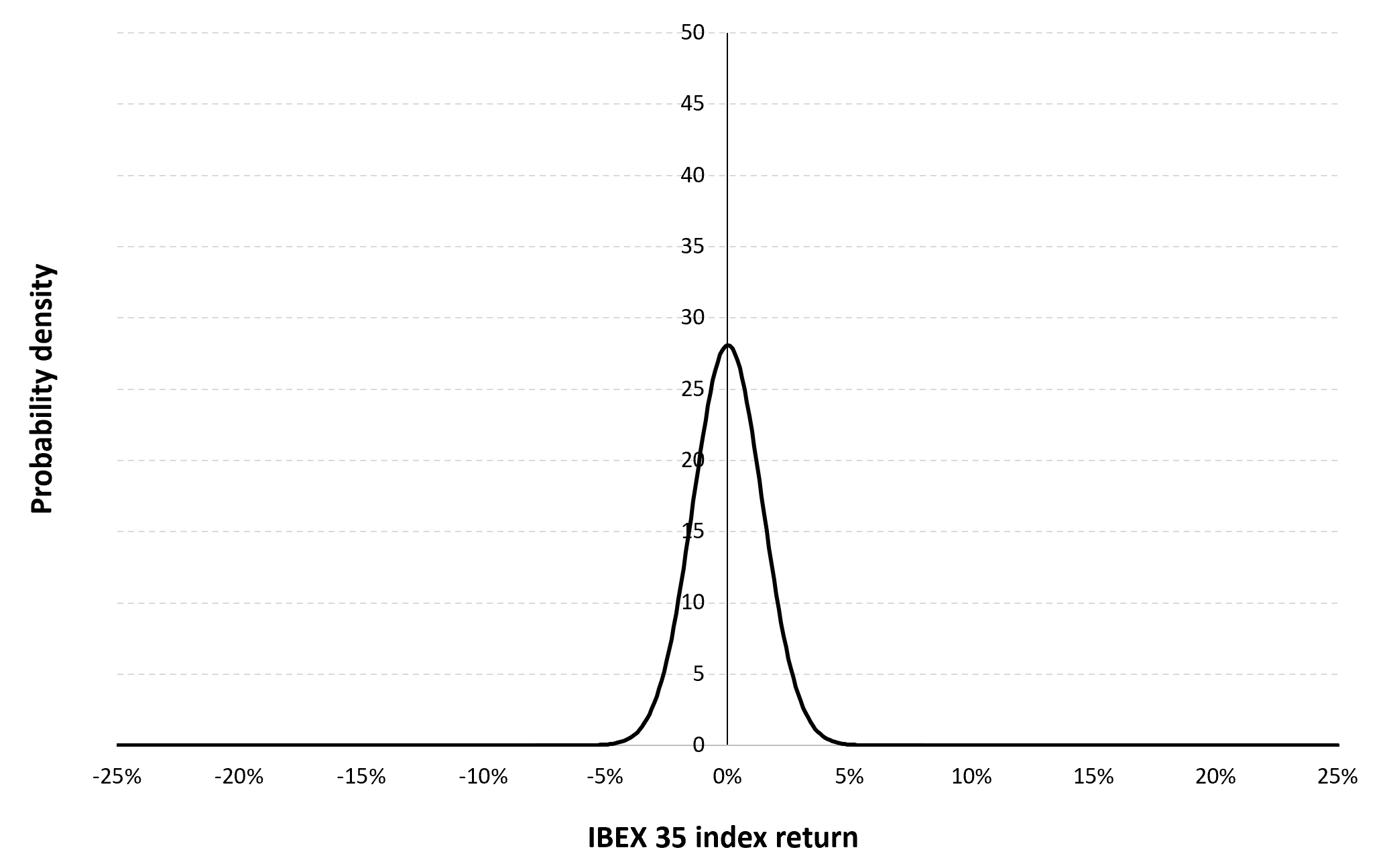

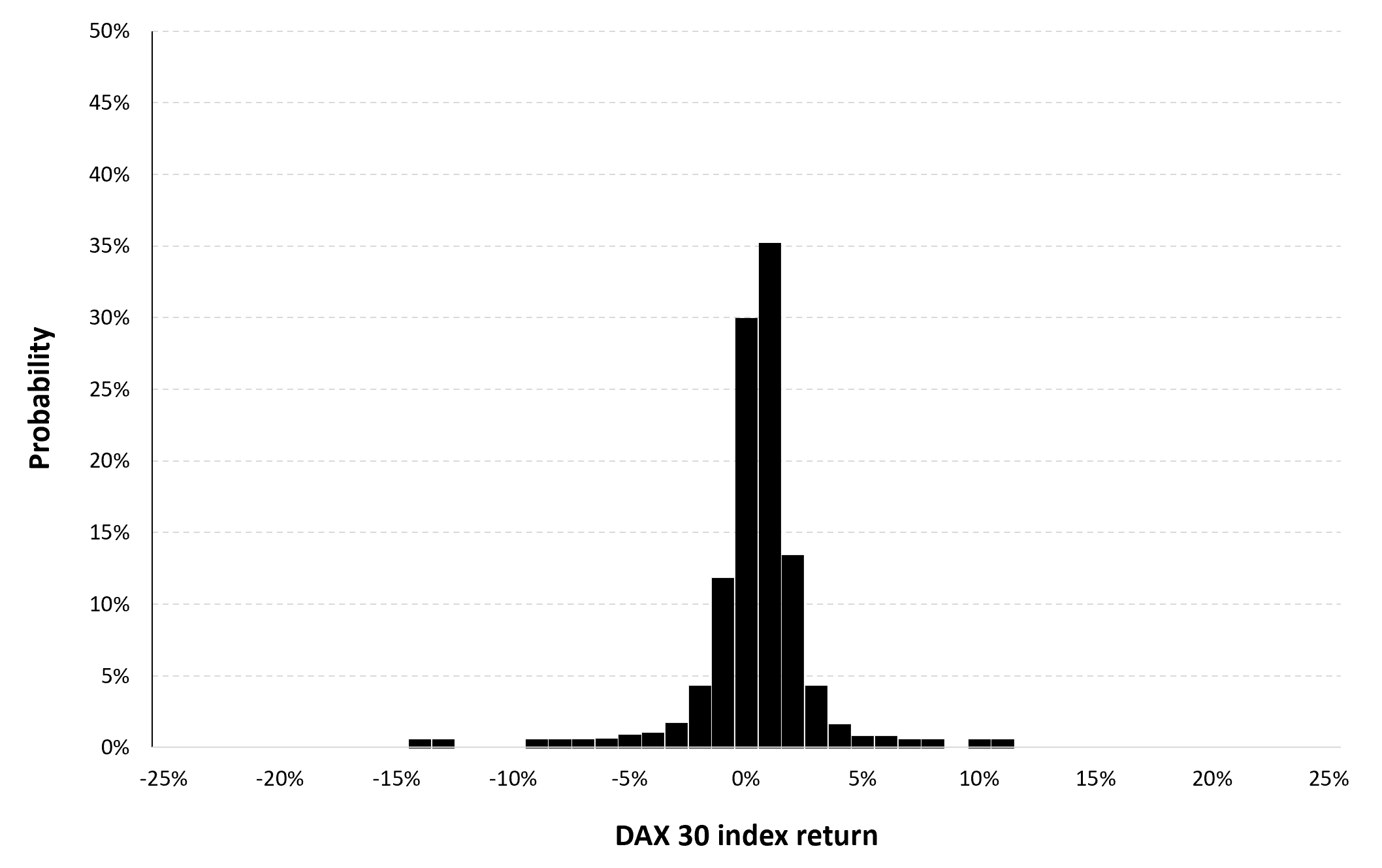

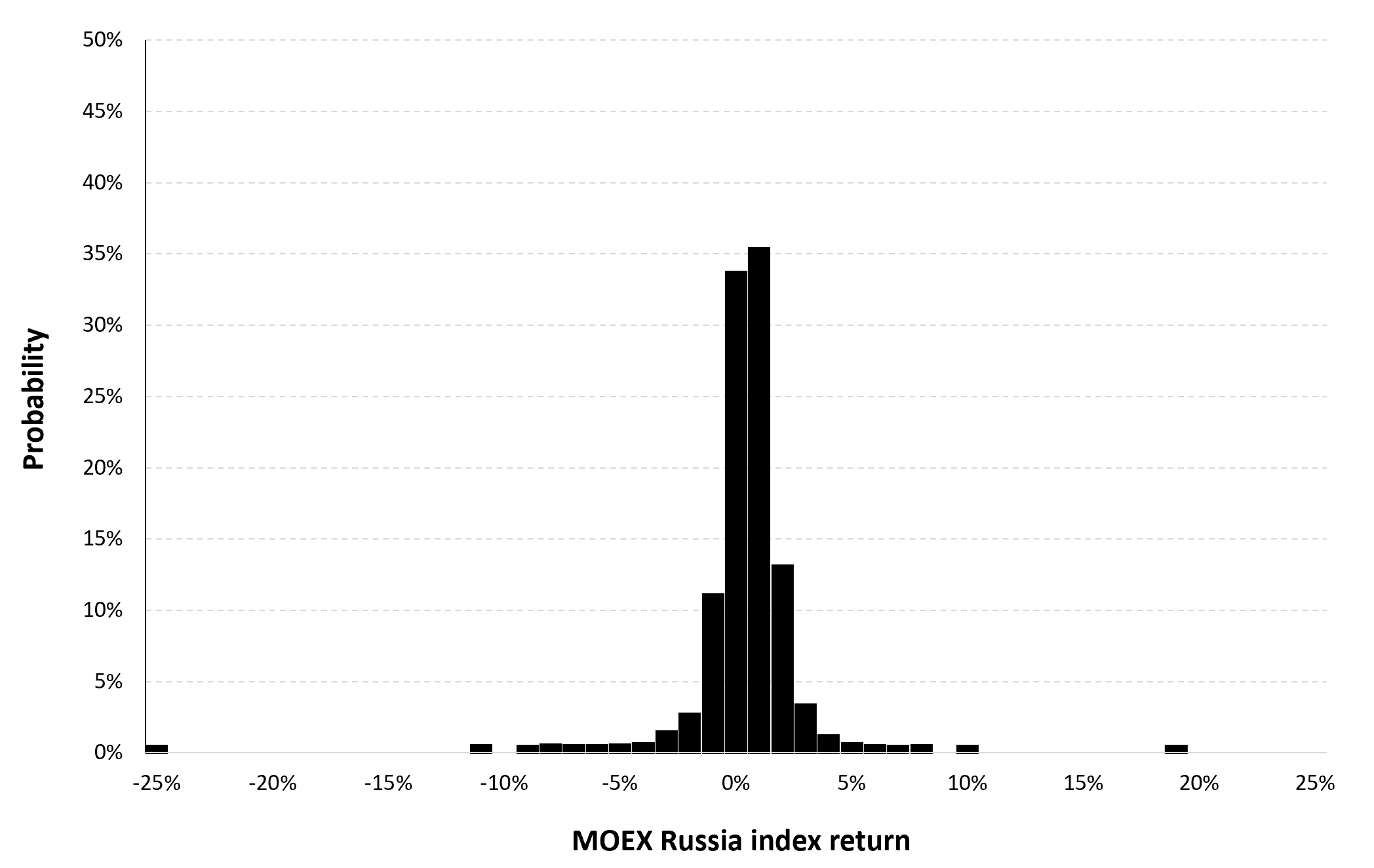

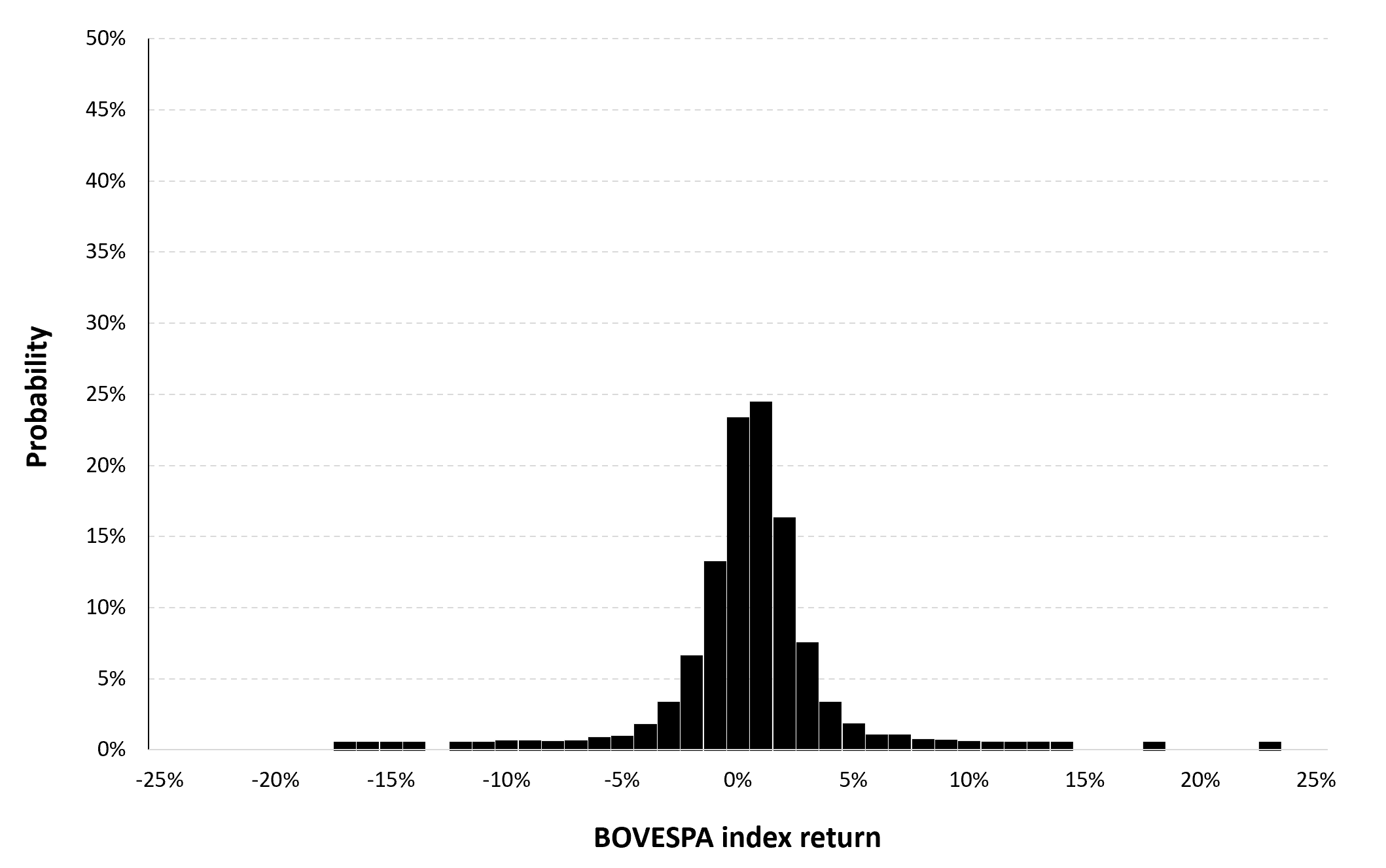

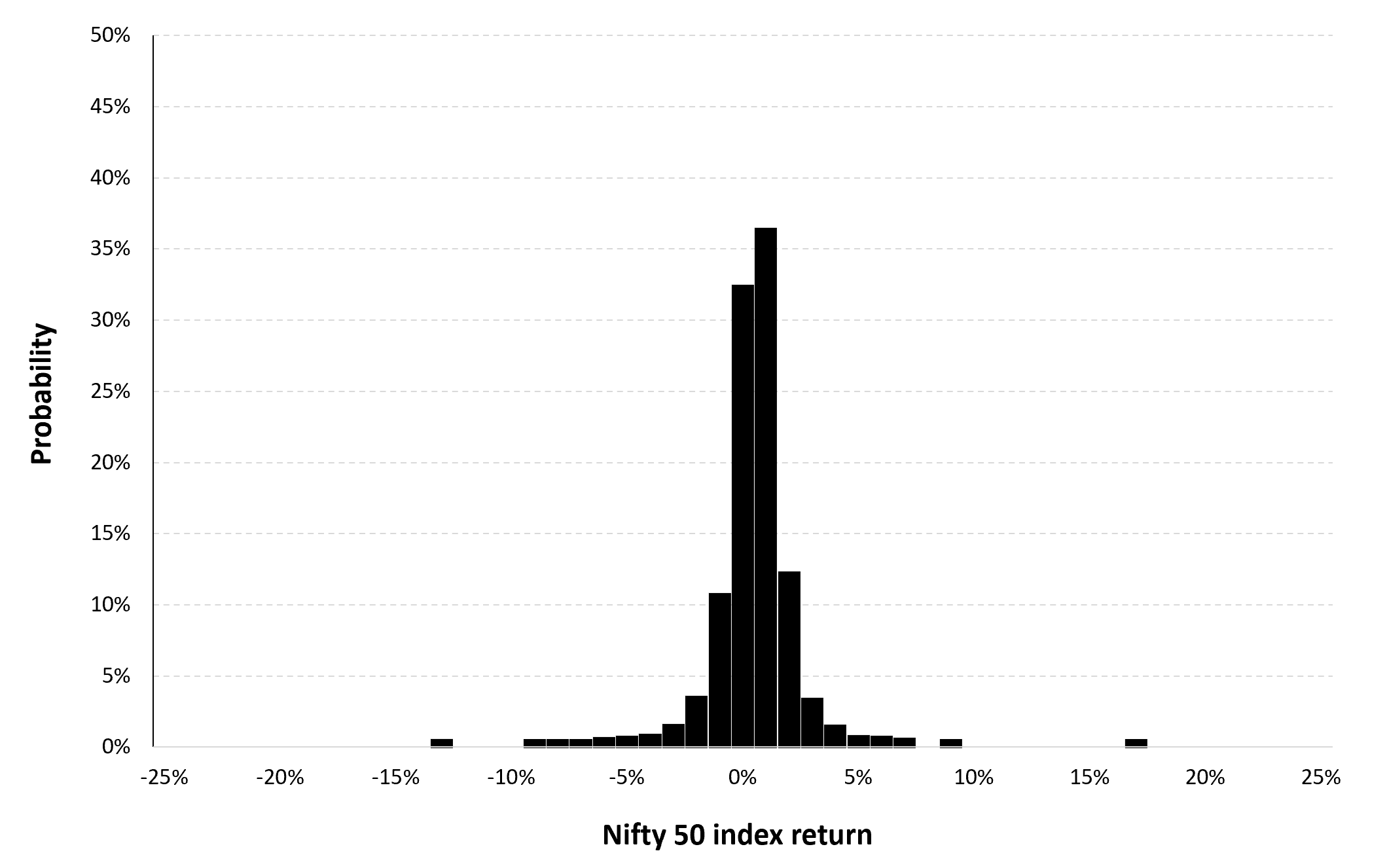

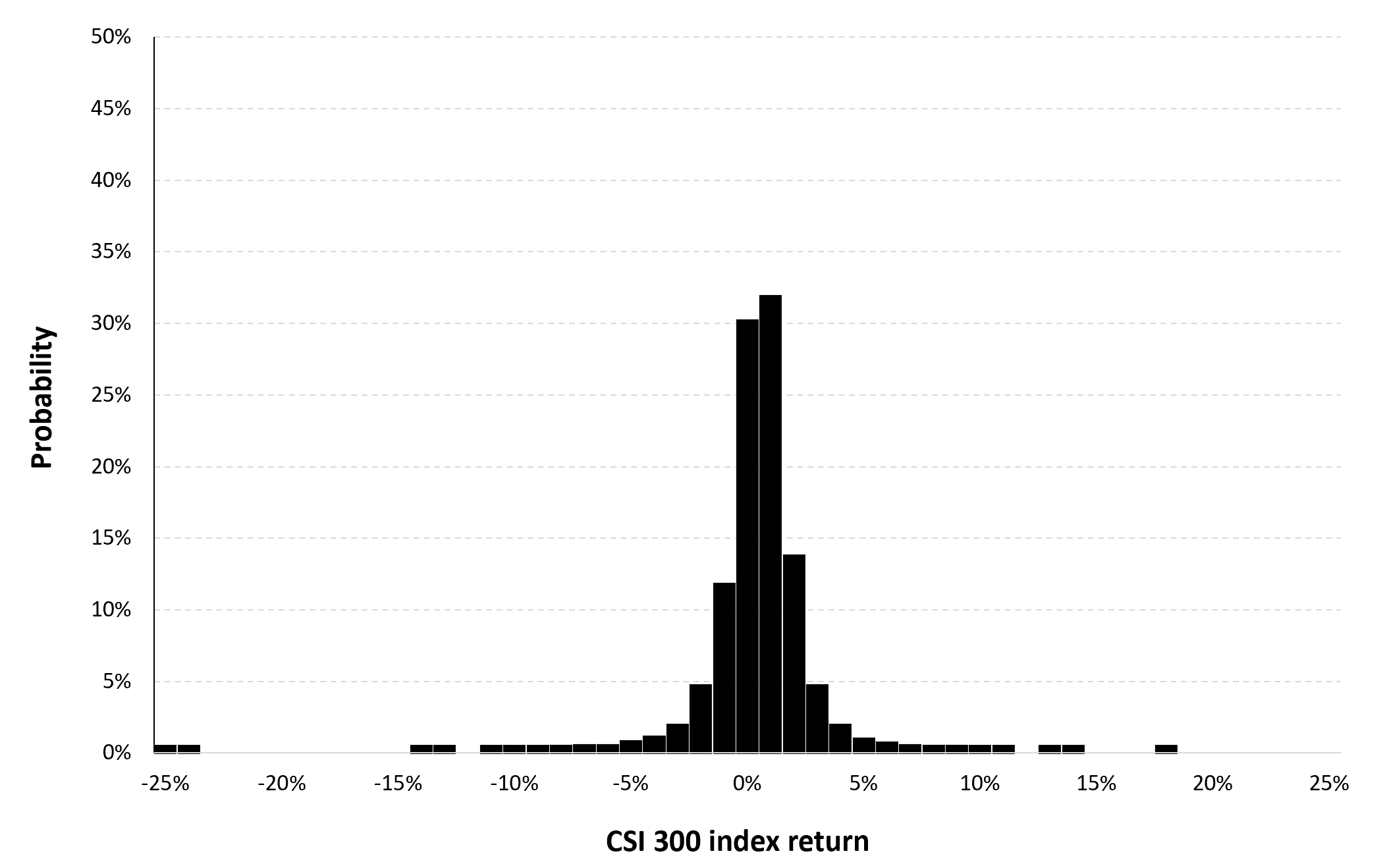

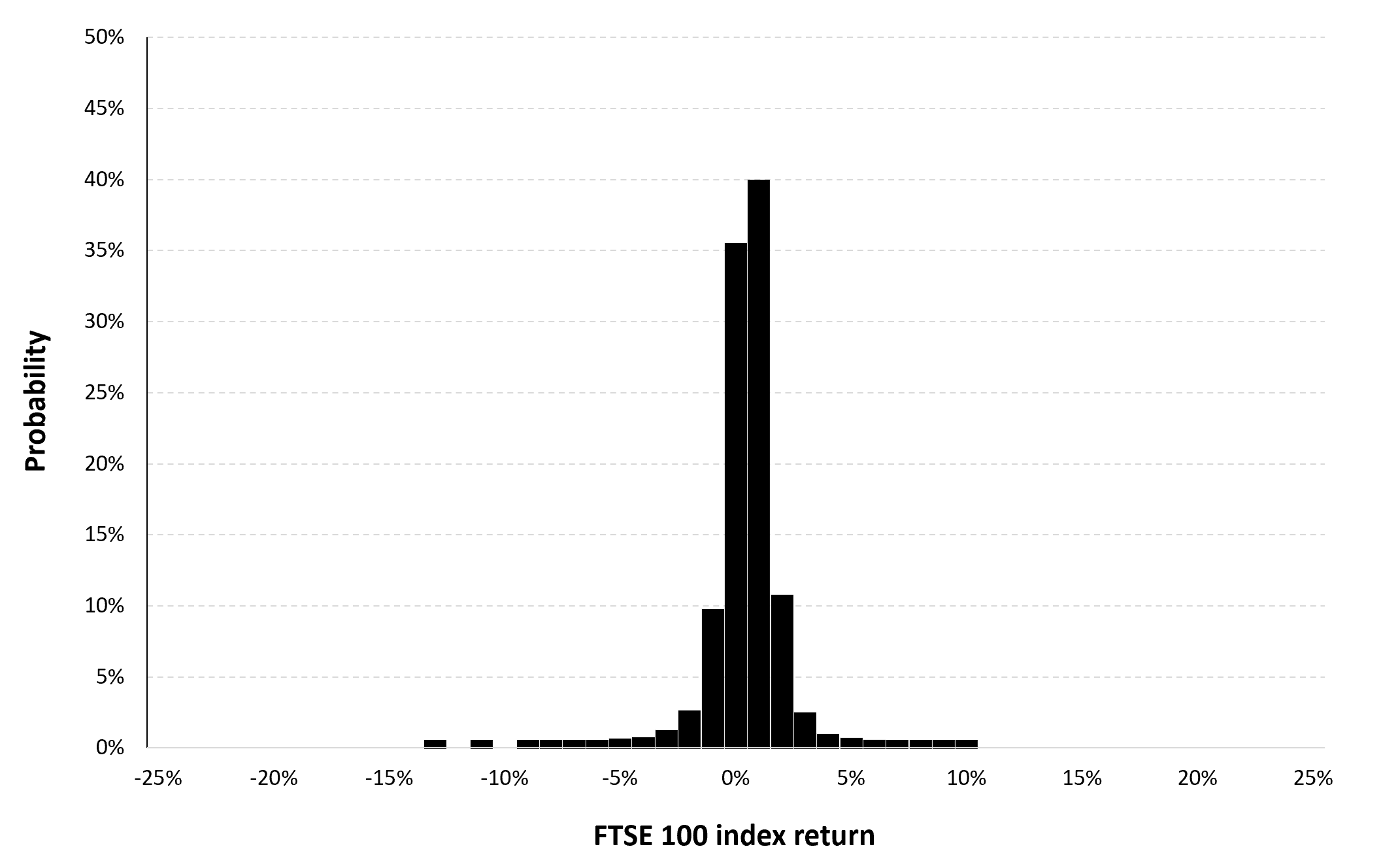

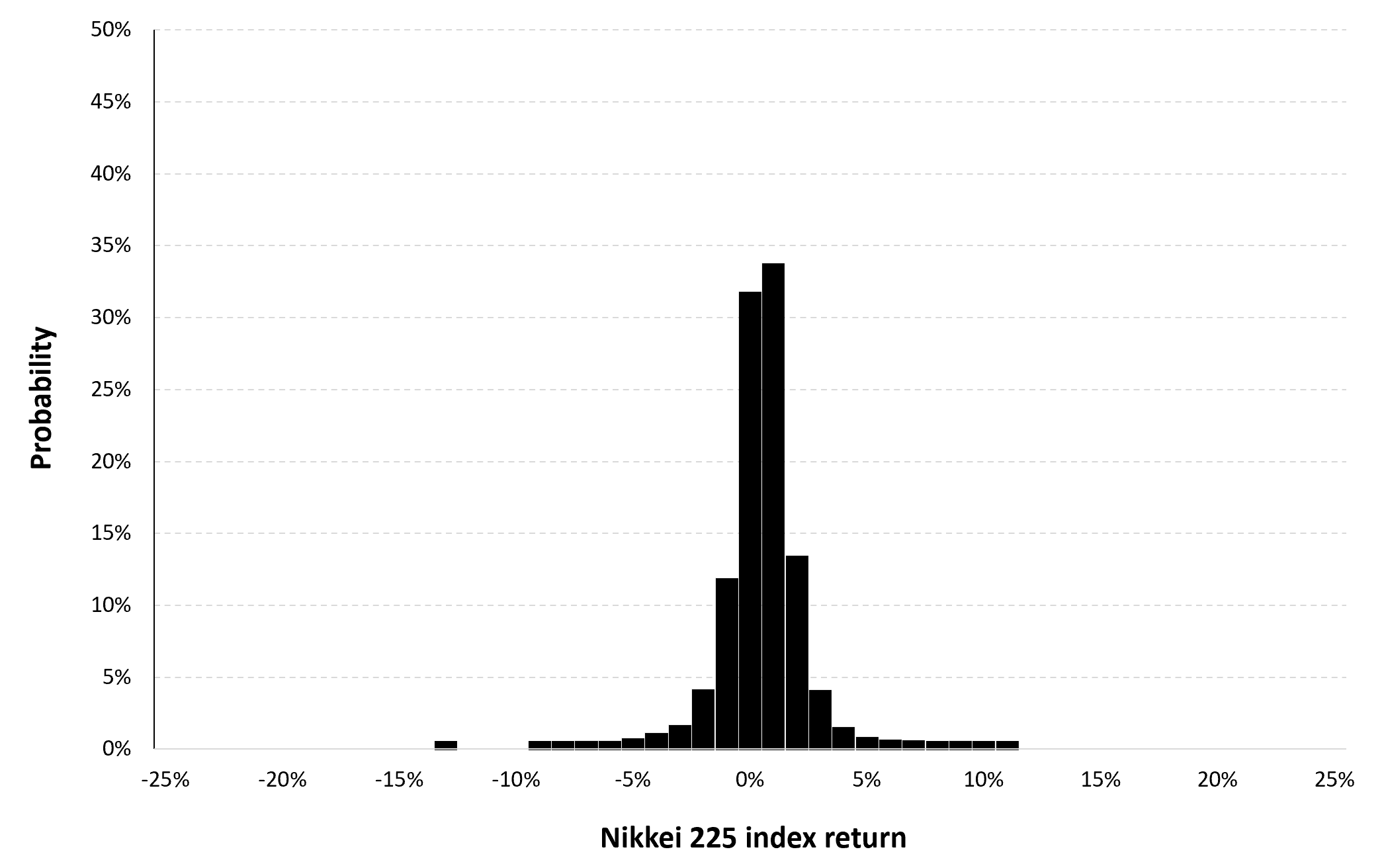

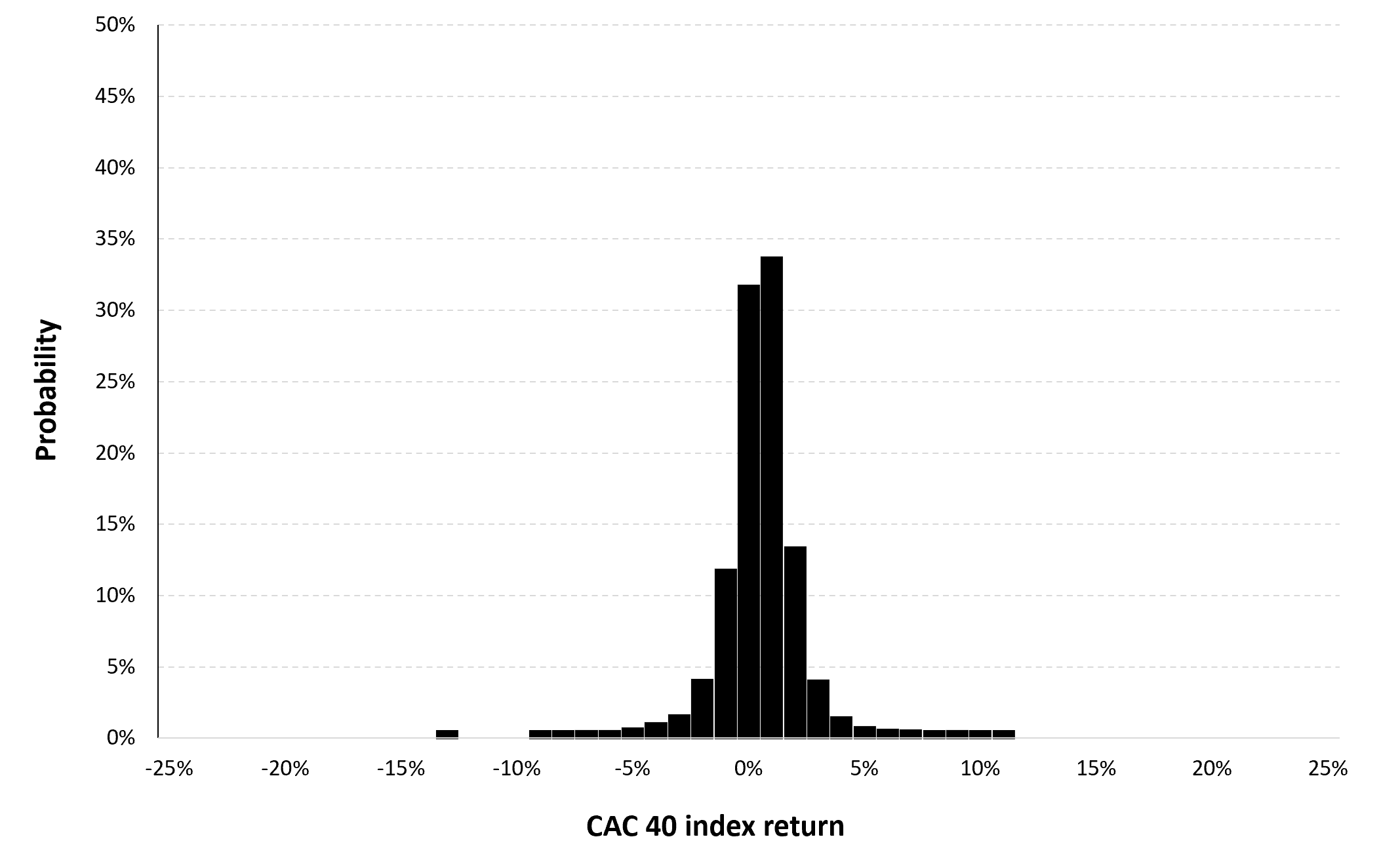

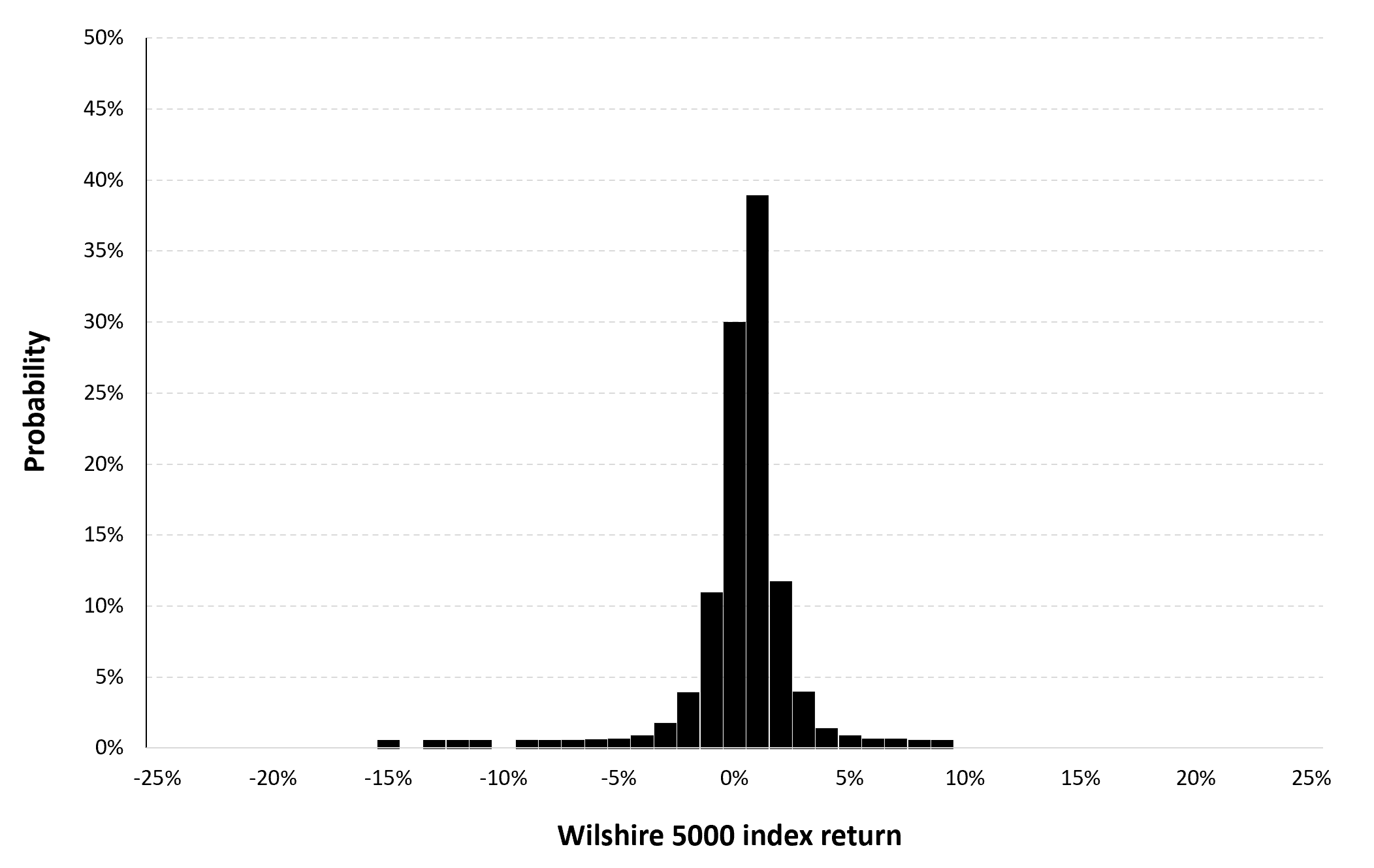

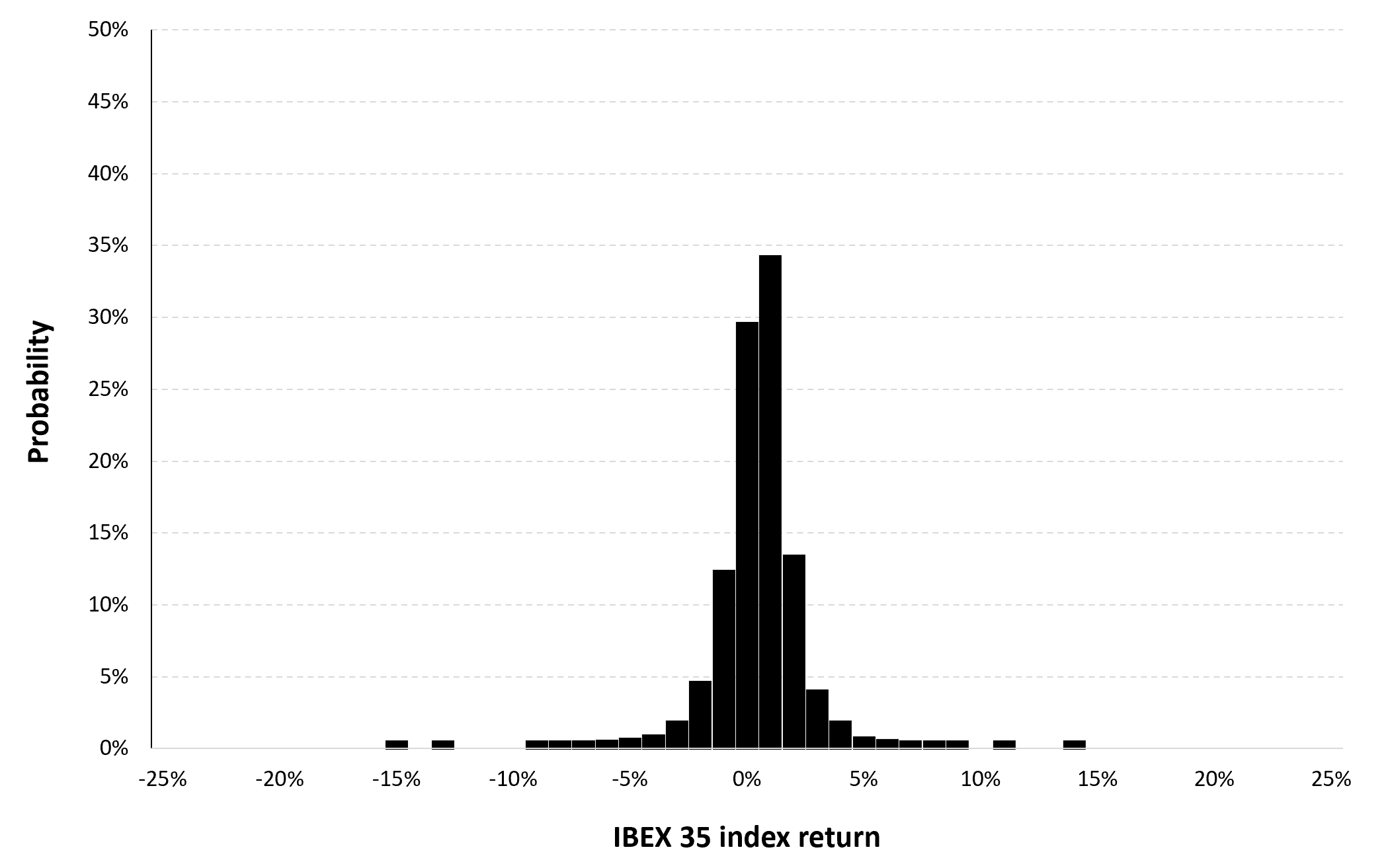

Figure 3 represents the historical distribution of the IBEX 35 index daily returns for the period from July 12, 1993 to December 30, 2022.

Figure 3. Historical distribution of the IBEX 35 index returns.

Source: computation by the author (data: Yahoo! Finance website).
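A historical distribution such as the one in Figure 3 is obtained by counting how many daily returns fall into each interval (bin) of fixed width. A minimal sketch with hypothetical returns and a hypothetical bin width; the bin width used for the figure is not stated in the article.

```python
import math
from collections import Counter


def histogram(returns: list[float], bin_width: float) -> list[tuple[float, int]]:
    """Count returns in bins [k*w, (k+1)*w); return (lower edge, count) pairs, empty bins included."""
    if not returns or bin_width <= 0:
        raise ValueError("need returns and a positive bin width")
    counts = Counter(math.floor(r / bin_width) for r in returns)
    lo, hi = min(counts), max(counts)
    return [(k * bin_width, counts[k]) for k in range(lo, hi + 1)]


sample = [-0.011, -0.004, 0.001, 0.003, 0.012]  # hypothetical daily returns
for edge, n in histogram(sample, bin_width=0.005):
    print(f"{edge:+.3f} {'#' * n}")
```

Dividing each count by the total number of returns turns the counts into relative frequencies, which is what a plotted distribution usually shows.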

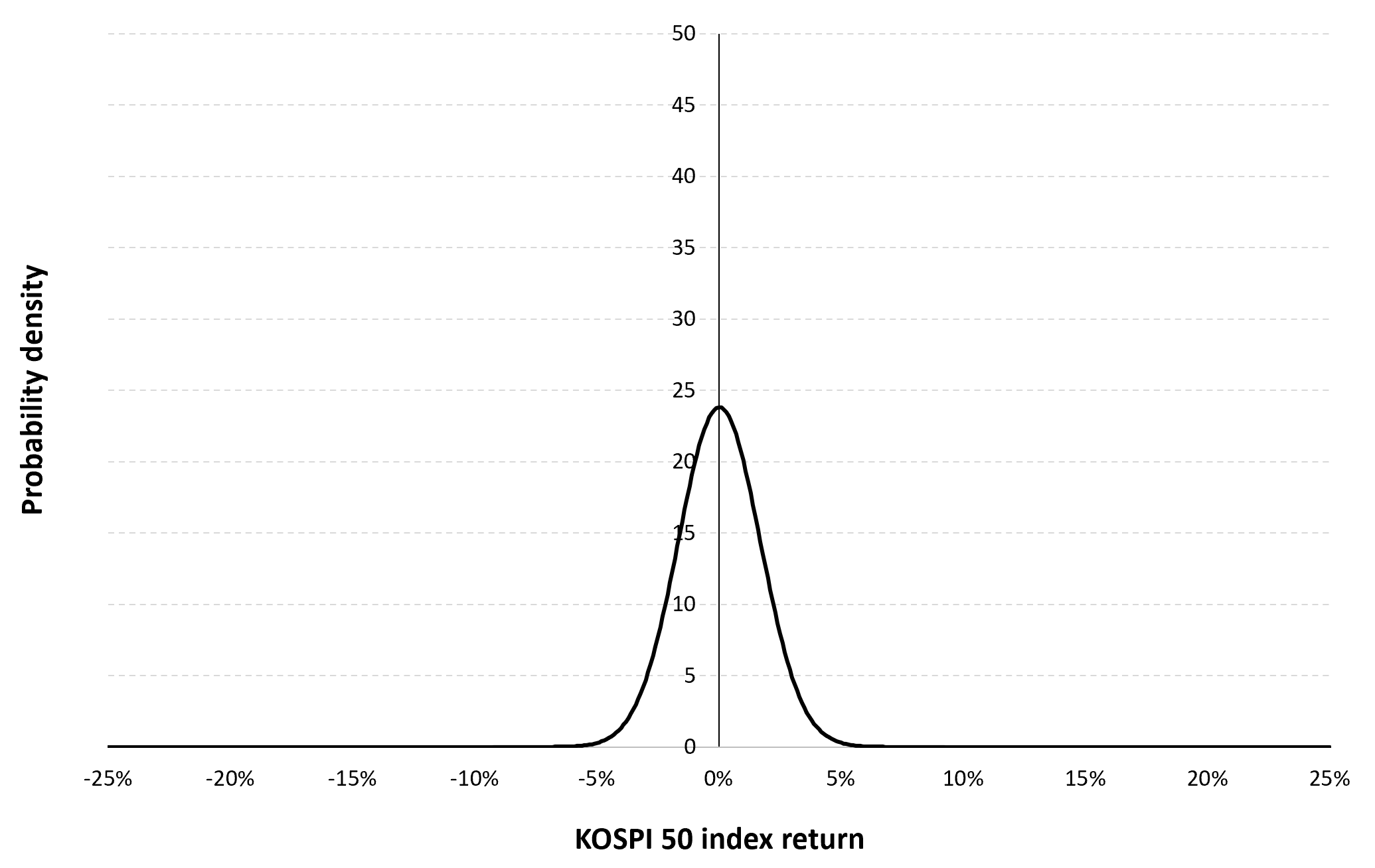

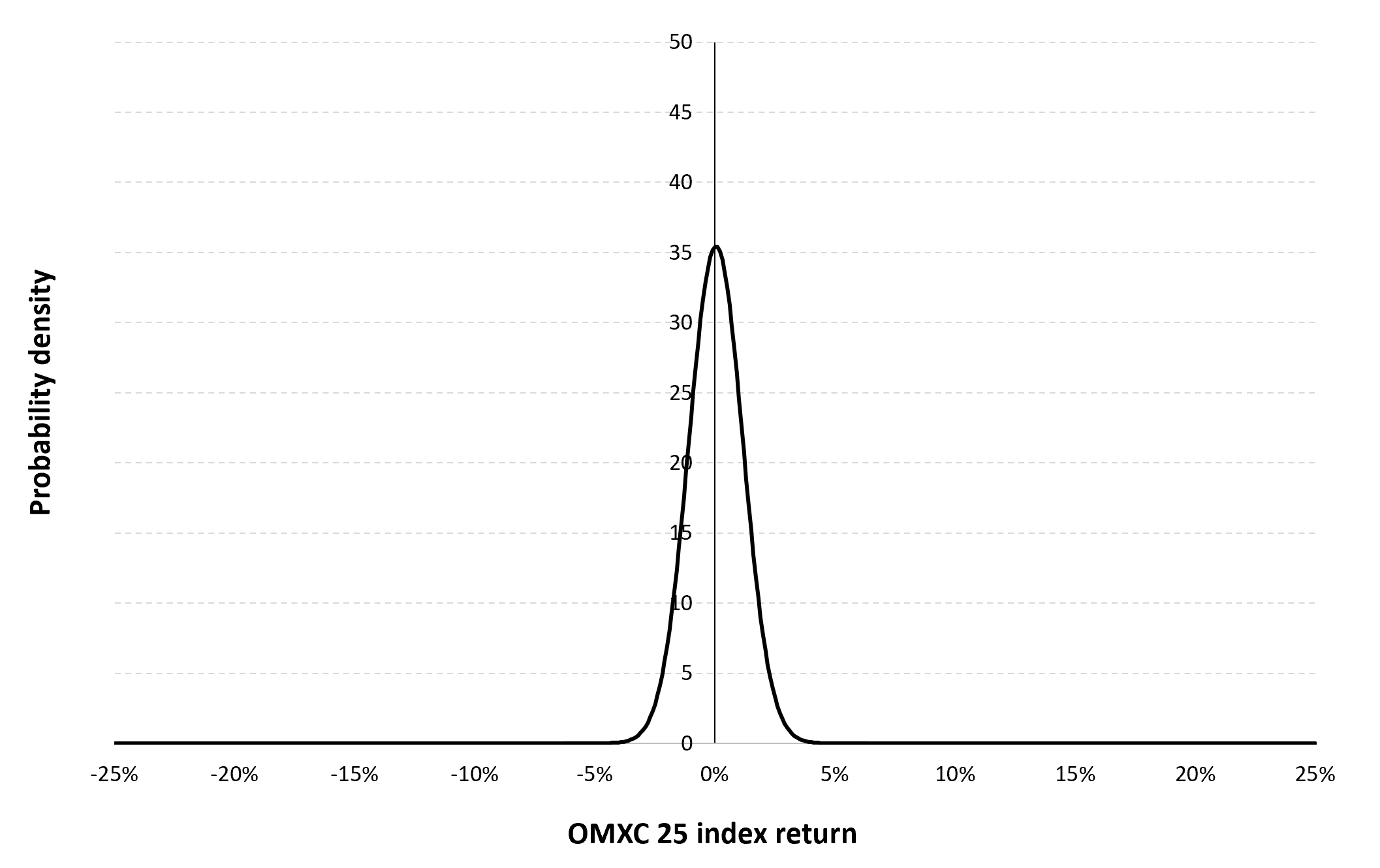

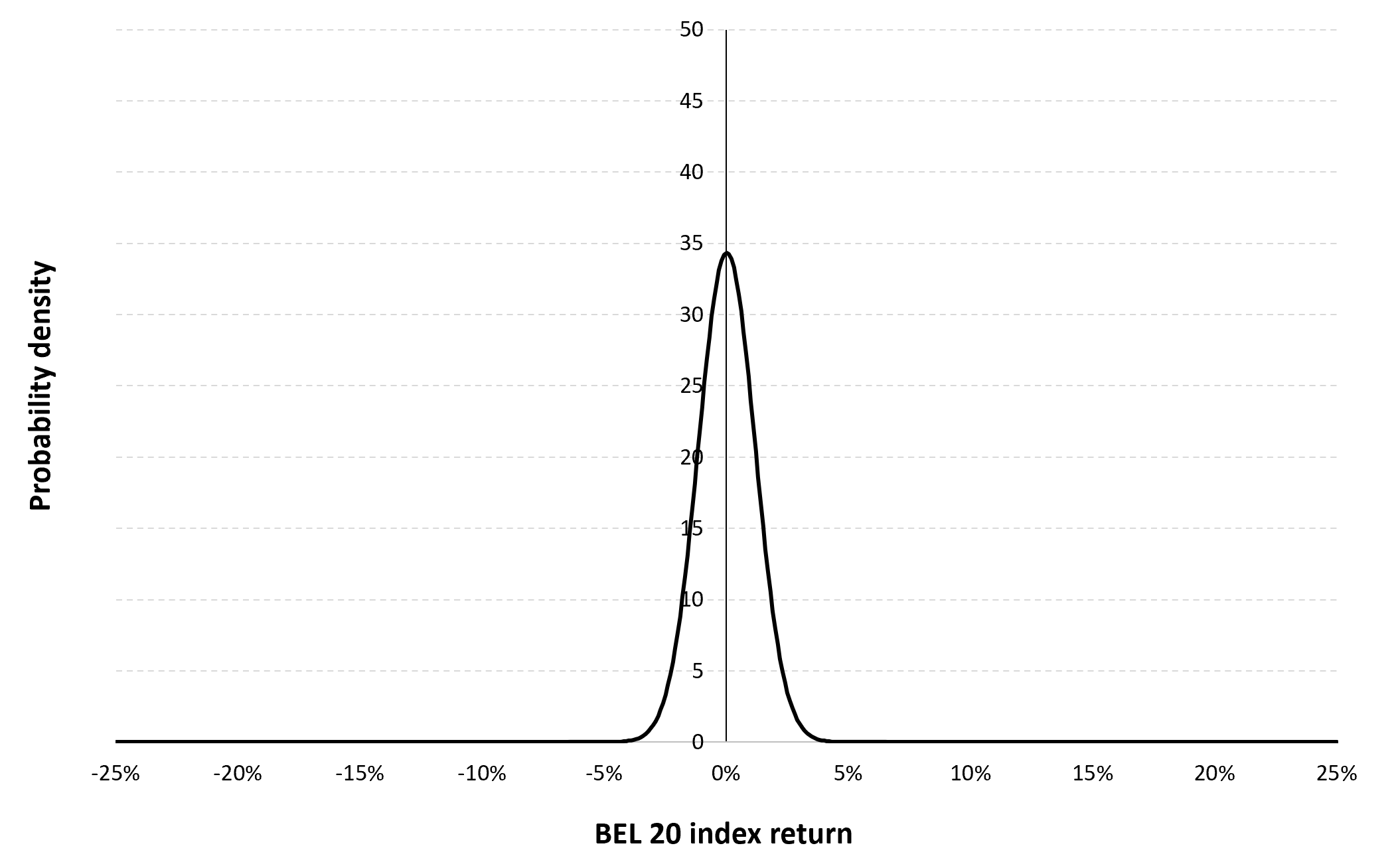

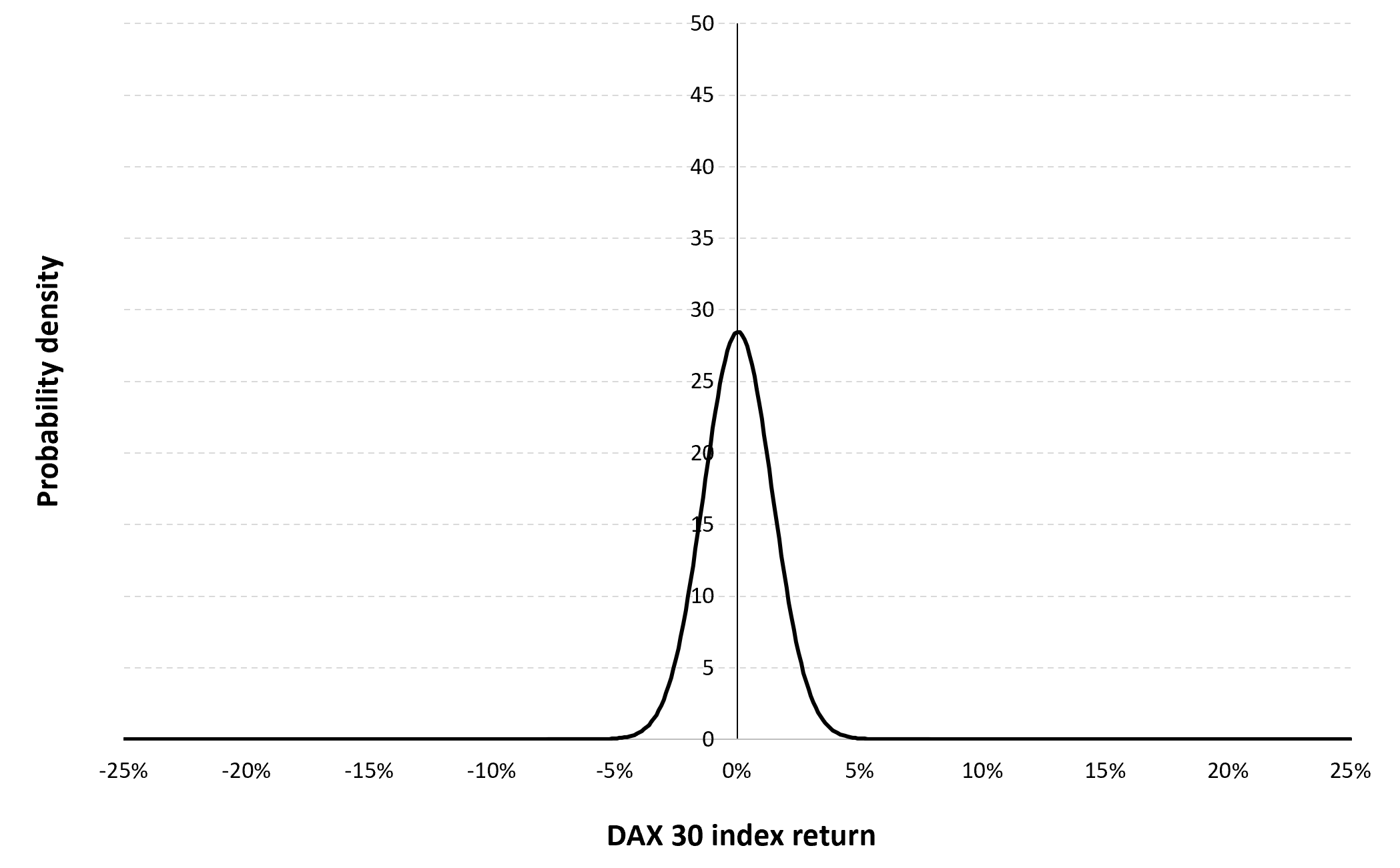

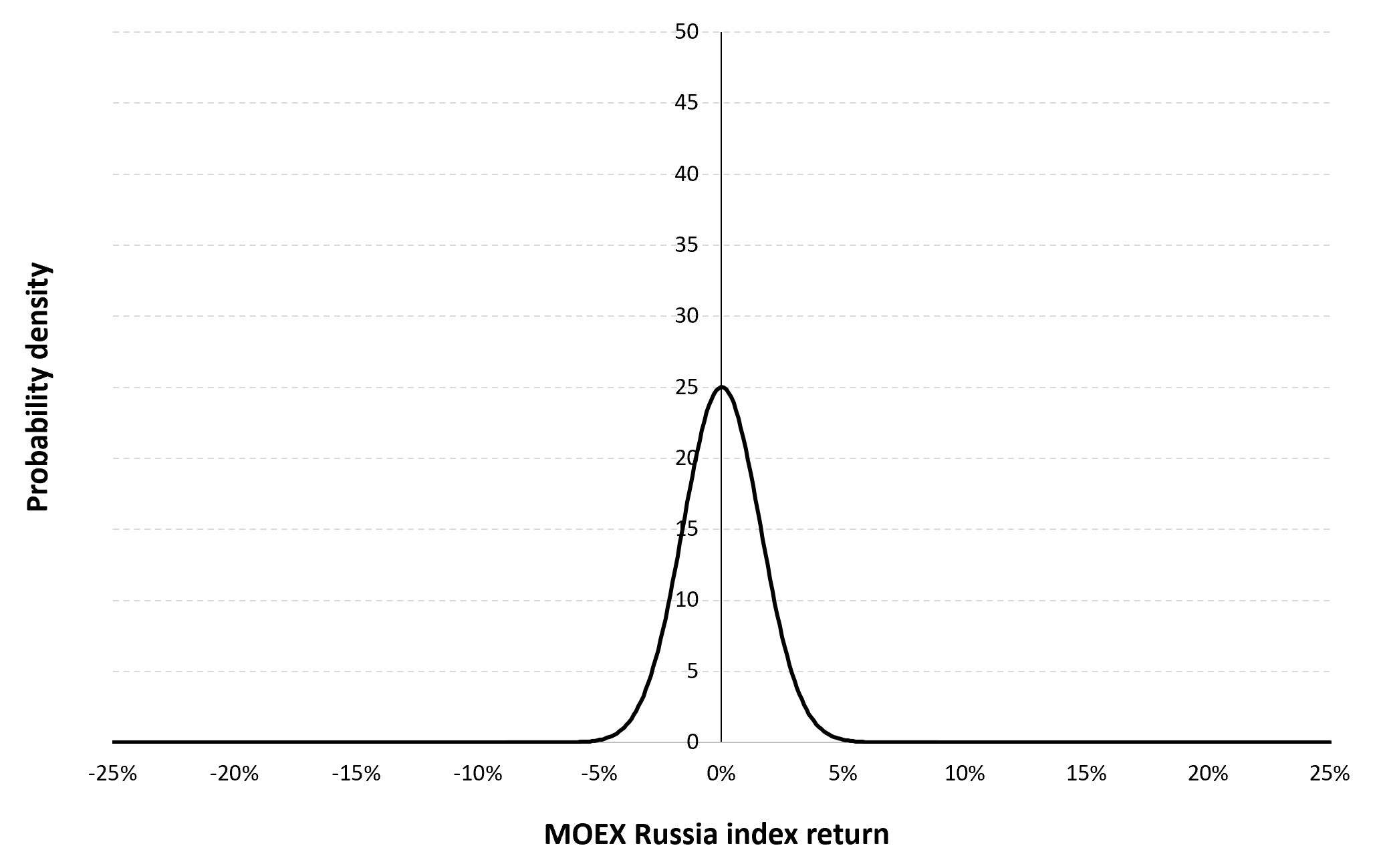

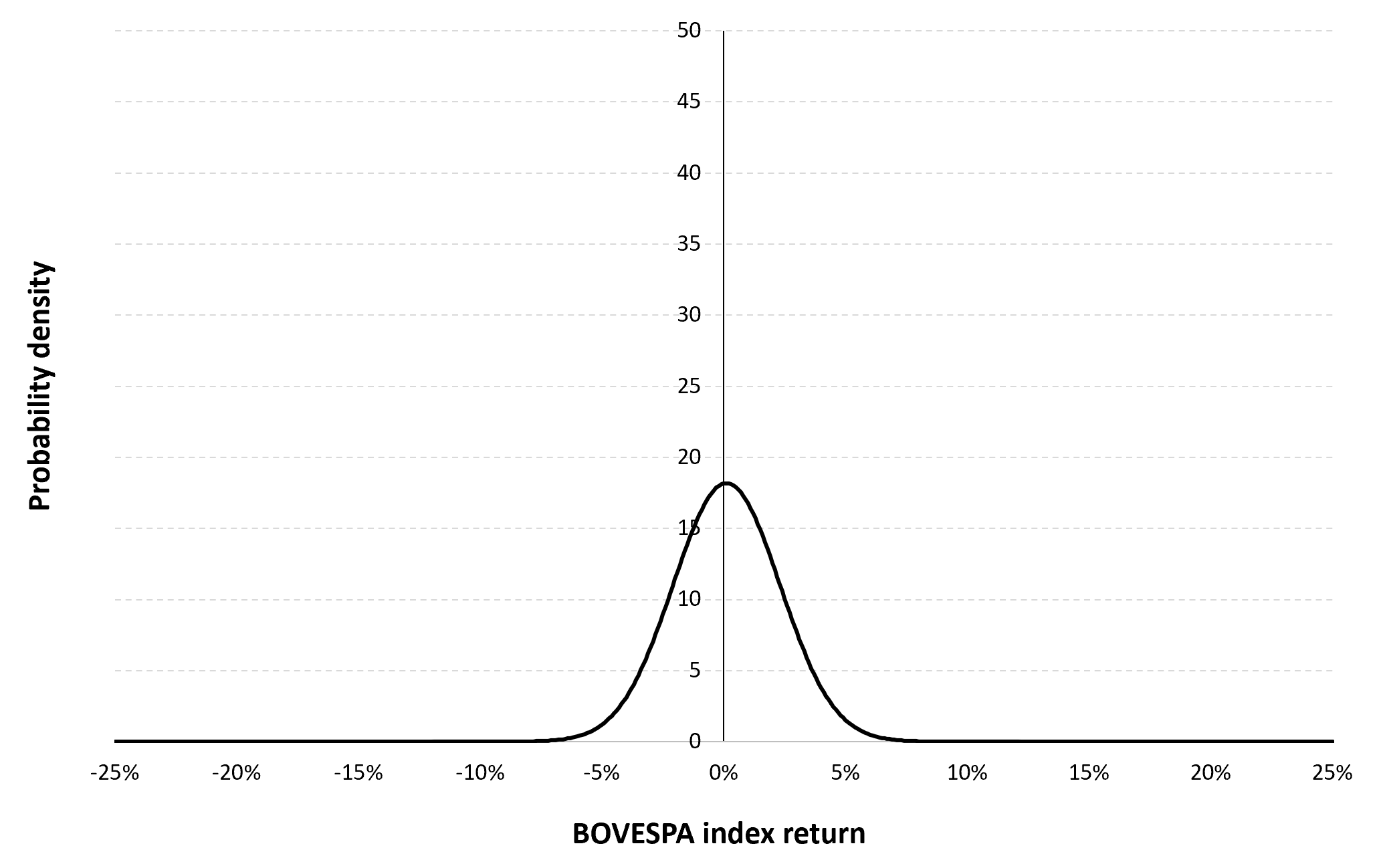

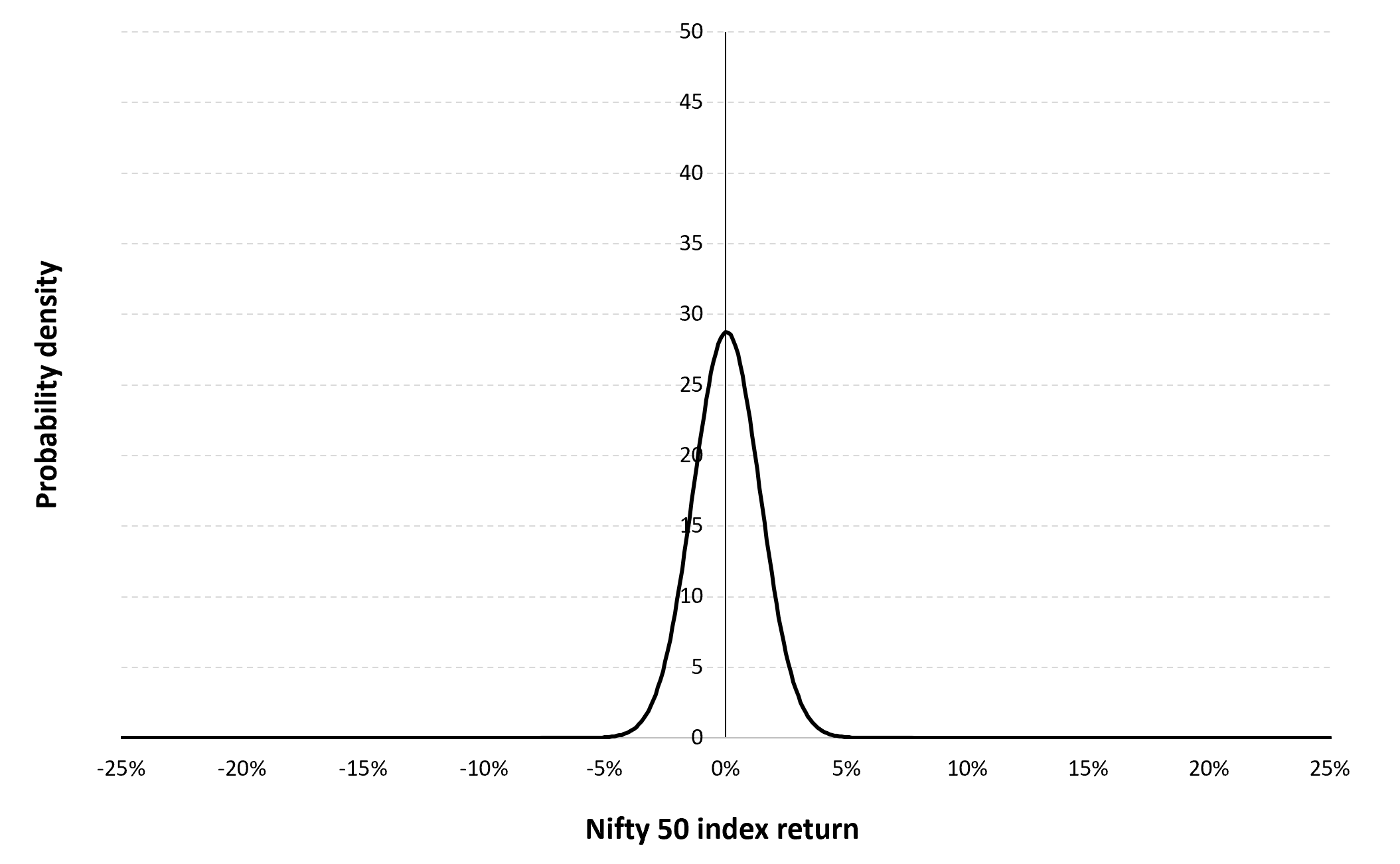

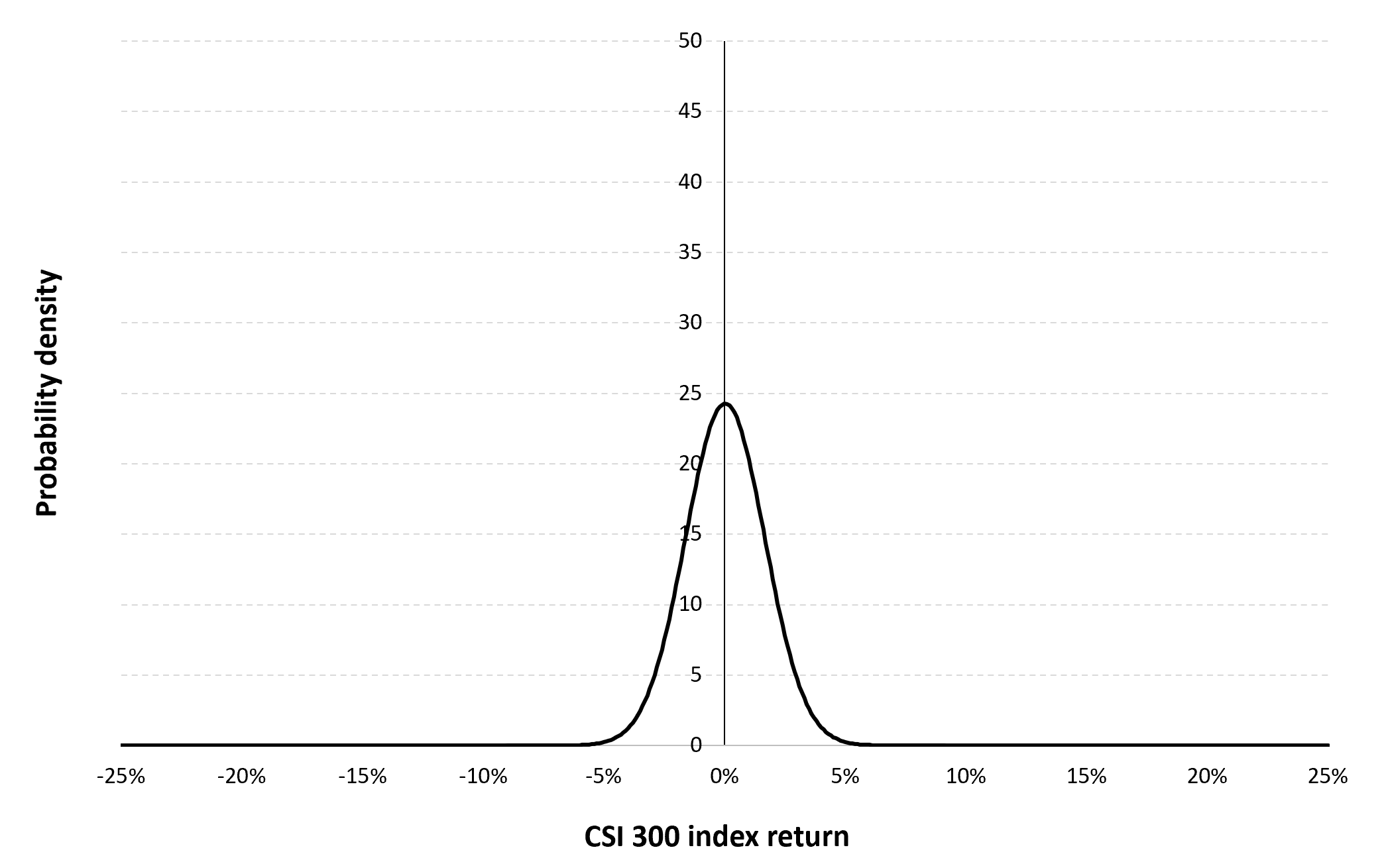

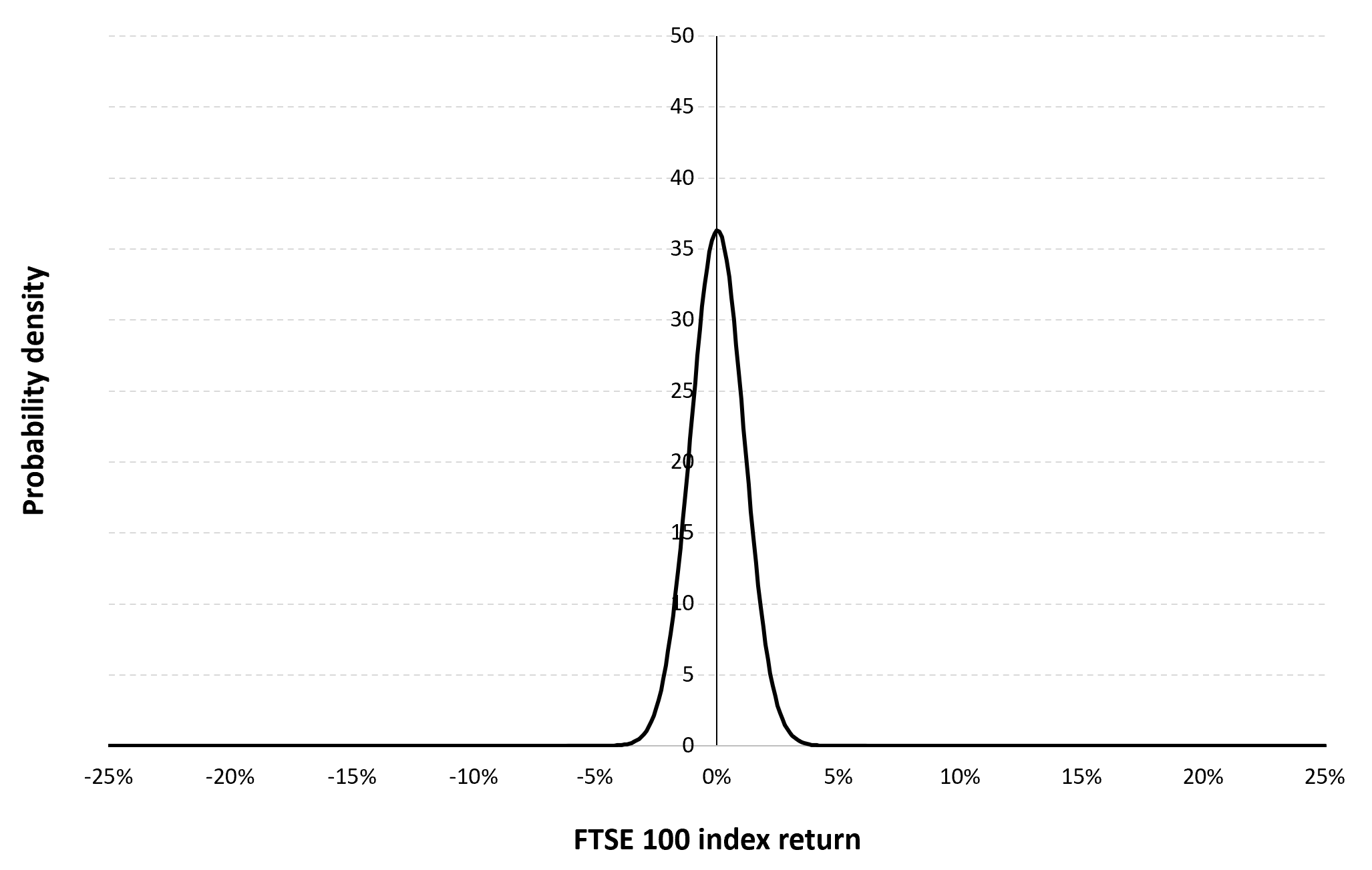

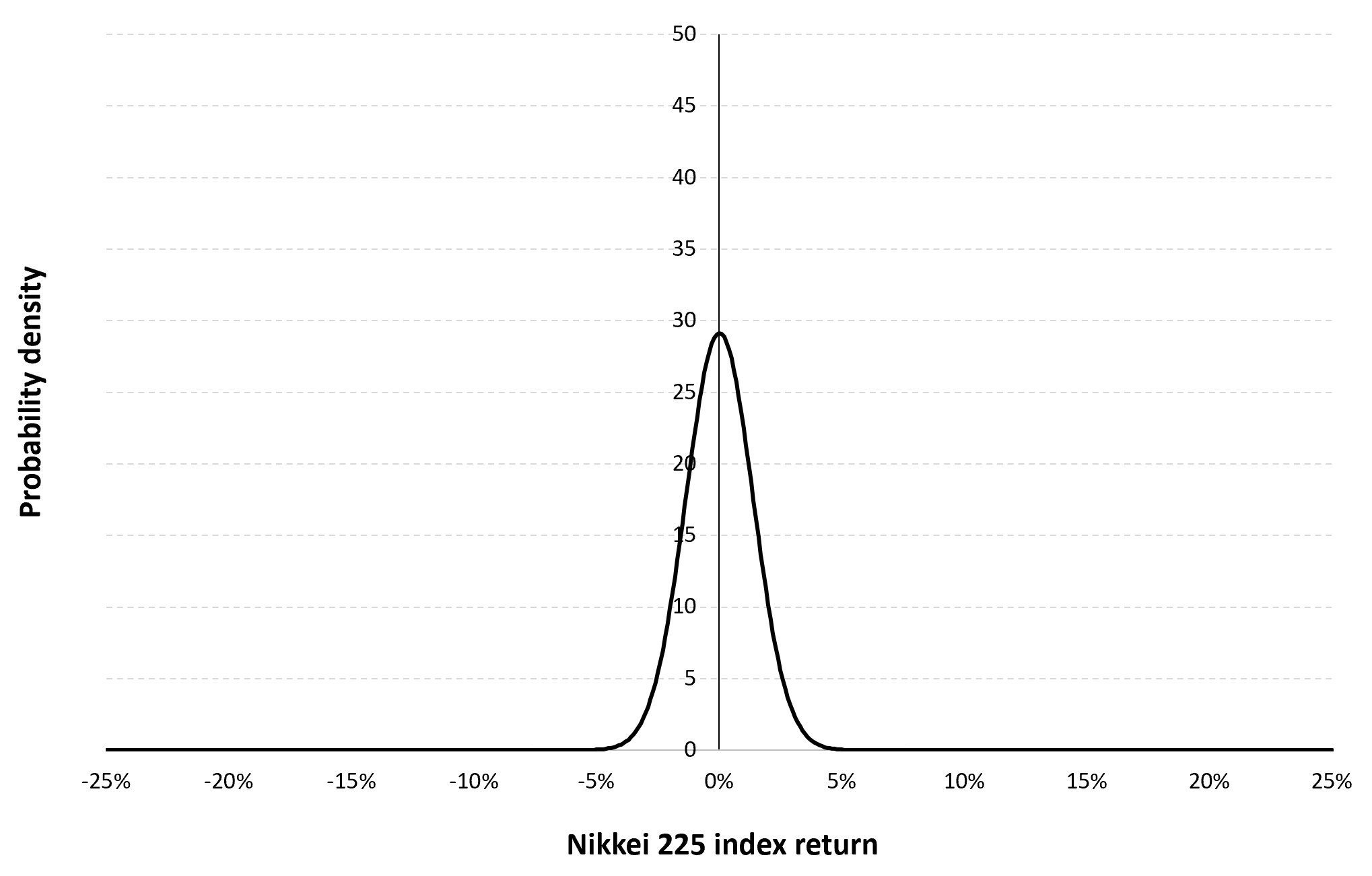

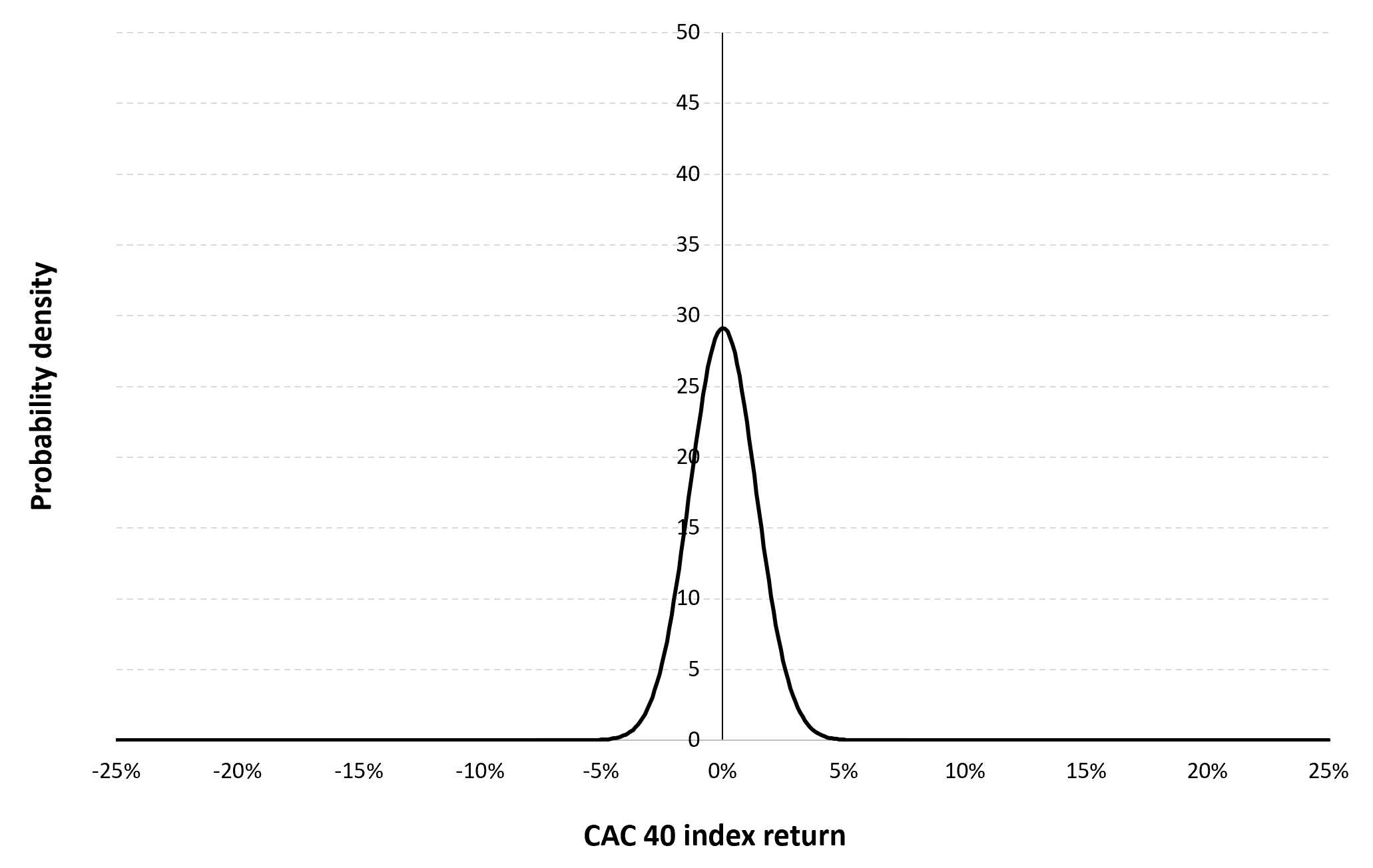

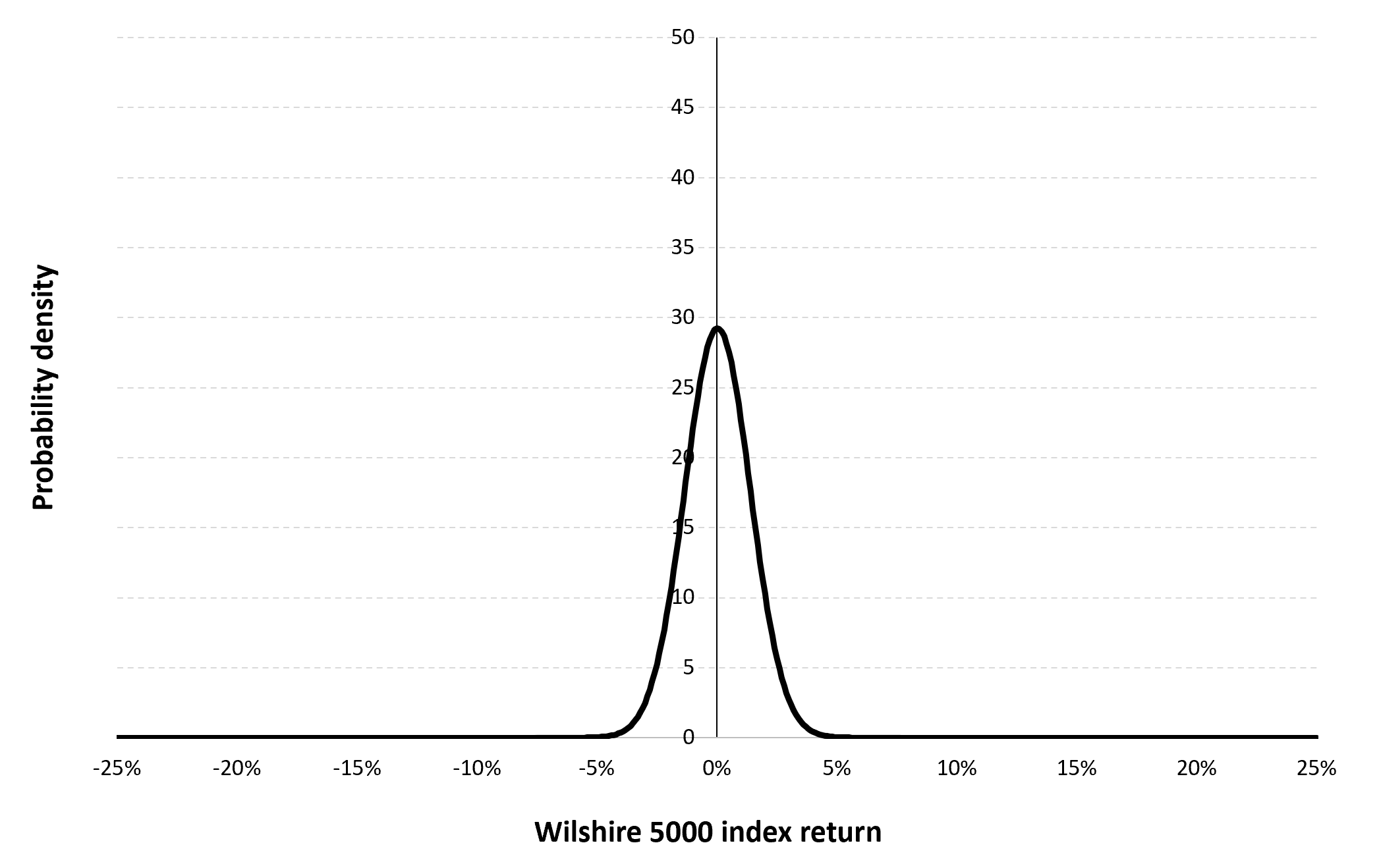

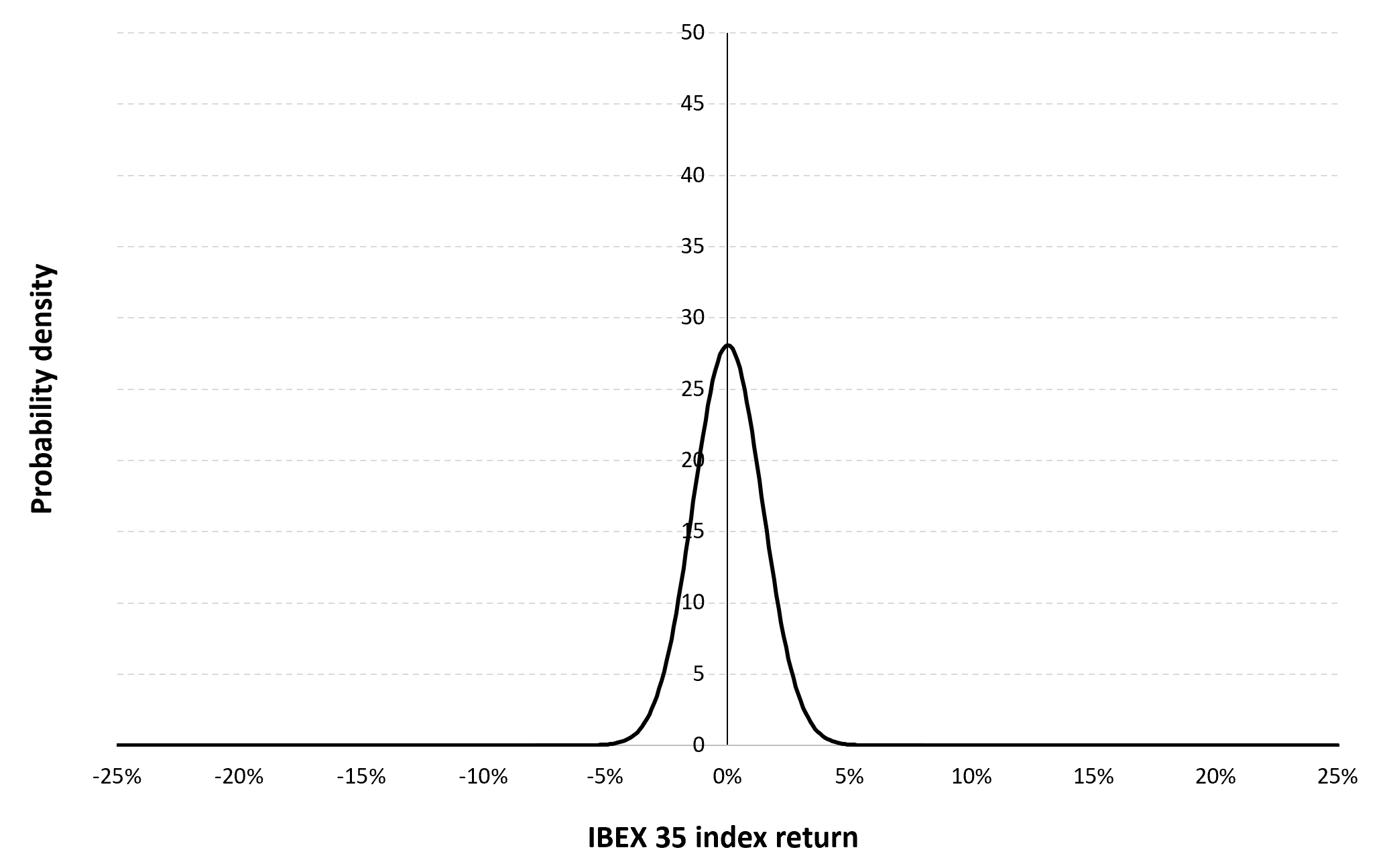

Gaussian distribution

The Gaussian distribution (also called the normal distribution) is a parametric distribution with two parameters: the mean and the standard deviation of returns. We estimated these two parameters over the period from July 12, 1993 to December 30, 2022. The mean of daily returns is equal to 0.02% and the standard deviation of daily returns is equal to 1.37% (the annual figures shown in Table 4 above are 3.94% for the mean and 28.02% for the standard deviation).

Figure 4 below represents the Gaussian distribution of the IBEX 35 index daily returns with parameters estimated over the period from July 12, 1993 to December 30, 2022.

Figure 4. Gaussian distribution of the IBEX 35 index returns.

Source: computation by the author (data: Yahoo! Finance website).
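Because a Gaussian distribution is fully described by its mean and standard deviation, its density and quantiles follow directly from the two daily estimates quoted above. The sketch below uses Python's standard `statistics.NormalDist` with the rounded values 0.02% and 1.37%; comparing these Gaussian quantiles with the historical quantiles reported in Table 5 is one way to see how far actual returns depart from normality.

```python
from statistics import NormalDist

# Daily parameters quoted in the text (rounded): mean 0.02%, standard deviation 1.37%
gaussian = NormalDist(mu=0.0002, sigma=0.0137)

density_at_zero = gaussian.pdf(0.0)  # height of the bell curve at a 0% return
q05 = gaussian.inv_cdf(0.05)         # Gaussian 5% quantile (left tail)
q95 = gaussian.inv_cdf(0.95)         # Gaussian 95% quantile (right tail)
p_crash = gaussian.cdf(-0.05)        # probability of a daily return below -5%

print(f"5% quantile: {q05:.2%}, 95% quantile: {q95:.2%}")
print(f"P(R < -5%): {p_crash:.4%}")
```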

Risk measures of the IBEX 35 index returns

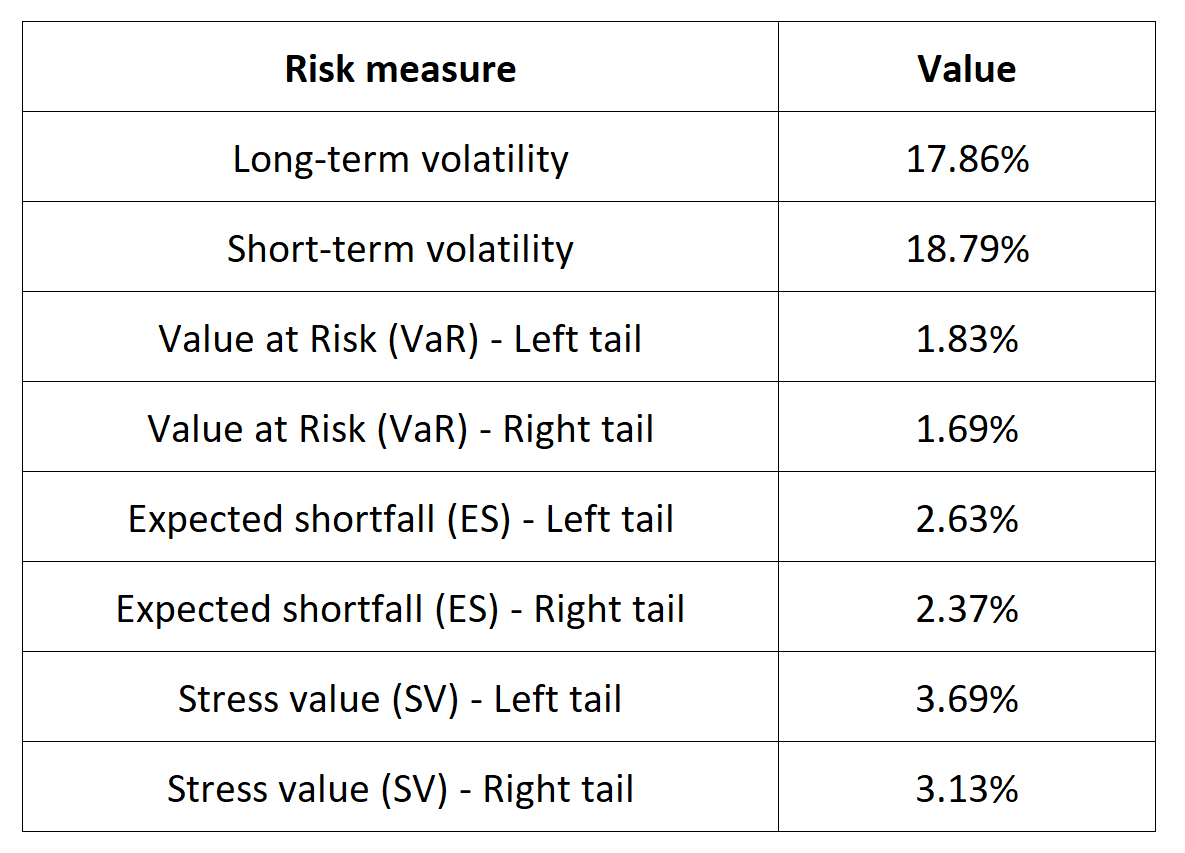

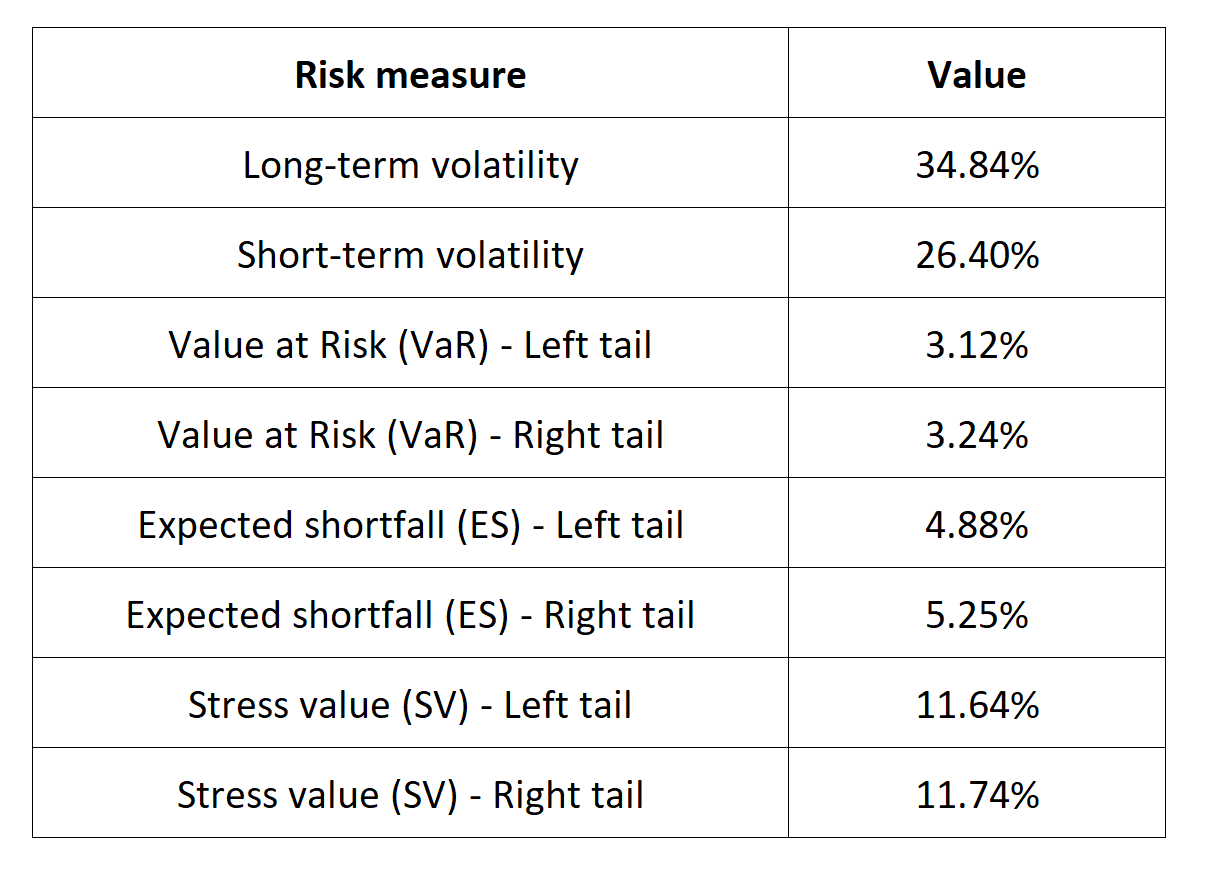

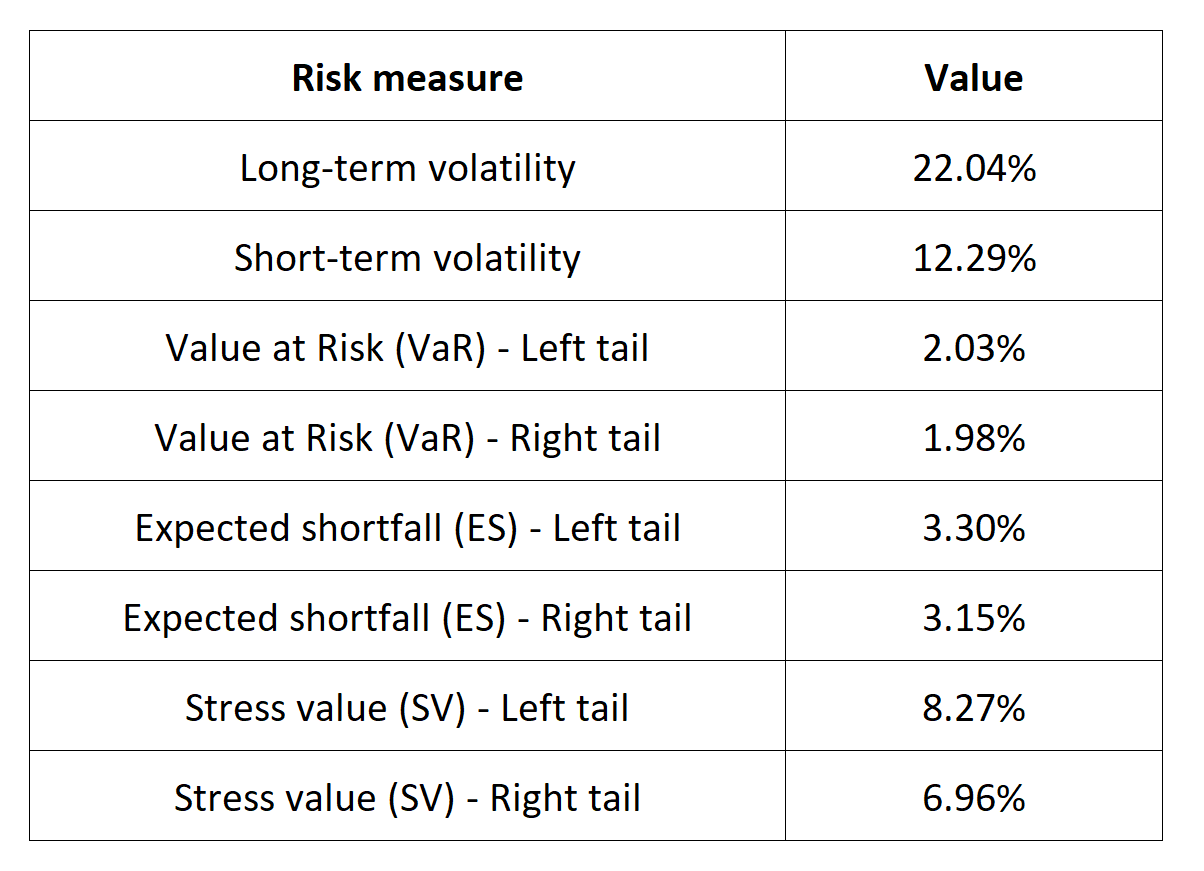

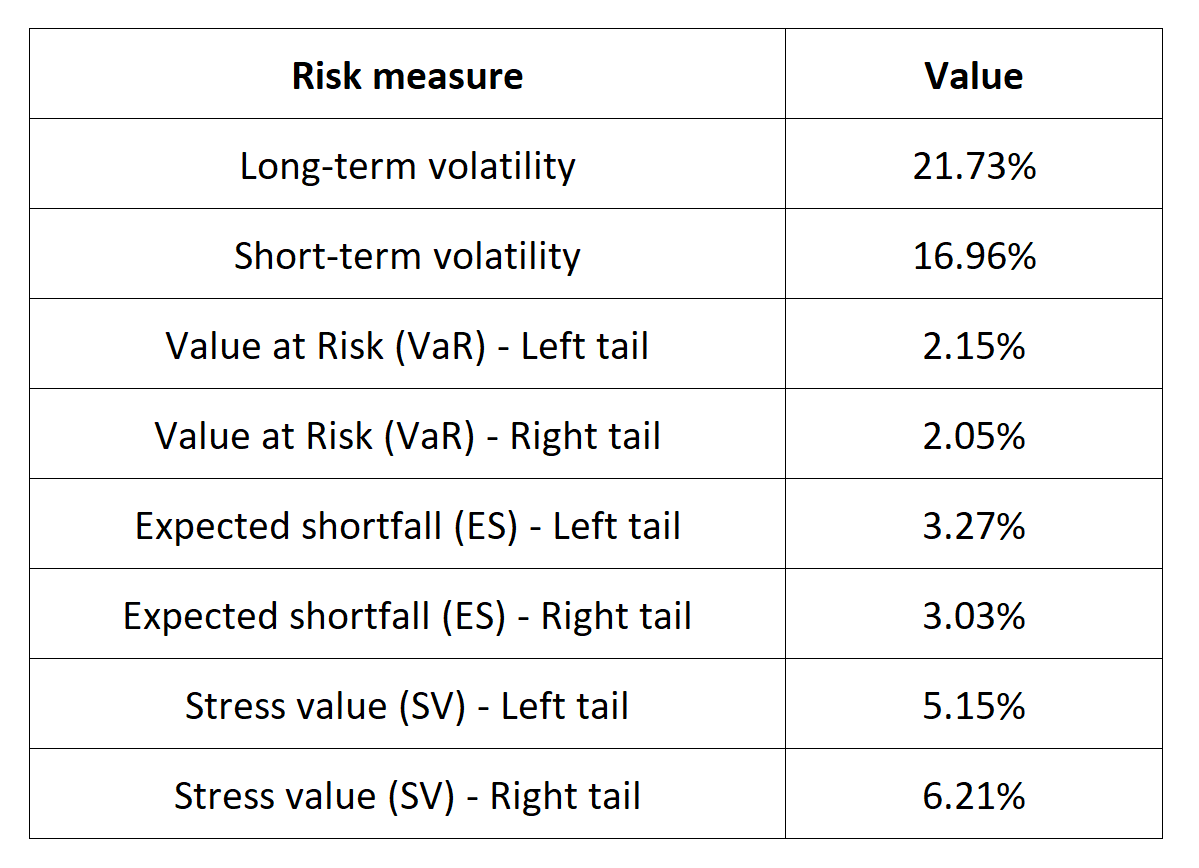

The R program that you can download above also allows you to compute risk measures about the returns of the IBEX 35 index.

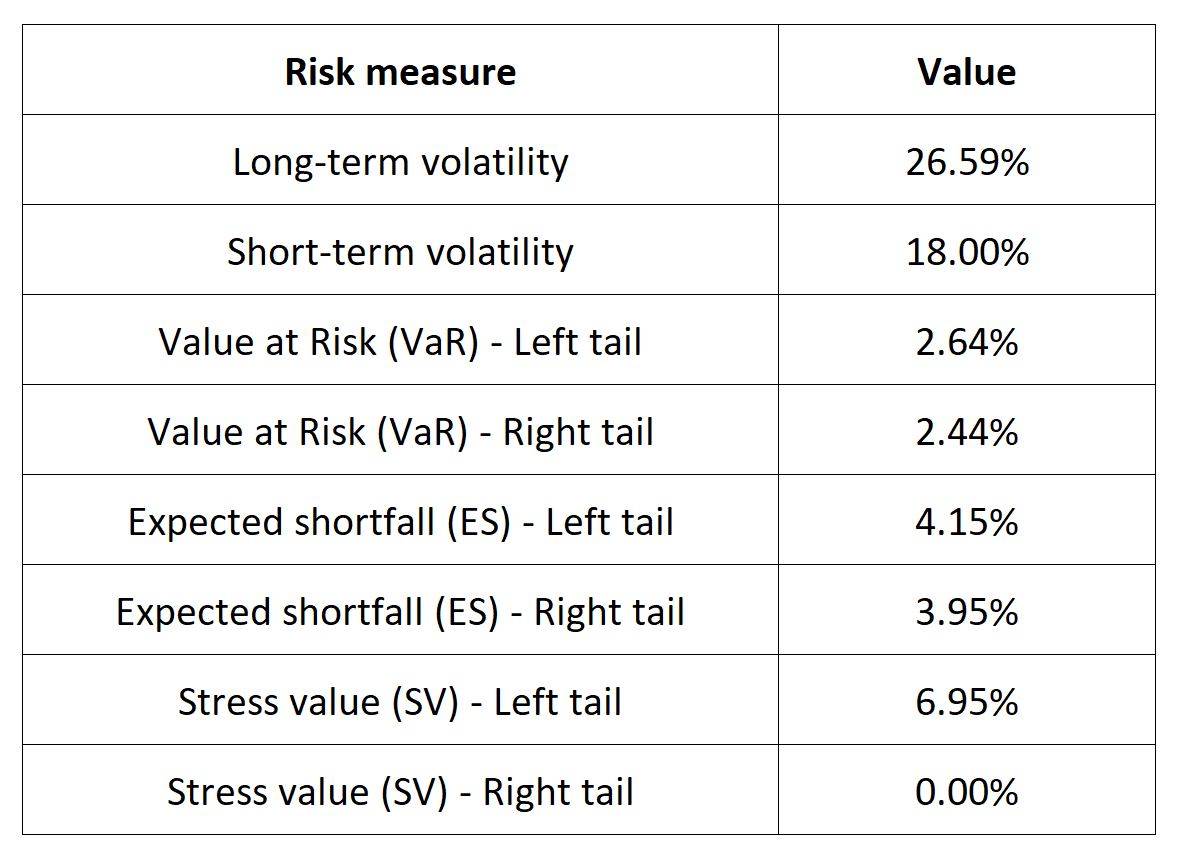

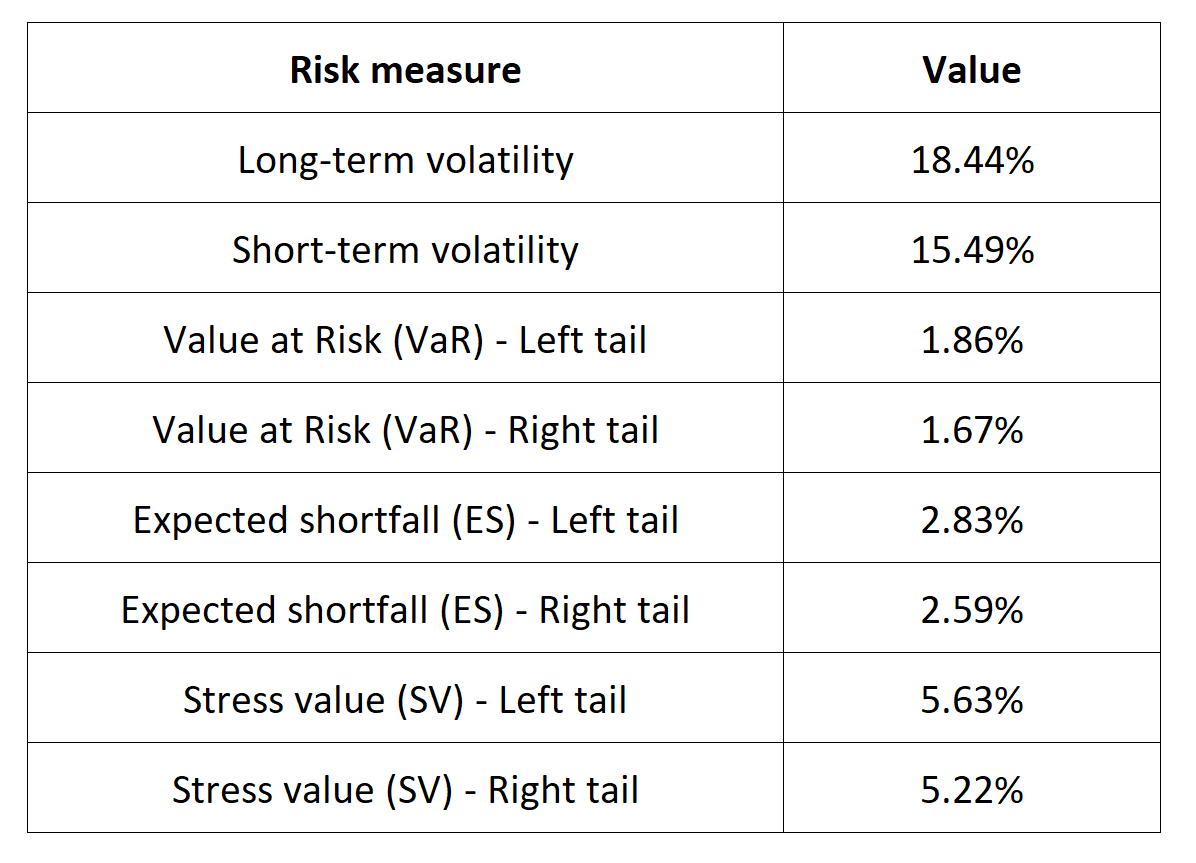

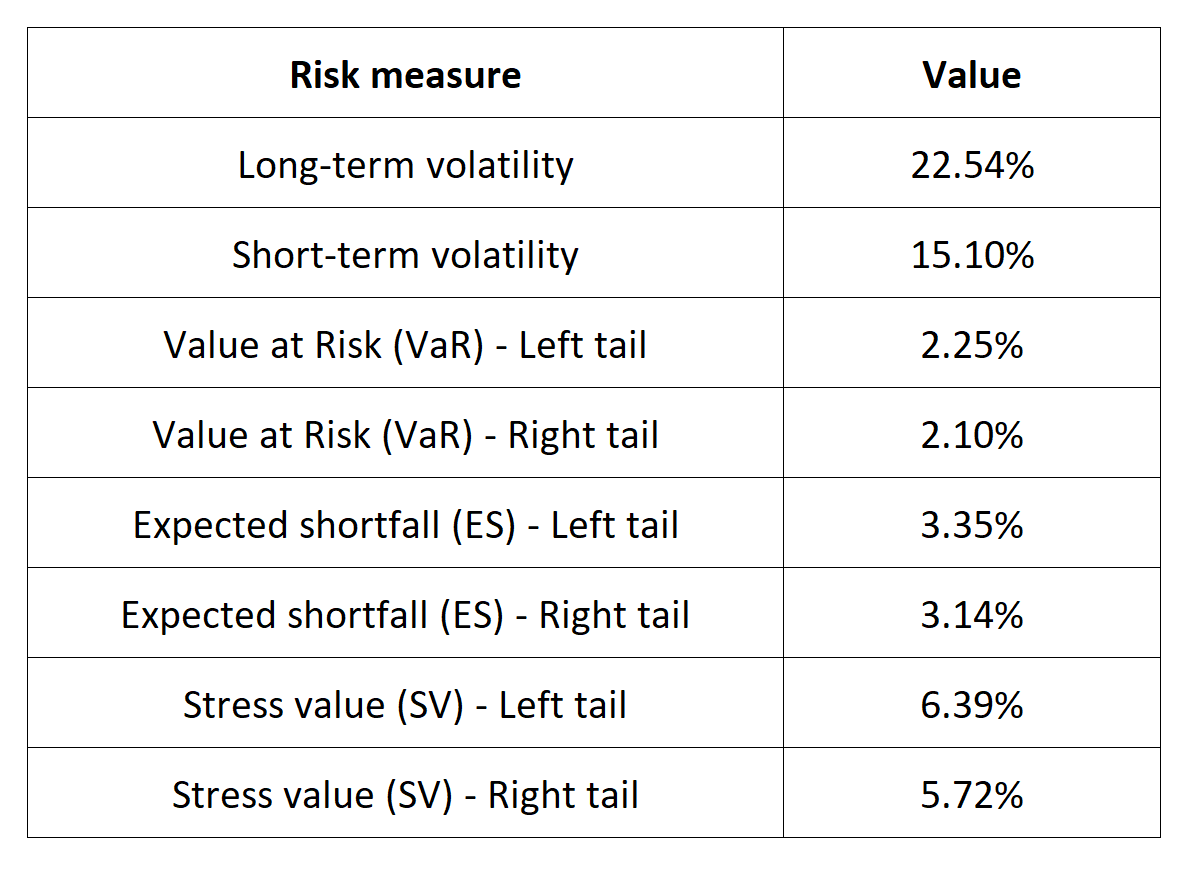

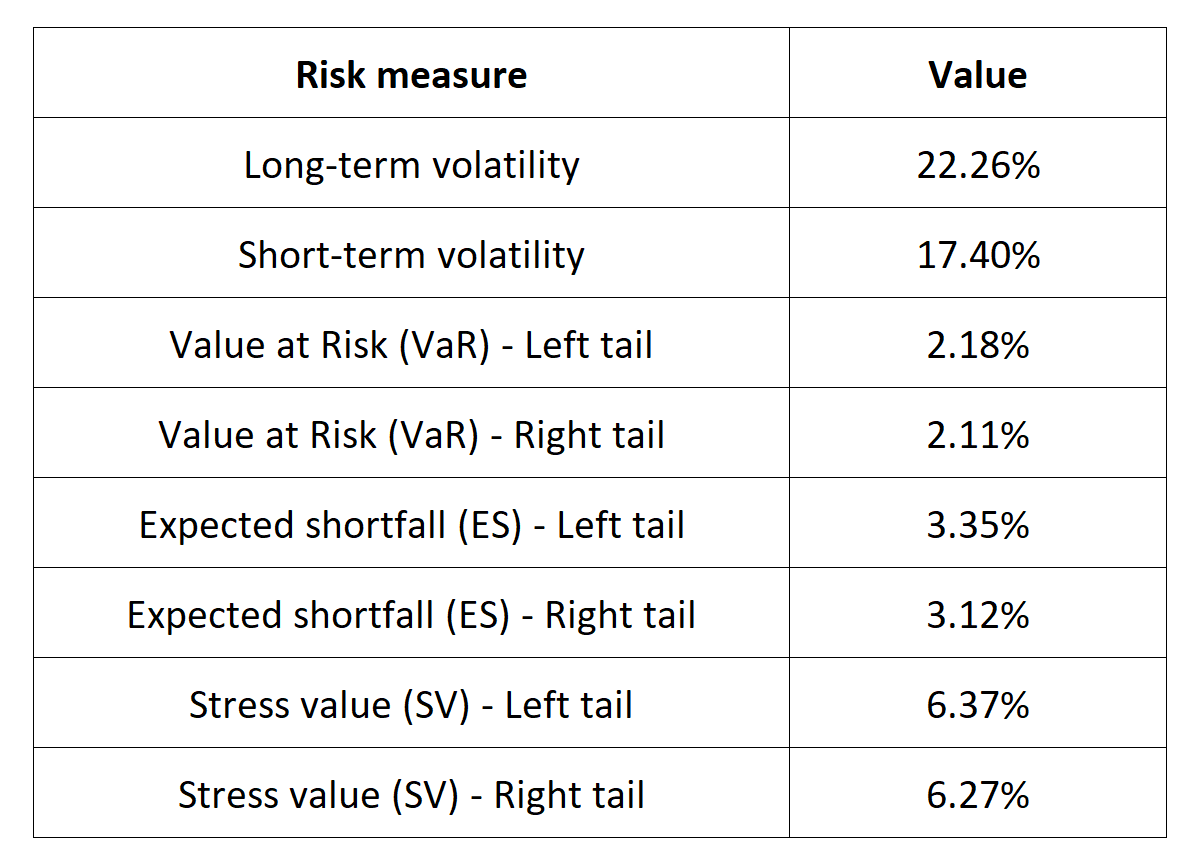

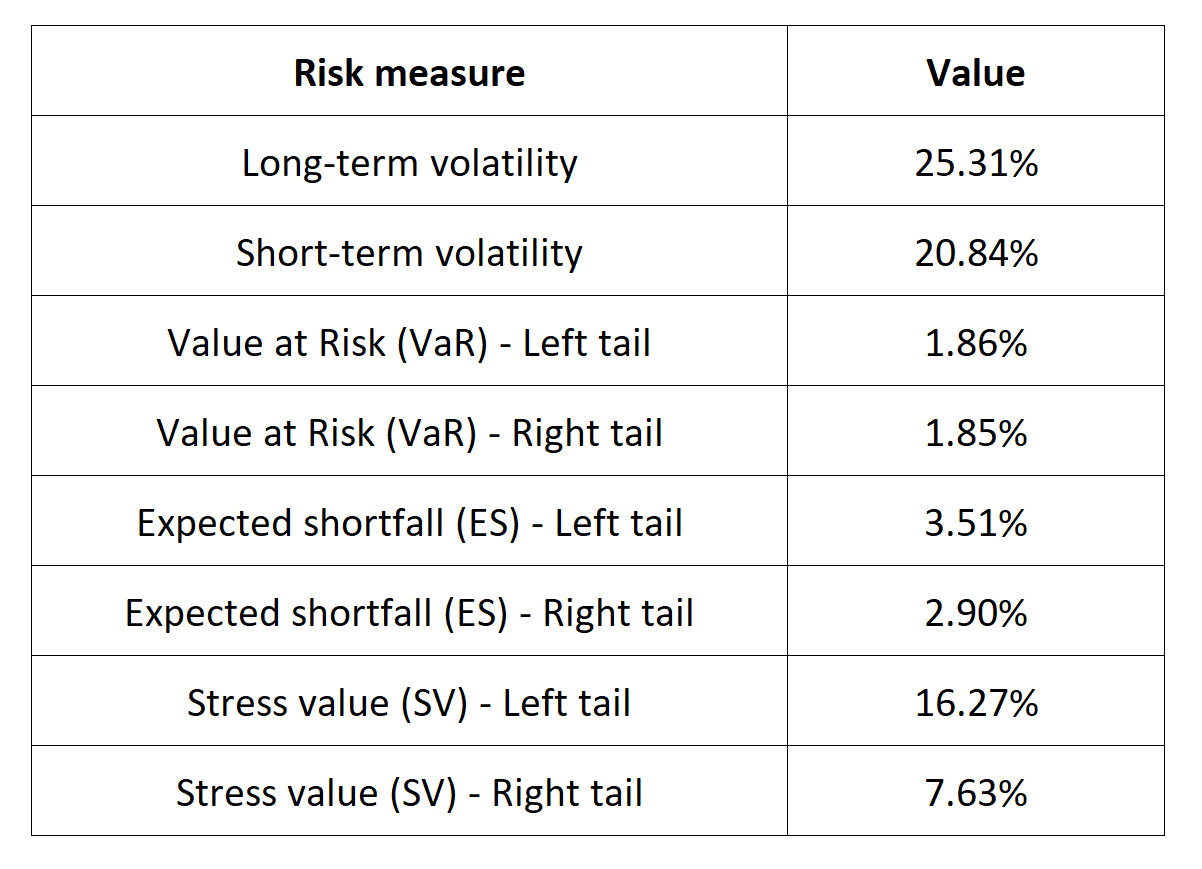

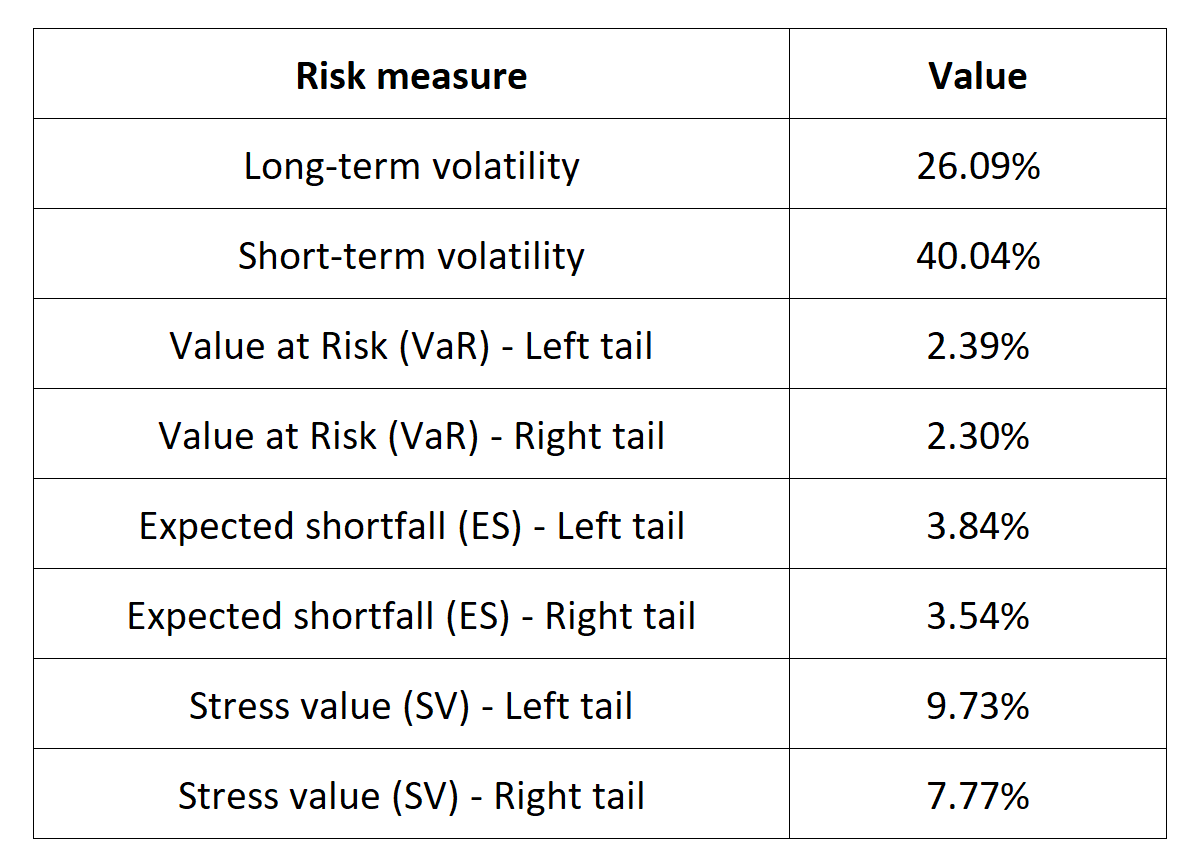

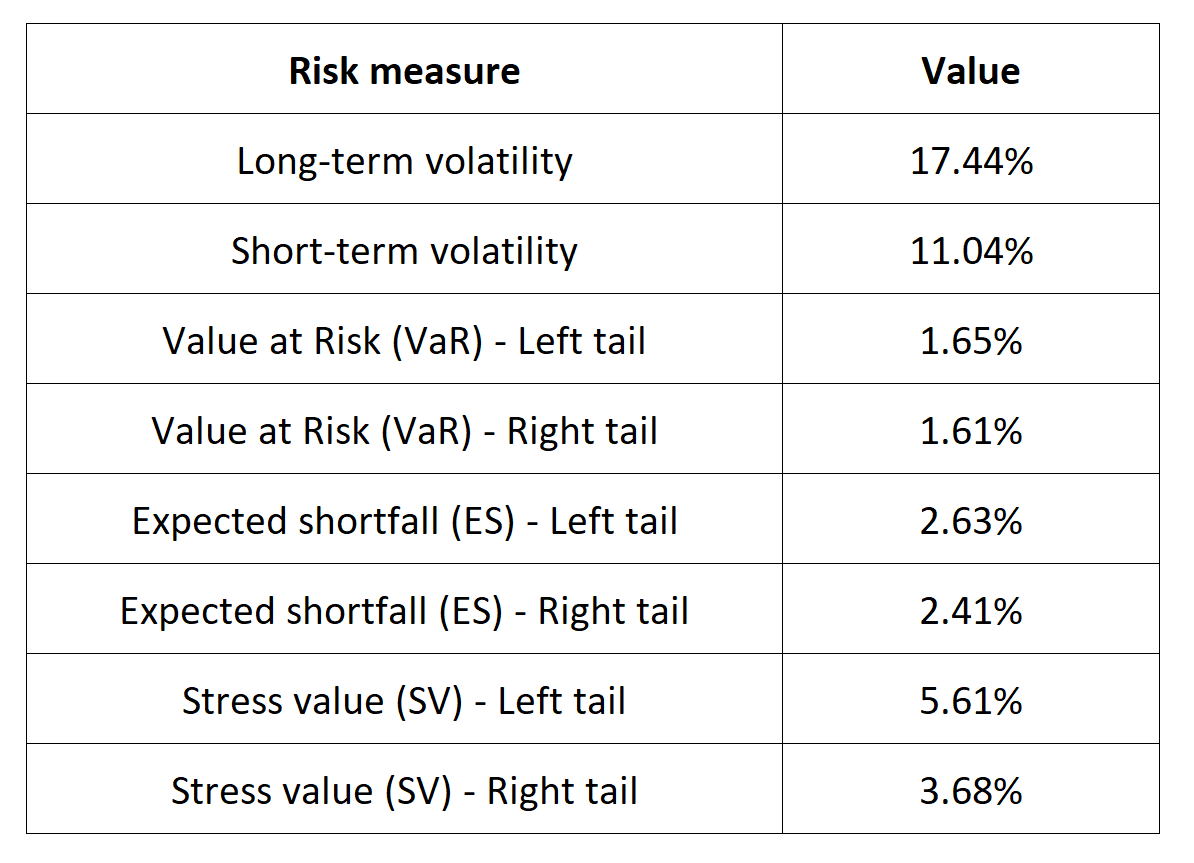

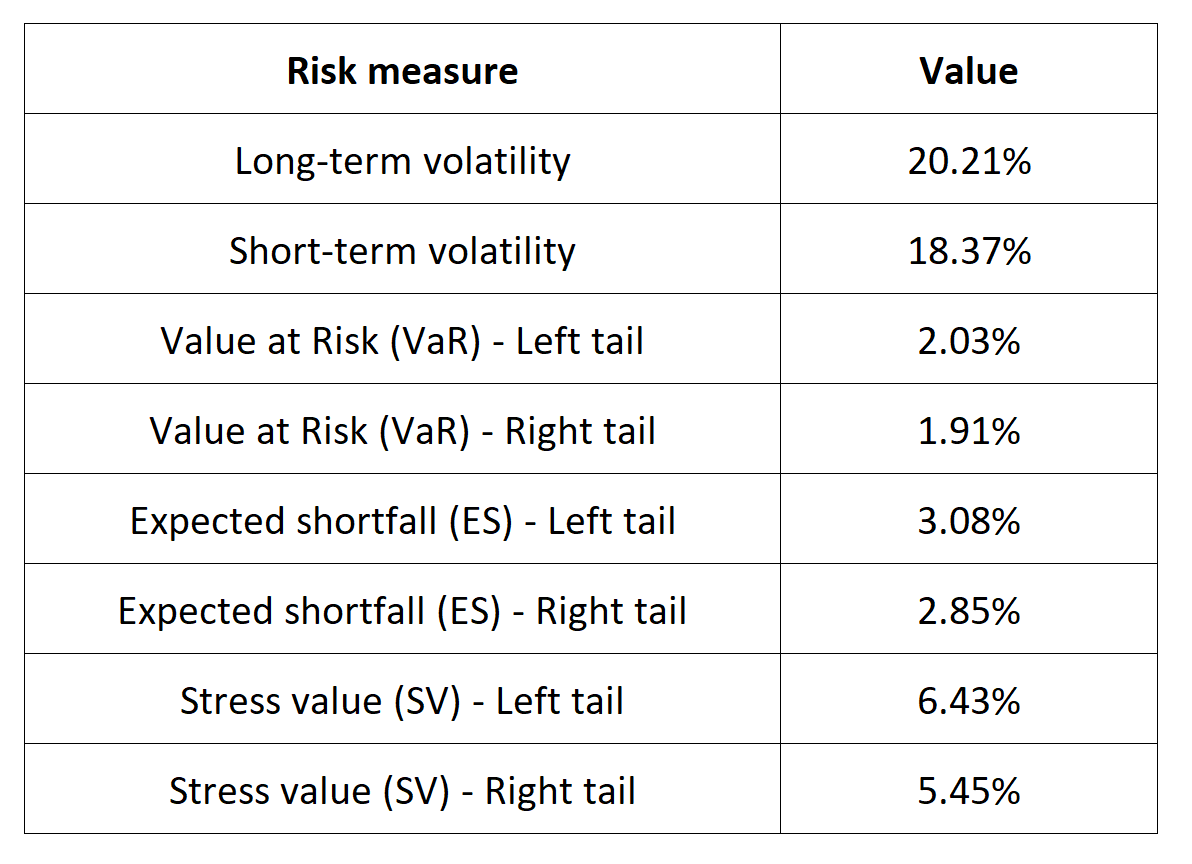

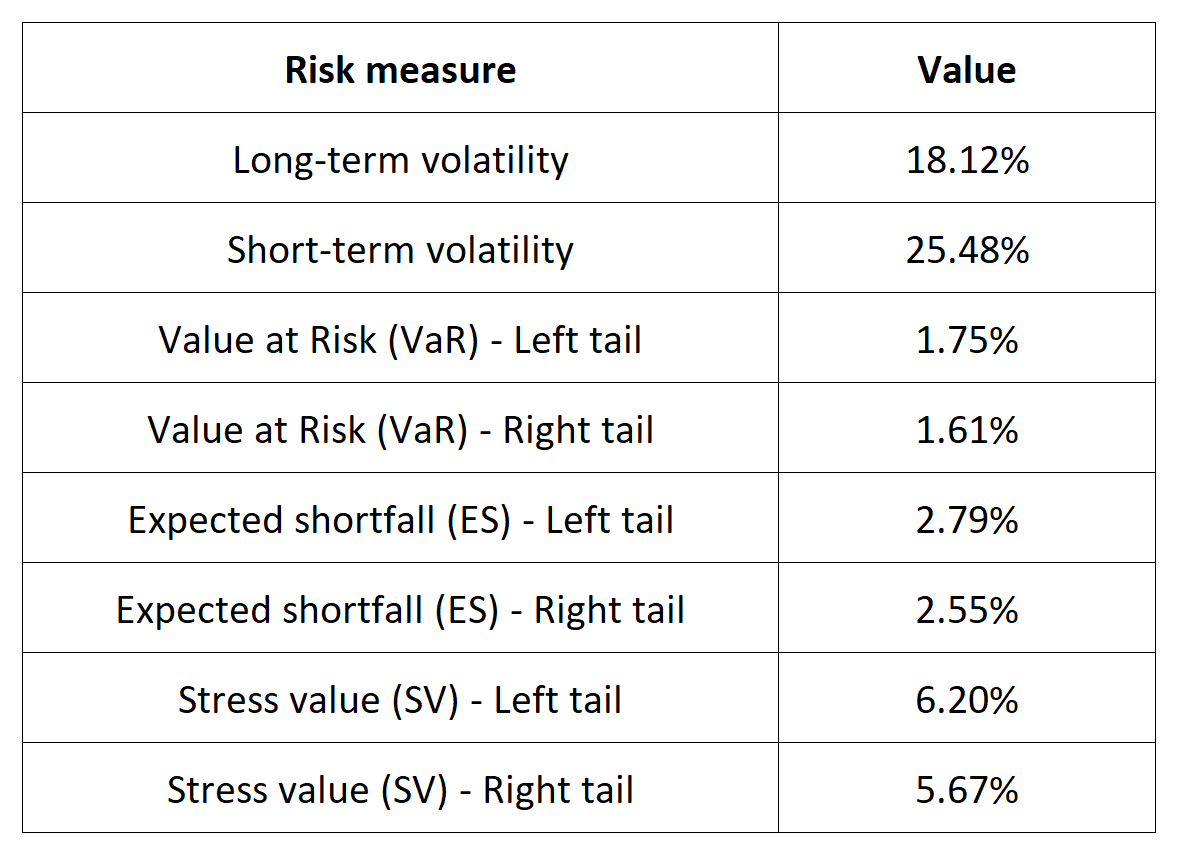

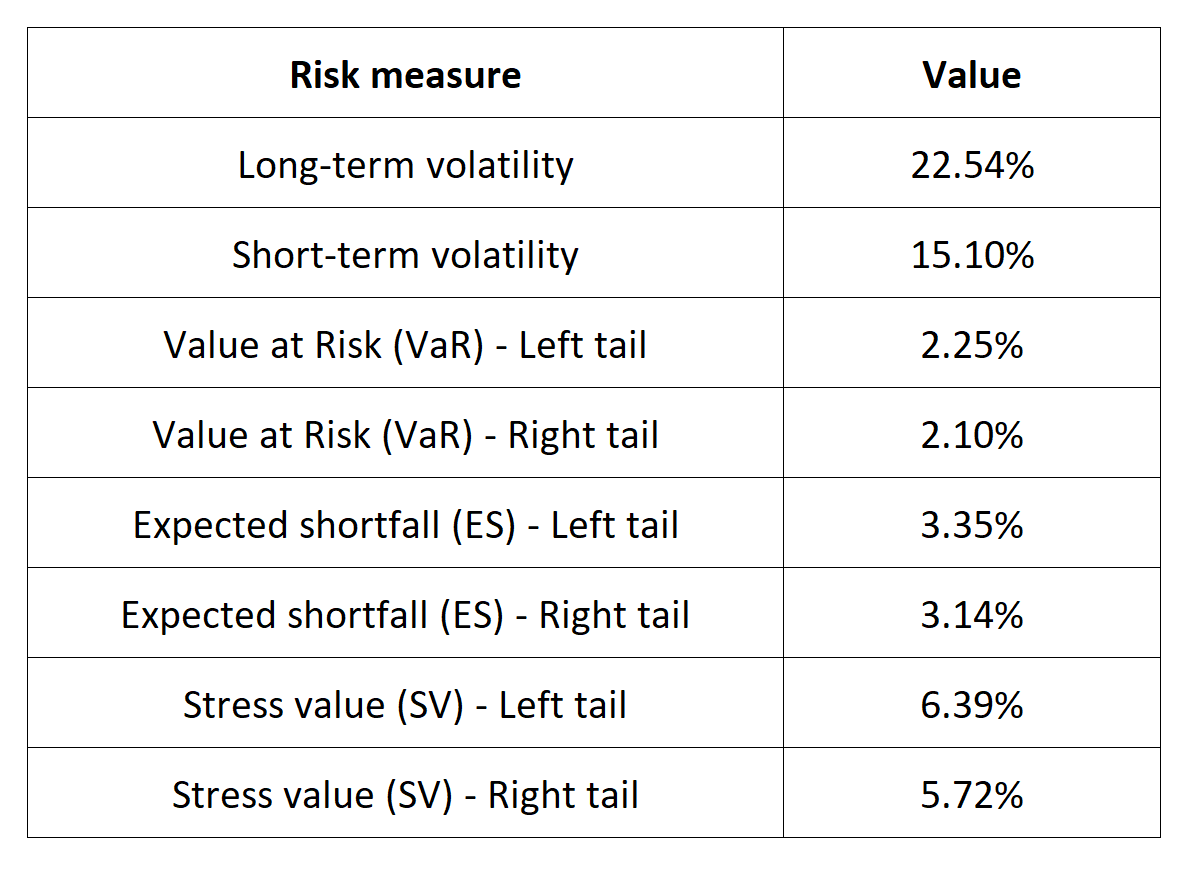

Table 5 below presents the following risk measures estimated for the IBEX 35 index:

- The long-term volatility (the unconditional standard deviation estimated over the entire period)

- The short-term volatility (the standard deviation estimated over the last three months)

- The Value at Risk (VaR) for the left tail (the 5% quantile of the historical distribution)

- The Value at Risk (VaR) for the right tail (the 95% quantile of the historical distribution)

- The Expected Shortfall (ES) for the left tail (the average of the returns below the 5% quantile of the historical distribution)

- The Expected Shortfall (ES) for the right tail (the average of the returns above the 95% quantile of the historical distribution)

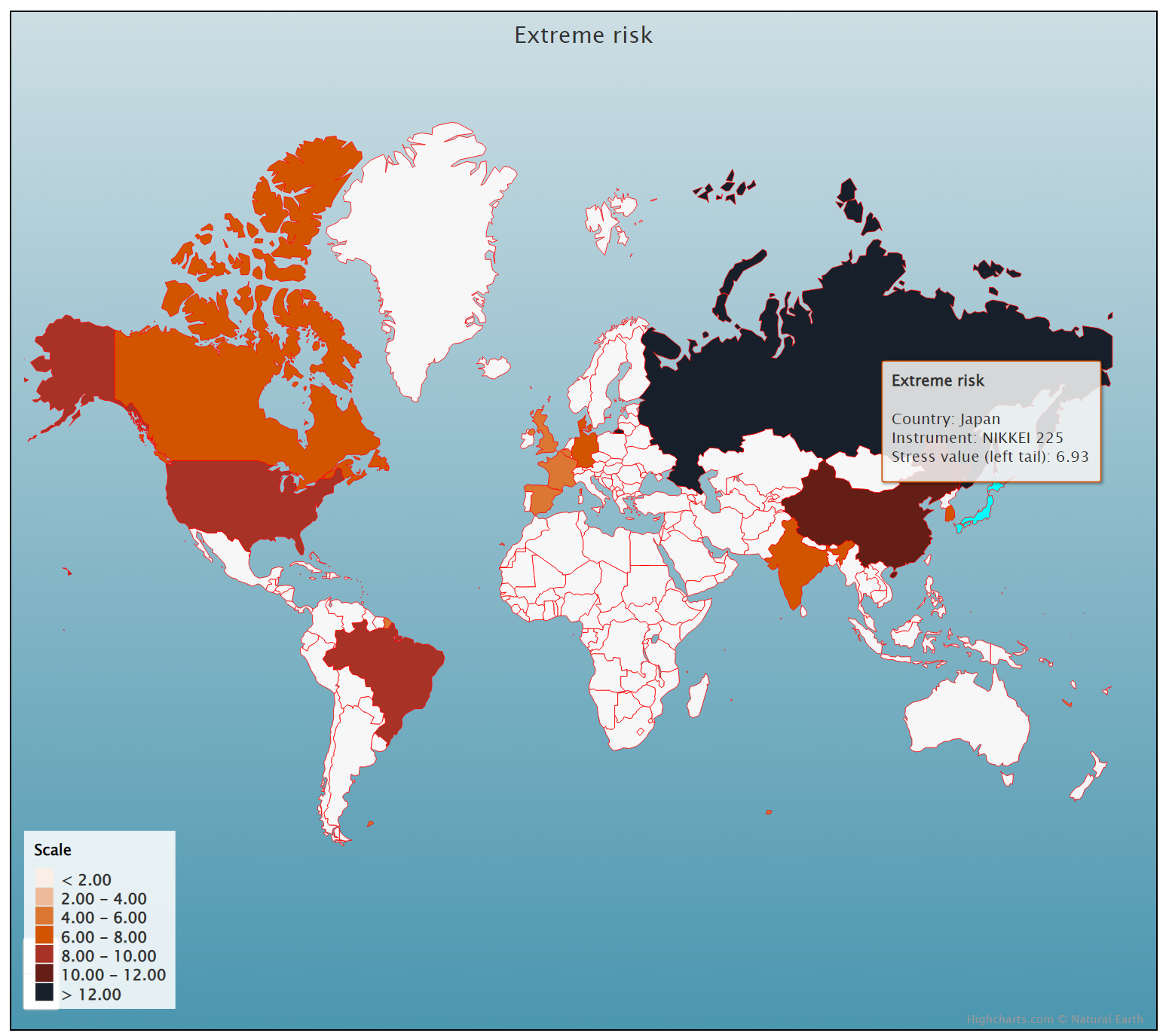

- The Stress Value (SV) for the left tail (the 1% quantile of the tail distribution estimated with a Generalized Pareto distribution)

- The Stress Value (SV) for the right tail (the 99% quantile of the tail distribution estimated with a Generalized Pareto distribution)

Table 5. Risk measures for the IBEX 35 index.

Source: computation by the author (data: Yahoo! Finance website).

The volatility is a global measure of risk as it considers all the returns. The Value at Risk (VaR), Expected Shortfall (ES) and Stress Value (SV) are local measures of risk as they focus on the tails of the distribution. The study of the left tail is relevant for an investor holding a long position in the IBEX 35 index, while the study of the right tail is relevant for an investor holding a short position in the IBEX 35 index.
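The historical measures of Table 5 can be sketched as follows. This is not the author's R program: it assumes daily returns, a three-month window of 63 trading days (about 21 per month) for the short-term volatility, linearly interpolated empirical quantiles (`method="inclusive"` in `statistics.quantiles`), and no annualization. The Stress Values require fitting a Generalized Pareto distribution to the tails and are not covered here.

```python
import statistics

THREE_MONTHS = 63  # assumption: about 21 trading days per month


def risk_measures(returns: list[float], window: int = THREE_MONTHS) -> dict[str, float]:
    """Volatility, historical VaR and Expected Shortfall at the 5% / 95% levels."""
    if len(returns) < max(window, 2):
        raise ValueError("not enough returns for the short-term window")
    cuts = statistics.quantiles(returns, n=100, method="inclusive")  # 1%, 2%, ..., 99%
    var_left, var_right = cuts[4], cuts[94]  # 5% and 95% quantiles
    left_tail = [r for r in returns if r <= var_left]    # losses for a long position
    right_tail = [r for r in returns if r >= var_right]  # losses for a short position
    return {
        "long_term_vol": statistics.stdev(returns),
        "short_term_vol": statistics.stdev(returns[-window:]),
        "var_left": var_left,
        "var_right": var_right,
        "es_left": statistics.fmean(left_tail),
        "es_right": statistics.fmean(right_tail),
    }
```

Applied to the full series of daily log returns, the left-tail figures describe the risk of a long position and the right-tail figures the risk of a short position, as explained above.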

Why should I be interested in this post?

Students can gain useful knowledge about the Spanish stock market and its major sectors by looking at the IBEX 35 index. Its constituents span a wide range of industries, including consumer goods, energy, finance, and telecommunications, which makes the index a useful benchmark for the Spanish economy. By examining the performance of these companies, students can learn how industries function, how competition affects the market, and which factors contribute to business success in Spain.

Furthermore, investors can use financial products linked to the IBEX 35 index, such as exchange-traded funds (ETFs), futures, and options contracts, to access the Spanish market and potentially generate returns. By understanding the dynamics of the IBEX 35 index and the Spanish economy, students can develop valuable skills for careers in investment banking, portfolio management, and corporate finance.

Related posts on the SimTrade blog

About financial indexes

▶ Nithisha CHALLA Financial indexes

▶ Nithisha CHALLA Calculation of financial indexes

▶ Nithisha CHALLA The business of financial indexes

▶ Nithisha CHALLA Float

Other financial indexes

▶ Nithisha CHALLA The S&P 500 index

▶ Nithisha CHALLA The FTSE 100 index

▶ Nithisha CHALLA The DAX 30 index

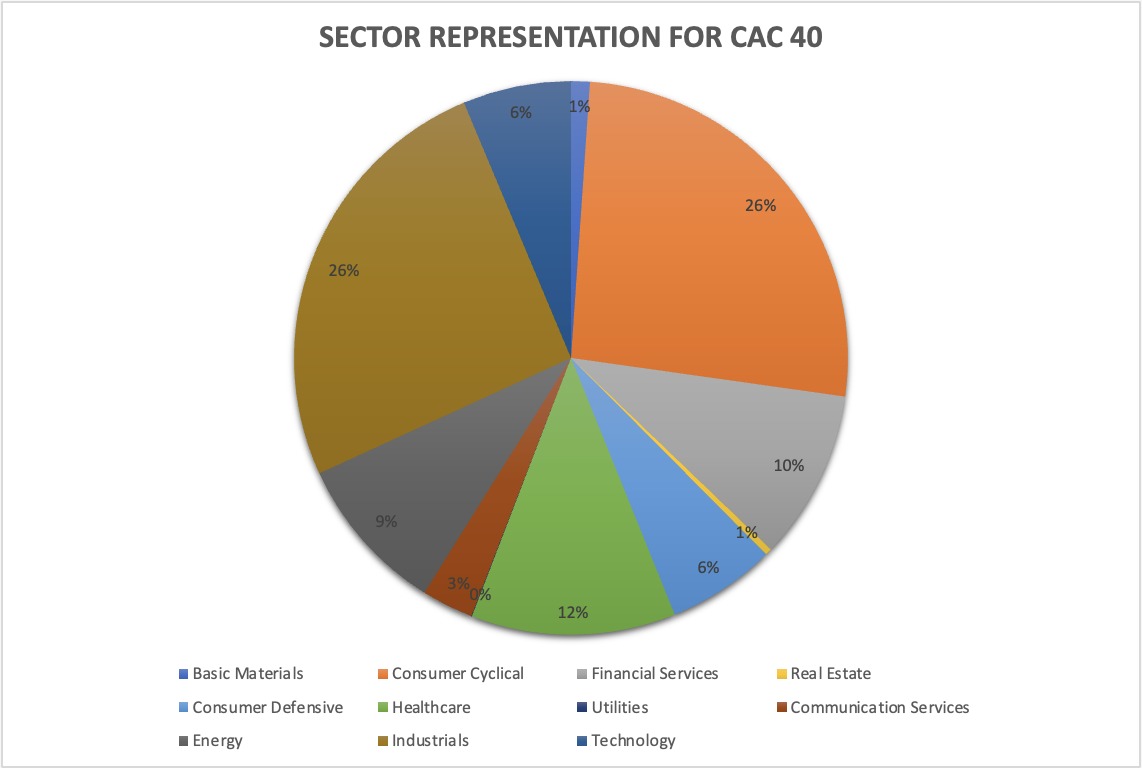

▶ Nithisha CHALLA The CAC 40 index

About portfolio management

▶ Youssef LOURAOUI Portfolio

▶ Jayati WALIA Returns

About statistics

▶ Shengyu ZHENG Moments de la distribution

▶ Shengyu ZHENG Mesures de risques

Useful resources

About the IBEX 35 index

Wikipedia What is the IBEX 35 index

AVA trade An Overview of Spain’s Financial Engine – IBEX 35

DailyFX What is the IBEX 35 Index and what influences its price?

Academic research about risk

Longin F. (2000) From VaR to stress testing: the extreme value approach, Journal of Banking and Finance, 24, 1097-1130.

Longin F. (2016) Extreme events in finance: a handbook of extreme value theory and its applications, Wiley.

Data

Yahoo! Finance

Yahoo! Finance Data for the IBEX 35 index

About the author

The article was written in June 2023 by Nithisha CHALLA (ESSEC Business School, Grande Ecole Program – Master in Management, 2021-2023).