The Price of Gold

In this article, Nithisha CHALLA (ESSEC Business School, Grande Ecole Program – Master in Management (MiM), 2021-2024) explores the complexities behind gold pricing, key external drivers, and historical trends.

Introduction

Gold has been a store of value and medium of exchange for centuries, its price deeply rooted in global economic, political, and financial systems. In modern markets, the price of gold is influenced by a range of factors, from macroeconomic conditions to geopolitical tensions.

What Is the Gold Price?

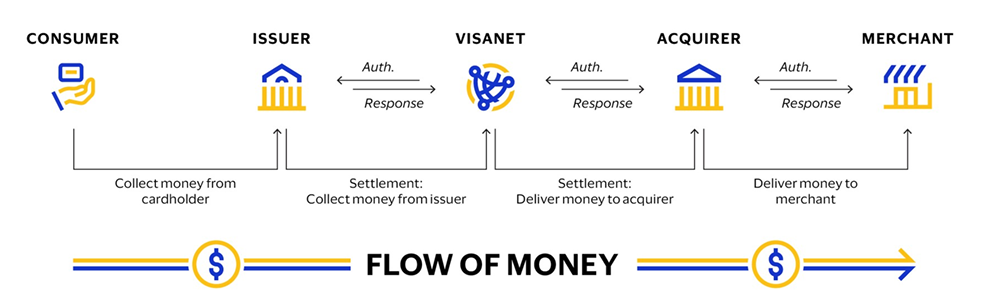

The gold price is the market value of one troy ounce of gold. It is quoted in various currencies, most notably the U.S. dollar (USD). The price fluctuates with supply and demand in international markets. Gold trades over the counter in the London market, which is overseen by the London Bullion Market Association (LBMA), and on futures exchanges such as COMEX in the United States.

Two terms come up whenever gold prices are discussed: the spot price and the futures price. The spot price is the current market price at which gold can be bought or sold for immediate delivery. The futures price is the price agreed today for gold to be delivered at a future date; it can differ from the spot price because of expectations about future market conditions.
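To make the gap between spot and futures prices concrete, here is a minimal sketch based on the textbook cost-of-carry relation, which is one common way to link the two and is not a method taken from this article. The function name `futures_price`, the interest rate, the storage cost, and the spot price are all illustrative assumptions rather than market data.

```python
import math


def futures_price(spot, rate, storage, years):
    """Theoretical futures price under a simple cost-of-carry model.

    spot    : spot price per troy ounce (hypothetical)
    rate    : annual risk-free interest rate, continuously compounded
    storage : annual storage cost as a fraction of the gold's value
    years   : time to delivery in years
    """
    return spot * math.exp((rate + storage) * years)


spot = 2000.0  # hypothetical spot price in USD per troy ounce
fut = futures_price(spot, rate=0.05, storage=0.005, years=0.5)
basis = fut - spot  # how far the futures price sits above spot

print(f"futures price: {fut:.2f} USD, gap over spot: {basis:.2f} USD")
```

In this simplified model the futures price exceeds the spot price whenever financing and storage costs are positive. Real futures prices also reflect market expectations and other frictions that the sketch leaves out.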

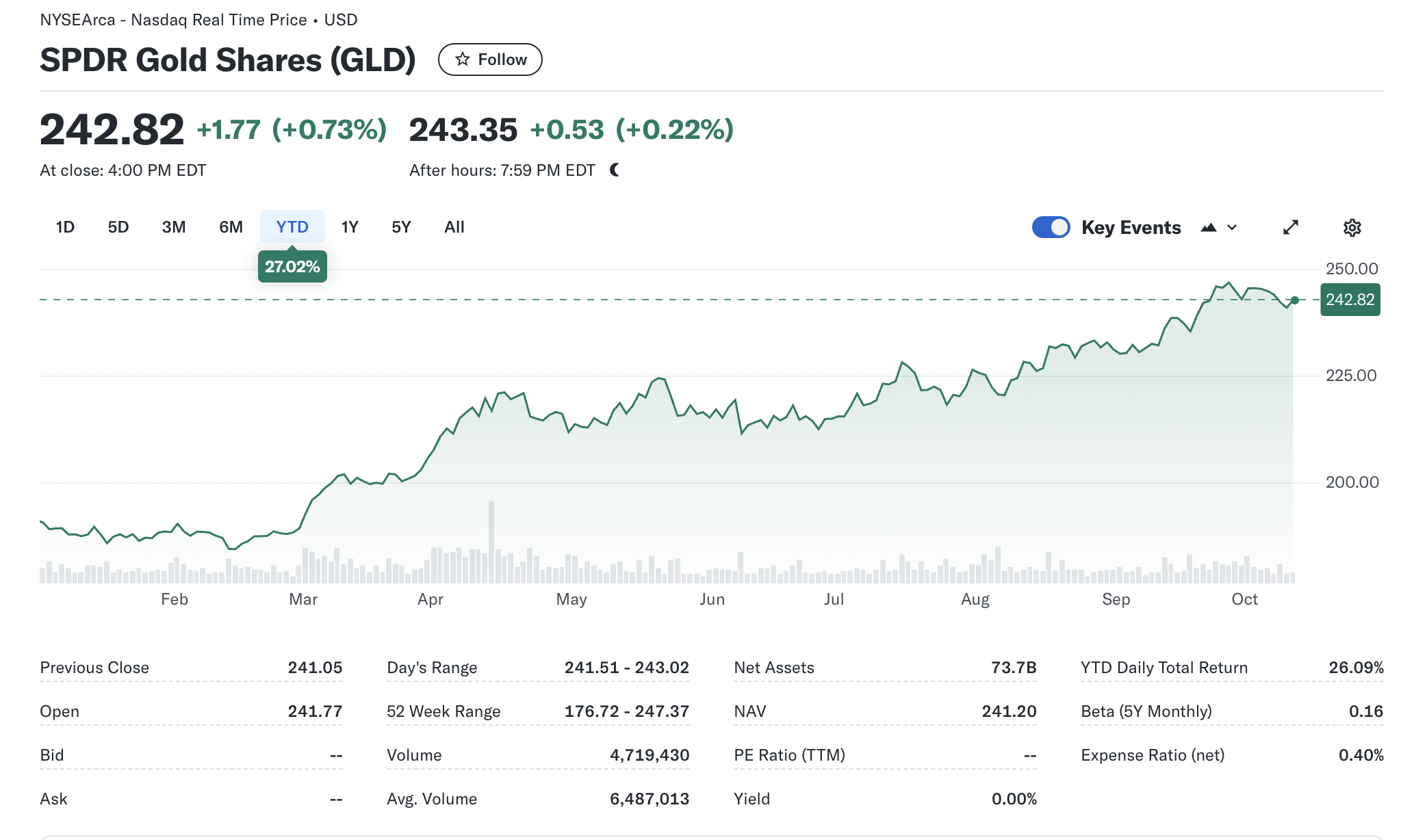

Figure 1 below gives the evolution of the gold price over the period January 1971-September 2024.

Figure 1. Evolution of the Gold price

Source: Wikipedia

Key factors affecting gold price

Supply and demand dynamics

The fundamental economic principle of supply and demand plays a crucial role in determining the price of gold. However, unlike consumable commodities, the supply of gold remains relatively stable since most of the gold ever mined is still in existence.

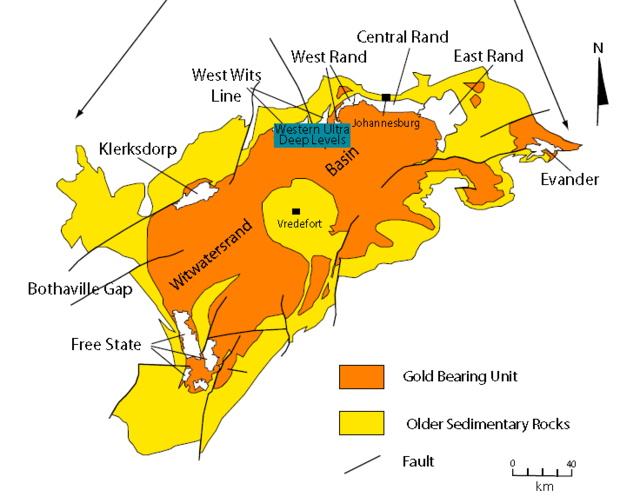

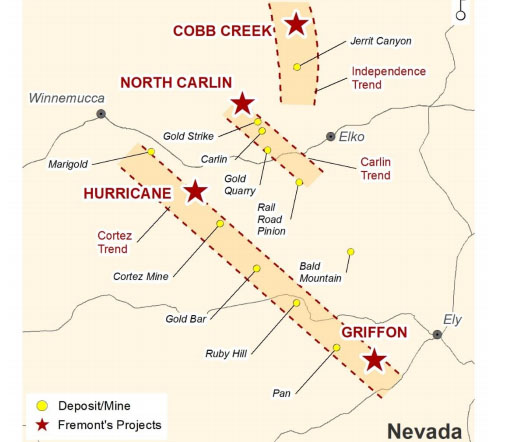

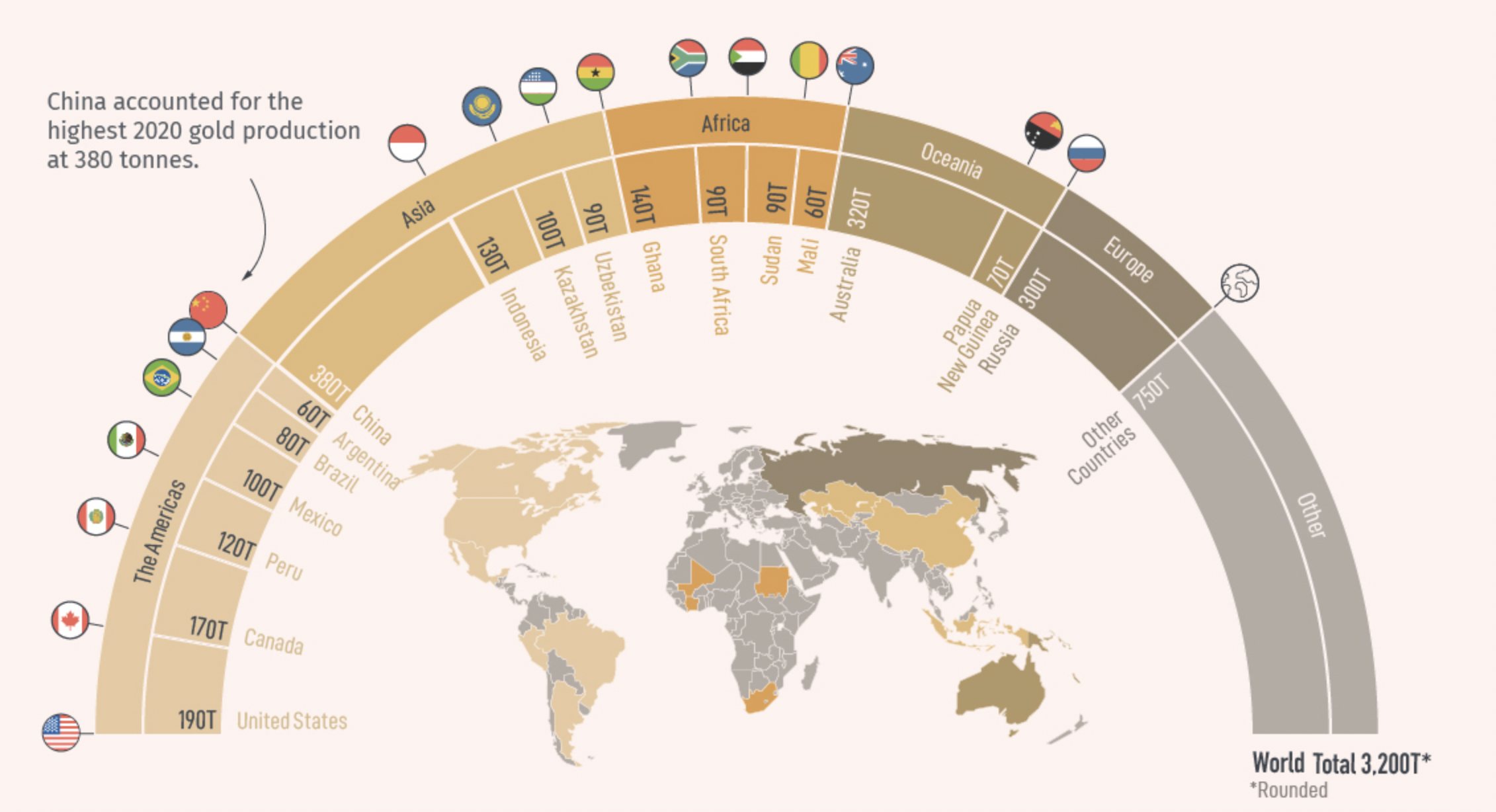

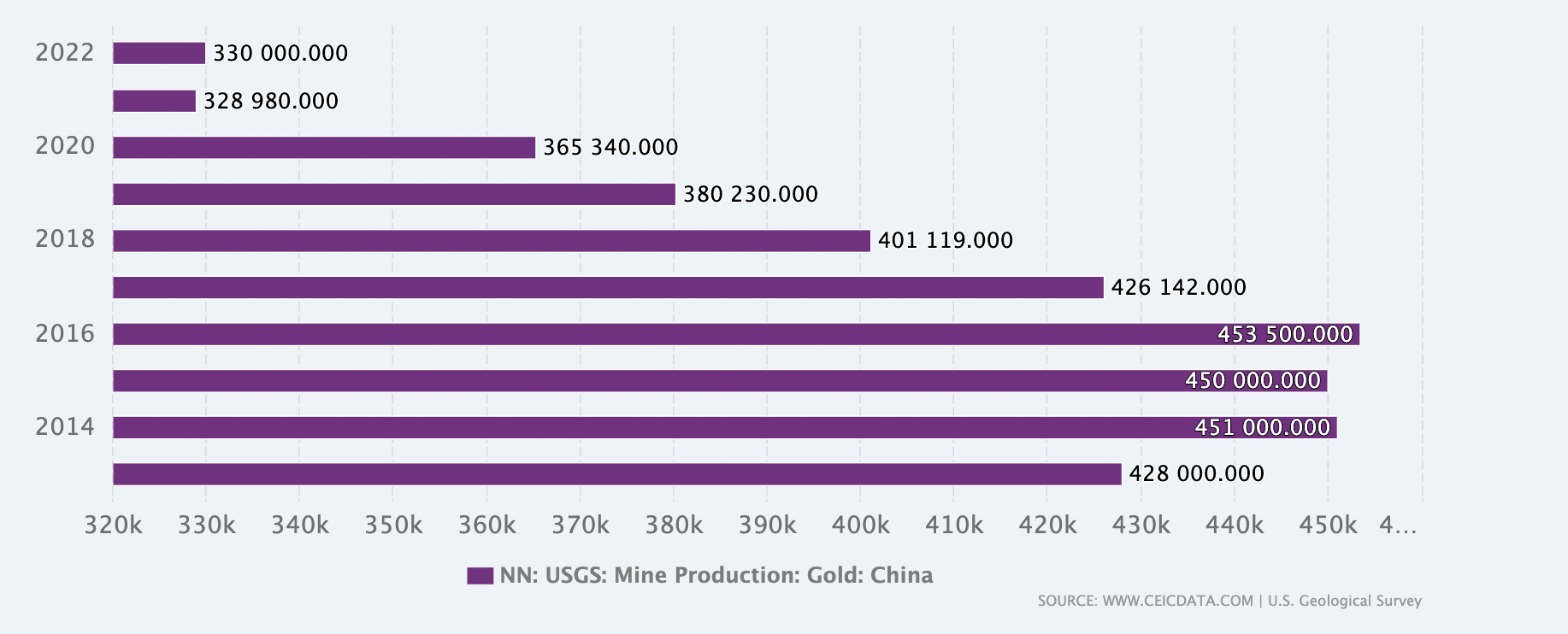

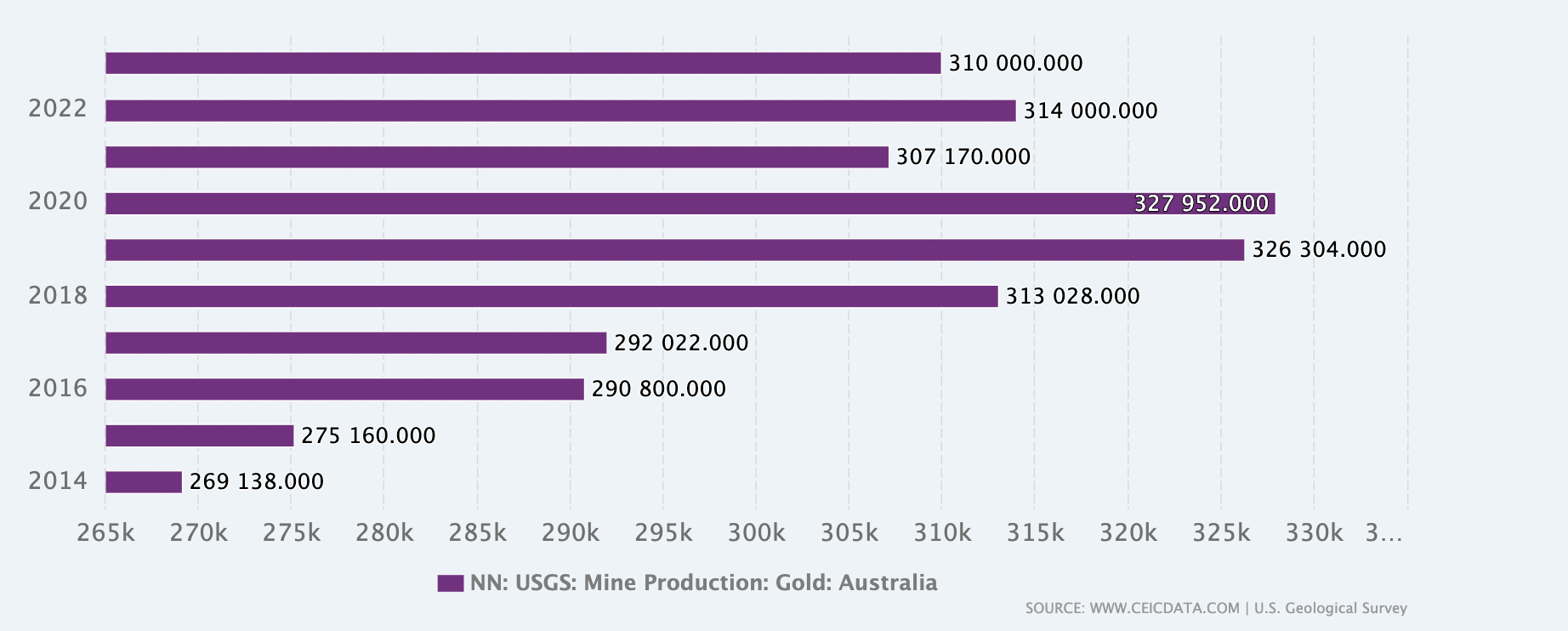

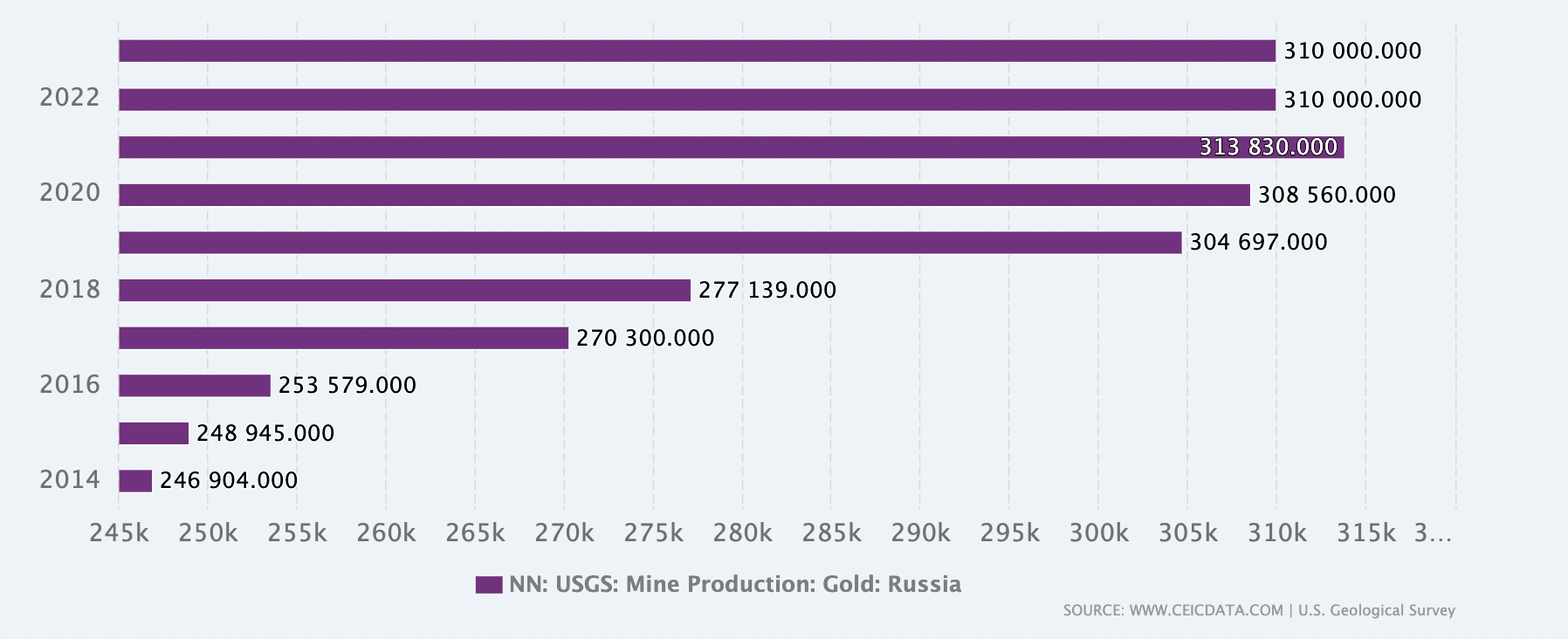

- Global gold production: New gold production, primarily from mining, adds a limited amount to the existing gold supply. Countries like China, Australia, and Russia are major gold producers, and changes in production levels can impact prices.

- Jewelry and industrial demand: Jewelry accounts for a significant portion of global gold demand, particularly in countries like India and China. In addition, gold has applications in technology, particularly in electronics and medical devices.

- Economic conditions: During economic prosperity, people may be more inclined to purchase gold jewelry for personal adornment or as a status symbol. However, during economic downturns, demand for jewelry may decline.

- Central banks: Central banks can significantly impact gold prices by buying or selling gold reserves. Their actions can influence market sentiment and prices.

- Technology: Gold is used in various industries, including electronics, dentistry, and aerospace. Advancements in technology can drive demand for gold in these sectors.

Inflation and gold as a hedge

Gold is traditionally seen as a hedge against inflation. When inflation rises, the purchasing power of fiat currencies decreases, leading investors to seek refuge in assets like gold, which tend to maintain value over time. During periods of high inflation, such as the 1970s, gold prices surged as investors sought protection against currency devaluation.

Erb and Harvey (2013) observe that a common argument for investing in gold is that it is an inflation hedge, a "golden constant". The golden constant can be read as a set of claims: over long periods, the purchasing power of gold remains largely the same; in the long run, inflation is a fundamental driver of the price of gold; deviations of the nominal price of gold from its inflation-adjusted price will be corrected; and in the long run, the real return from owning gold is zero.
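The idea of an inflation-adjusted gold price can be illustrated with a short sketch. It deflates a nominal price by a consumer price index (CPI) so that prices from different years are expressed in the purchasing power of a base year. The function `real_price` and every number in `observations` are hypothetical and chosen only to make the arithmetic easy to follow; they are not historical data.

```python
def real_price(nominal, cpi, cpi_base):
    """Express a nominal price in base-year purchasing power."""
    return nominal * cpi_base / cpi


# (label, nominal gold price, CPI level): all values are made up
observations = [
    ("year A", 400.0, 100.0),
    ("year B", 800.0, 200.0),   # price and CPI both doubled
    ("year C", 1500.0, 250.0),  # price rose faster than CPI
]

cpi_base = observations[0][2]
real_prices = {
    label: real_price(nominal, cpi, cpi_base)
    for label, nominal, cpi in observations
}

for label, value in real_prices.items():
    print(f"{label}: real price = {value:.2f} (base-year units)")
```

If the golden constant held exactly, the real price would be the same in every year, as it is between year A and year B here. Year C shows a nominal price that has moved above its inflation-adjusted level, the kind of deviation the golden constant claims will eventually be corrected.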

Interest rates and opportunity cost

There is a strong inverse relationship between gold prices and interest rates. When interest rates are low, the opportunity cost of holding non-yielding assets like gold decreases, making gold more attractive to investors. The period of low interest rates following the 2008 financial crisis led to a sharp increase in gold prices as central banks worldwide implemented loose monetary policies.
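The opportunity cost argument can be sketched as simple arithmetic: gold pays no interest, so holding it means giving up the interest the same money would have earned elsewhere. The function `forgone_interest`, the position size, and the two yield levels below are assumptions made for illustration.

```python
def forgone_interest(position_value, annual_yield, years):
    """Interest given up by holding a non-yielding asset instead of
    an interest-bearing one, with annual compounding."""
    return position_value * ((1 + annual_yield) ** years - 1)


position = 10_000.0  # hypothetical amount held in gold, in USD

cost_low_rates = forgone_interest(position, annual_yield=0.005, years=1)
cost_high_rates = forgone_interest(position, annual_yield=0.05, years=1)

print(f"cost of holding gold at 0.5% yields: {cost_low_rates:.2f} USD")
print(f"cost of holding gold at 5.0% yields: {cost_high_rates:.2f} USD")
```

The lower the yield available on alternatives, the less an investor gives up by holding gold, which is the mechanism behind the inverse relationship described above.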

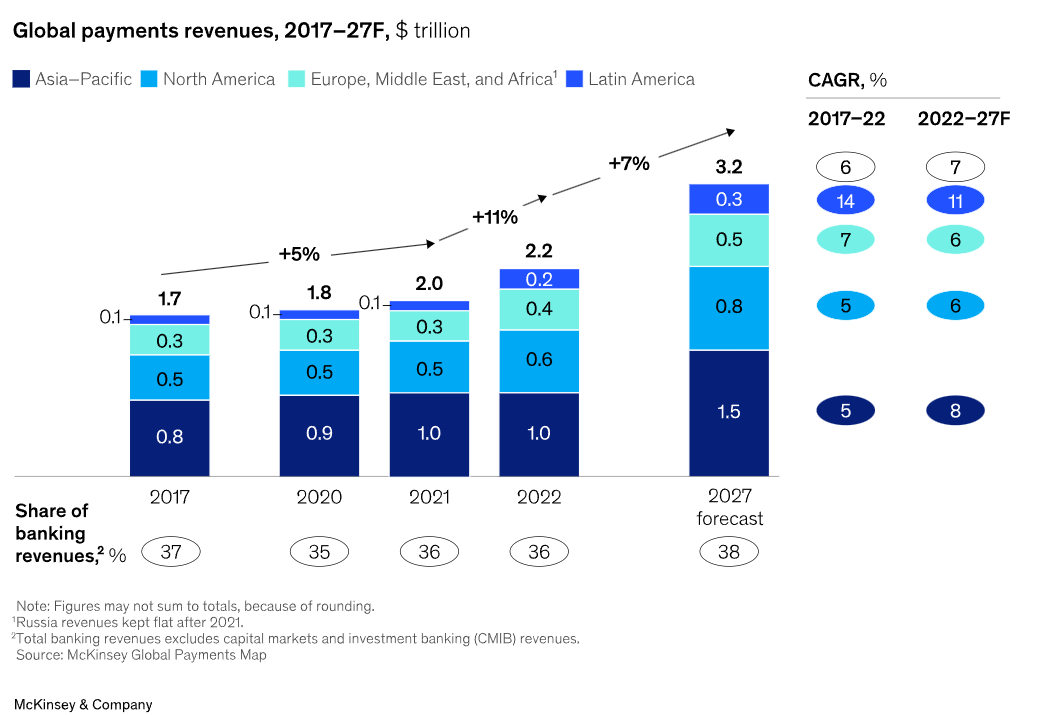

U.S. dollar strength

Gold is priced in U.S. dollars, so fluctuations in the value of the dollar have a direct impact on gold prices. When the U.S. dollar strengthens, gold becomes more expensive for foreign buyers, potentially reducing demand and lowering prices. In 2014, the strengthening of the U.S. dollar against other major currencies contributed to a decline in gold prices.
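A small sketch can show why a stronger dollar makes gold more expensive for buyers who pay in another currency. It converts a fixed USD gold price into a local currency before and after the dollar appreciates. The function `local_price`, the USD price, and the exchange rates are hypothetical values chosen for illustration.

```python
def local_price(usd_price, local_per_usd):
    """Convert a USD gold price into a local-currency price."""
    return usd_price * local_per_usd


usd_price = 2000.0               # hypothetical USD price per troy ounce
rate_before = 0.90               # hypothetical euros per dollar
rate_after = rate_before * 1.10  # the dollar strengthens by 10%

before = local_price(usd_price, rate_before)
after = local_price(usd_price, rate_after)
change = after / before - 1      # relative change in the euro price

print(f"euro price before: {before:.2f}, after: {after:.2f}")
print(f"change for a euro-based buyer: {change:.1%}")
```

With the USD price held constant, the cost to the foreign buyer rises in line with the dollar. In practice the USD price may fall in response, which is the demand effect described above.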

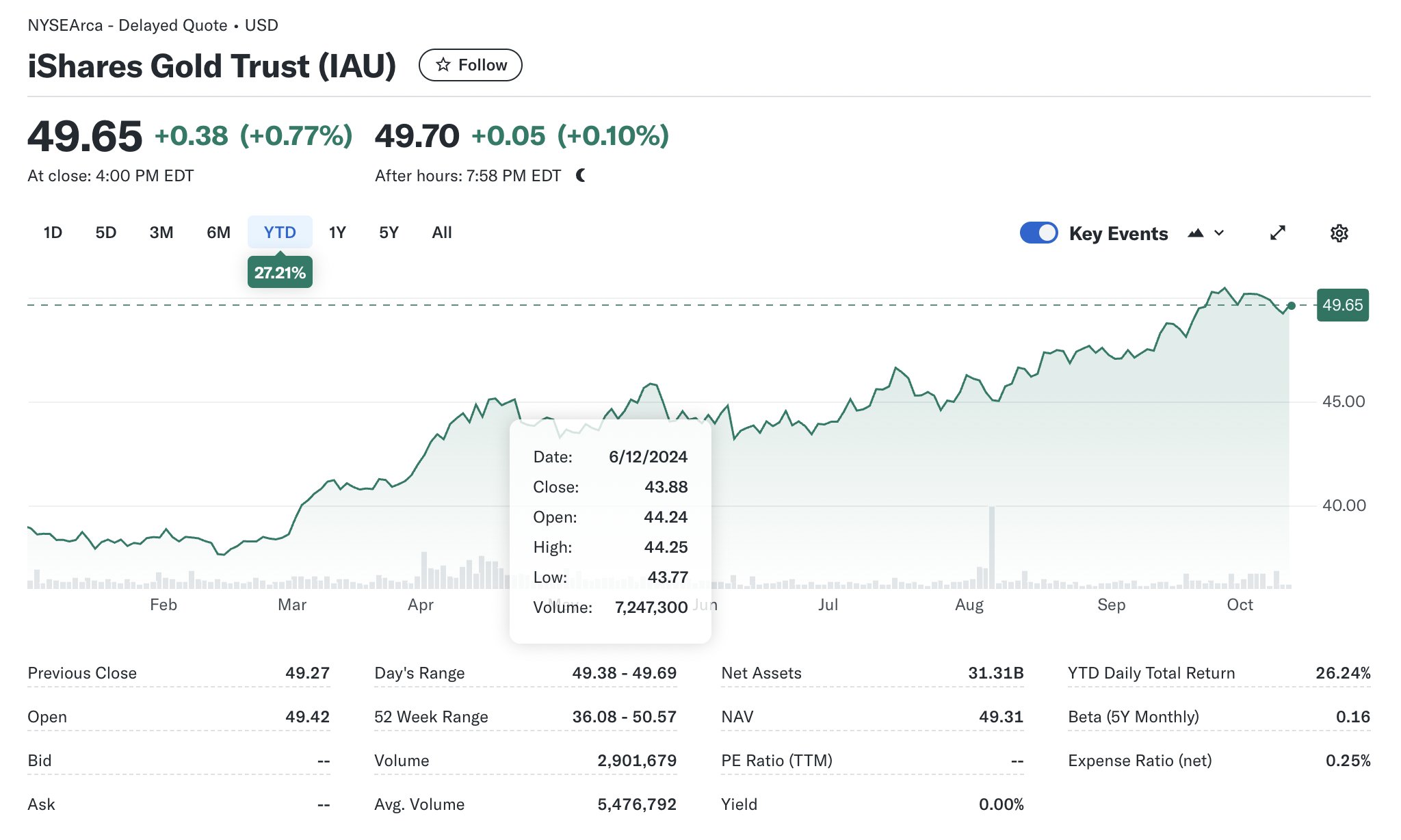

Figure 2 below gives the evolution of the gold price against the US dollar.

Figure 2. Gold price vs US dollar

Source: Bloomberg

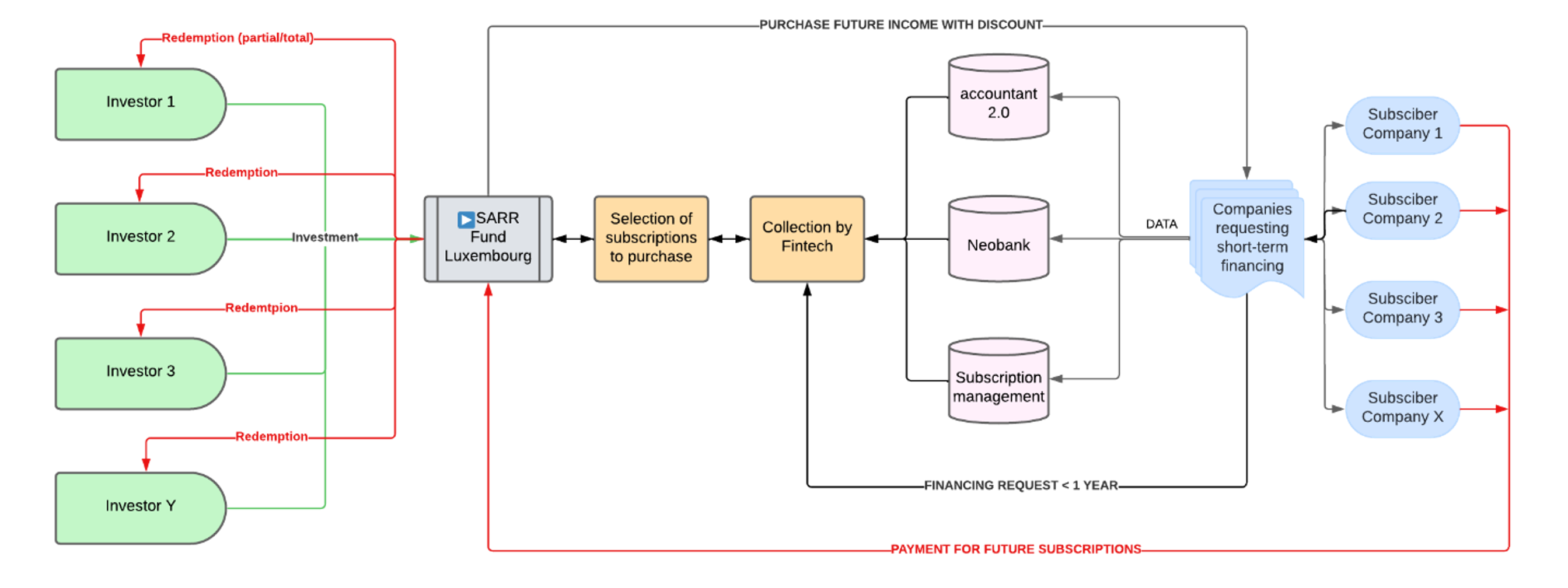

Central bank policies

Central banks around the world hold substantial gold reserves, and their buying or selling behavior can influence gold prices. In recent years, central banks, particularly in emerging markets, have increased their gold holdings to diversify reserves away from fiat currencies like the U.S. dollar. Russia and China have been among the largest buyers of gold in recent years, which has helped support the price of gold.

Gold as a hedge against global financial crises

- The role of gold in times of crisis: Gold is widely regarded as a hedge during financial crises. Investors tend to flock to gold during periods of extreme volatility in stock markets or global currency markets. The COVID-19 pandemic is the most recent example, where gold rallied to record highs as economies around the world faced unprecedented challenges. During the 2008 financial crisis, gold prices surged as investors sought alternatives to failing banking institutions and depreciating fiat currencies.

- Currency devaluation and hyperinflation: In countries facing hyperinflation or severe currency devaluation, such as Venezuela or Zimbabwe, gold has acted as a critical asset to preserve wealth. When a nation’s currency rapidly loses value, gold remains a valuable and stable store of wealth.

Gold price and its relationship with other assets

Gold vs stock market

Gold often moves inversely to the stock market. During periods of stock market decline or volatility, investors tend to move funds into gold, leading to price increases. However, in bull markets, gold may lag as investors seek higher returns in equities. For example, during the 2008 financial crisis, while global stock markets crashed, gold prices surged as it became a safe-haven asset.
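One way to check whether two assets move inversely is to compute the correlation between their returns. The sketch below implements the Pearson correlation coefficient by hand and applies it to two short return series. Both series are invented for illustration and do not describe any actual period; the function name `pearson` is also an assumption of this example.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical periodic returns, not real market data
stock_returns = [0.02, -0.05, 0.01, -0.08, 0.03, 0.04]
gold_returns = [-0.01, 0.03, 0.00, 0.06, -0.02, -0.01]

rho = pearson(stock_returns, gold_returns)
print(f"correlation between stock and gold returns: {rho:.2f}")
```

A value close to -1 indicates that the two series tend to move in opposite directions, a value near 0 indicates little linear relationship, and a value close to +1 indicates that they move together. With real data the result depends heavily on the period and the return frequency chosen.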

Gold vs bonds

There is a similar inverse relationship between gold and bond yields, especially U.S. Treasury yields. When bond yields are low, the opportunity cost of holding gold decreases, making it more attractive. Conversely, rising bond yields can lead to lower demand for gold as bonds offer better returns.

Gold vs. cryptocurrencies

The rise of cryptocurrencies like Bitcoin has sparked debate about whether these digital assets could replace gold as a store of value. Although both assets are seen as alternatives to fiat currency, gold has a longer history and is less volatile. Cryptocurrencies offer higher potential for speculative gains, but their price volatility makes gold the preferred haven during financial crises.

The Future of gold prices

Increasing Central Bank Demand: Central banks, particularly in emerging markets, are expected to continue increasing their gold reserves, further supporting demand and prices.

Competition from digital assets: The rise of digital assets such as Bitcoin has led to debates about whether cryptocurrencies could replace gold as a store of value. While some investors are shifting toward crypto, gold remains a trusted asset with thousands of years of history backing its status as a safe haven.

Conclusion

The gold price is a reflection of a wide array of global economic and political factors, from inflation and central bank policies to geopolitical risks and financial market stability. While gold’s role as a hedge against inflation and a safe-haven asset remains unchanged, its interactions with modern financial markets, including competition from digital assets like cryptocurrencies, continue to evolve. Investors and central banks alike look to gold as a reliable store of value, particularly in times of uncertainty.

Why should I be interested in this post?

Gold has been a key financial asset for centuries, acting as a store of value, a hedge against inflation, and a safe-haven asset during economic crises. Understanding its investment options helps students grasp fundamental market dynamics and investor behavior, especially during economic uncertainty.

Related posts on the SimTrade blog

▶ Nithisha CHALLA History of Gold

▶ Nithisha CHALLA Gold resources in the world

Useful resources

Academic research

Erb, C.B., and C.R. Harvey (2013) The Golden Dilemma. Financial Analysts Journal 69 (4): 10–42.

Erb, C.B., and C.R. Harvey (2024) Is There Still a Golden Dilemma? Working paper.

Business resources

World Gold Council Gold spot prices

Bloomberg Investing in Gold: Is Gold Still a Good Inflation Hedge in a Recession?

FocusEconomics Gold: The Most Precious of Metals

Other

Wikipedia Gold

About the author

The article was written in October 2024 by Nithisha CHALLA (ESSEC Business School, Grande Ecole Program – Master in Management (MiM), 2021-2024).