Quantitative risk management

In this article, Jayati WALIA (ESSEC Business School, Grande Ecole Program – Master in Management, 2019-2022) presents Quantitative risk management.

Introduction

Risk refers to the degree of uncertainty in the future value of an investment or to the potential losses that may occur. Risk management is an integral part of any financial institution, as it safeguards the institution's investments against different risks. The key question at the heart of any risk management strategy is how much the profit and loss (P&L) of an investment can vary.

The risk management process has three major phases. The first phase is risk identification, which focuses on identifying the risk factors to which the institution is exposed. It is followed by risk measurement, which can rely on different types of metrics, from the monitoring of open positions to statistical models and Value-at-Risk. Finally, in the third phase, risk is managed by setting risk limits based on the institution's risk appetite, backtesting (testing the quality of the models on historical data) and stress testing (assessing the impact of severe but still plausible adverse scenarios).
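To make the backtesting step concrete, here is a minimal Python sketch, assuming the model produces one VaR forecast per day and that losses are recorded as positive numbers. The function names and the ten-day data set are hypothetical. The idea is simply to count the days on which the realized loss exceeded the VaR forecast (the "exceptions") and to compare that count with the number a correct model would produce at the chosen confidence level.

```python
def count_var_exceptions(losses, var_forecasts):
    """Count the days on which the realized loss exceeded that day's VaR forecast."""
    if len(losses) != len(var_forecasts):
        raise ValueError("losses and var_forecasts must have the same length")
    return sum(1 for loss, var in zip(losses, var_forecasts) if loss > var)


def backtest_summary(losses, var_forecasts, confidence=0.99):
    """Compare observed exceptions with the number expected under a correct model."""
    n_days = len(losses)
    observed = count_var_exceptions(losses, var_forecasts)
    # A correct 99% VaR should be exceeded on about 1% of days
    expected = n_days * (1 - confidence)
    return {
        "days": n_days,
        "observed_exceptions": observed,
        "expected_exceptions": expected,
        "exception_rate": observed / n_days,
    }


# Hypothetical daily losses (positive = loss) and a constant 99% VaR forecast of 2.0
daily_losses = [0.5, -1.2, 2.3, 0.1, -0.4, 1.9, 3.1, -0.8, 0.0, 1.1]
var_99 = [2.0] * len(daily_losses)
print(backtest_summary(daily_losses, var_99))
```

With only ten days the comparison is not meaningful; in practice a much longer window (for example one year of roughly 250 trading days) is used, and the exception count is then judged with a statistical test.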

Different types of risks

There are several types of risks inherent in any investment. They can be categorized in the following ways:

Market risk

An institution can invest in a broad range of financial products, including stocks, bonds, currencies, commodities, derivatives and interest rate swaps. Market risk refers to the risk arising from fluctuations in the market prices of the assets that an institution trades or invests in. Price changes driven by market volatility can cause financial losses; to analyze and hedge against this risk, institutions must constantly monitor the performance of their assets. After measuring the risk, they must also take the necessary measures to mitigate it and protect the institution's capital. Types of market risk include interest rate risk, equity risk, currency risk and credit spread risk.
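A simple way to see how price fluctuations translate into losses is to revalue each position after a hypothetical price move. The Python sketch below assumes linear positions (value = quantity × price) and made-up shock scenarios; the asset names, quantities and shock sizes are illustrative only. Portfolios containing options or other non-linear instruments would need a full revaluation rather than this linear approximation.

```python
# Hypothetical portfolio: asset -> (quantity held, current price)
portfolio = {
    "equity_index_fund": (1_000, 50.0),
    "government_bond": (200, 100.0),
    "foreign_currency_cash": (10_000, 1.10),
}

# Hypothetical scenarios: relative price change applied to each asset
scenarios = {
    "equity_crash": {"equity_index_fund": -0.30, "government_bond": 0.02,
                     "foreign_currency_cash": -0.05},
    "rate_rise": {"equity_index_fund": -0.05, "government_bond": -0.08,
                  "foreign_currency_cash": 0.01},
}


def scenario_pnl(portfolio, shocks):
    """P&L from revaluing each linear position after a relative price shock.

    Assets missing from `shocks` are assumed to be unchanged (shock of 0).
    """
    return sum(
        qty * price * shocks.get(asset, 0.0)
        for asset, (qty, price) in portfolio.items()
    )


for name, shocks in scenarios.items():
    print(name, round(scenario_pnl(portfolio, shocks), 2))
```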

Credit risk

Credit risk is the risk of not receiving promised repayments because the counterparty fails to meet its obligations. Counterparty risk can arise from a change in the credit rating of the issuer or the client, or from a default on a due obligation. Default risk arises, for example, from non-payment on loans granted to the institution's clients or partners. After the financial crisis of 2008-09, the importance of measuring and mitigating credit risk increased manyfold, since the crisis was mainly caused by defaults on sub-prime mortgages.

Operational risk

Operational risk refers to the risk of financial losses resulting from failed or faulty internal processes, people (human error or fraud) or systems, or from external events such as fraud, natural disasters or terrorism. Operational risks are generally difficult to measure and can have large impacts that are hard to anticipate.

Liquidity risk

Liquidity risk comprises two types: market liquidity risk and funding liquidity risk. Market liquidity risk arises from a lack of marketability of an asset, i.e., the asset is comparatively illiquid or difficult to sell because market demand is low. Funding liquidity risk, on the other hand, refers to the ease with which institutions can raise funding; institutions must therefore ensure that they can raise and retain debt capital to meet margin or collateral calls on their leveraged positions.

Strategic risk

Strategic risks arise from poor strategic business decisions and include legal risk, reputational risk, and systematic and model risks.

Basel Committee on Banking Supervision

The Basel Committee on Banking Supervision (BCBS) was formed in 1974 by central bankers from the G10 countries. The committee is headquartered at the Bank for International Settlements (BIS) in Basel, Switzerland. The BCBS is the primary global standard setter for the prudential regulation of banks and provides a forum for regular cooperation on banking supervisory matters. Its 45 members comprise central banks and bank supervisors from 28 jurisdictions, including Australia, Belgium, Canada, Brazil, China, France, Hong Kong, Italy, Germany, India, Korea, the United States, the United Kingdom, Luxembourg, Japan, Russia, Switzerland, the Netherlands, Singapore and South Africa, among others.

Over the years, the BCBS has developed influential policy recommendations on international banking and financial regulation, known as the Basel Accords, in order to promote sound corporate governance and risk management (especially of market, credit and operational risks). The key function of the Basel Accords is to set banks' capital requirements so that banks hold enough capital to meet their financial obligations and thereby survive periods of financial and/or economic distress.

The following versions of the Basel Accords have been released to enhance international banking regulatory frameworks, improve the sector's ability to cope with financial distress, strengthen risk management and promote transparency:

Basel I

The first of the Basel Accords, Basel I (also known as the Basel Capital Accord), was developed in 1988 and implemented in the G10 countries by 1992. It aimed to improve the stability of financial institutions by setting minimum capital requirements for international banks, and it provided a framework for managing credit risk through the risk-weighting of different assets, which was also used to assess banks' creditworthiness.
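The risk-weighting idea can be illustrated with a short Python sketch. It assumes a simplified set of Basel I-style risk weights (0% for cash and OECD government debt, 20% for claims on OECD banks, 50% for residential mortgages and 100% for corporate loans) and the 8% minimum ratio of capital to risk-weighted assets. The actual accord defines more categories and distinguishes between tiers of capital, and the balance sheet below is hypothetical.

```python
# Simplified Basel I-style risk weights (illustrative subset of the categories)
RISK_WEIGHTS = {
    "cash_and_oecd_government": 0.00,
    "oecd_bank_claims": 0.20,
    "residential_mortgages": 0.50,
    "corporate_loans": 1.00,
}
MIN_CAPITAL_RATIO = 0.08  # minimum capital / risk-weighted assets


def risk_weighted_assets(exposures):
    """Sum of each exposure multiplied by the risk weight of its category."""
    return sum(amount * RISK_WEIGHTS[category] for category, amount in exposures.items())


def capital_check(capital, exposures):
    """Return (RWA, capital ratio, whether the minimum ratio is met)."""
    rwa = risk_weighted_assets(exposures)
    ratio = capital / rwa
    return rwa, ratio, ratio >= MIN_CAPITAL_RATIO


# Hypothetical balance sheet, in millions
exposures = {
    "cash_and_oecd_government": 200.0,
    "oecd_bank_claims": 100.0,
    "residential_mortgages": 300.0,
    "corporate_loans": 400.0,
}
rwa, ratio, compliant = capital_check(capital=50.0, exposures=exposures)
print(f"RWA = {rwa:.1f}, capital ratio = {ratio:.2%}, compliant = {compliant}")
```

Although total assets here are 1,000, the risk-weighted assets are only 570, because low-risk exposures count for less; the capital requirement is computed on the weighted figure.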

However, the accord had many limitations. Basel I focused only on credit risk, ignoring other risk types such as market risk, operational risk, strategic risk and macroeconomic conditions, which were not covered by the regulations. Also, its requirements were nearly the same for all banks, regardless of a bank's risk level and type of activity.

Basel II

Basel II regulations were developed in 2004 as an extension of Basel I, with a more comprehensive risk management framework that included standardized measures for managing credit, operational and market risks. Basel II strengthened supervisory mechanisms and market transparency by introducing disclosure requirements intended to promote market discipline.

Basel III

After the 2008 financial crisis, the BCBS concluded that the Basel regulations still needed to be strengthened, notably to cover banks' risk exposures more effectively and to improve the quality and measurement of regulatory capital relative to banks' risks.

Basel III aims to correct the miscalculations of risk that were believed to have contributed to the crisis by requiring banks to hold a higher percentage of their assets in more liquid instruments and to rely more on equity than on debt for funding. Basel III thus seeks to strengthen resilience, reduce the risk of system-wide financial shocks and prevent future economic credit events. The Basel III regulations were introduced in 2009 and the implementation deadline was initially set for 2015; however, due to conflicting negotiations, it has been repeatedly postponed and is currently set for January 1, 2022.

Risk Measures

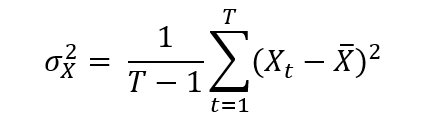

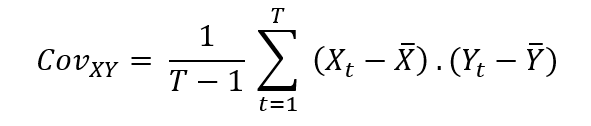

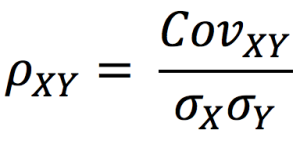

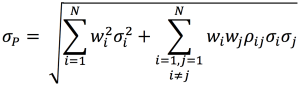

Efficient risk measurement based on relevant risk measures is a fundamental pillar of risk management. The following measures are commonly used by institutions for quantitative risk management:

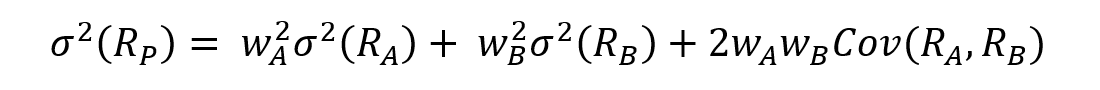

Value at risk (VaR)

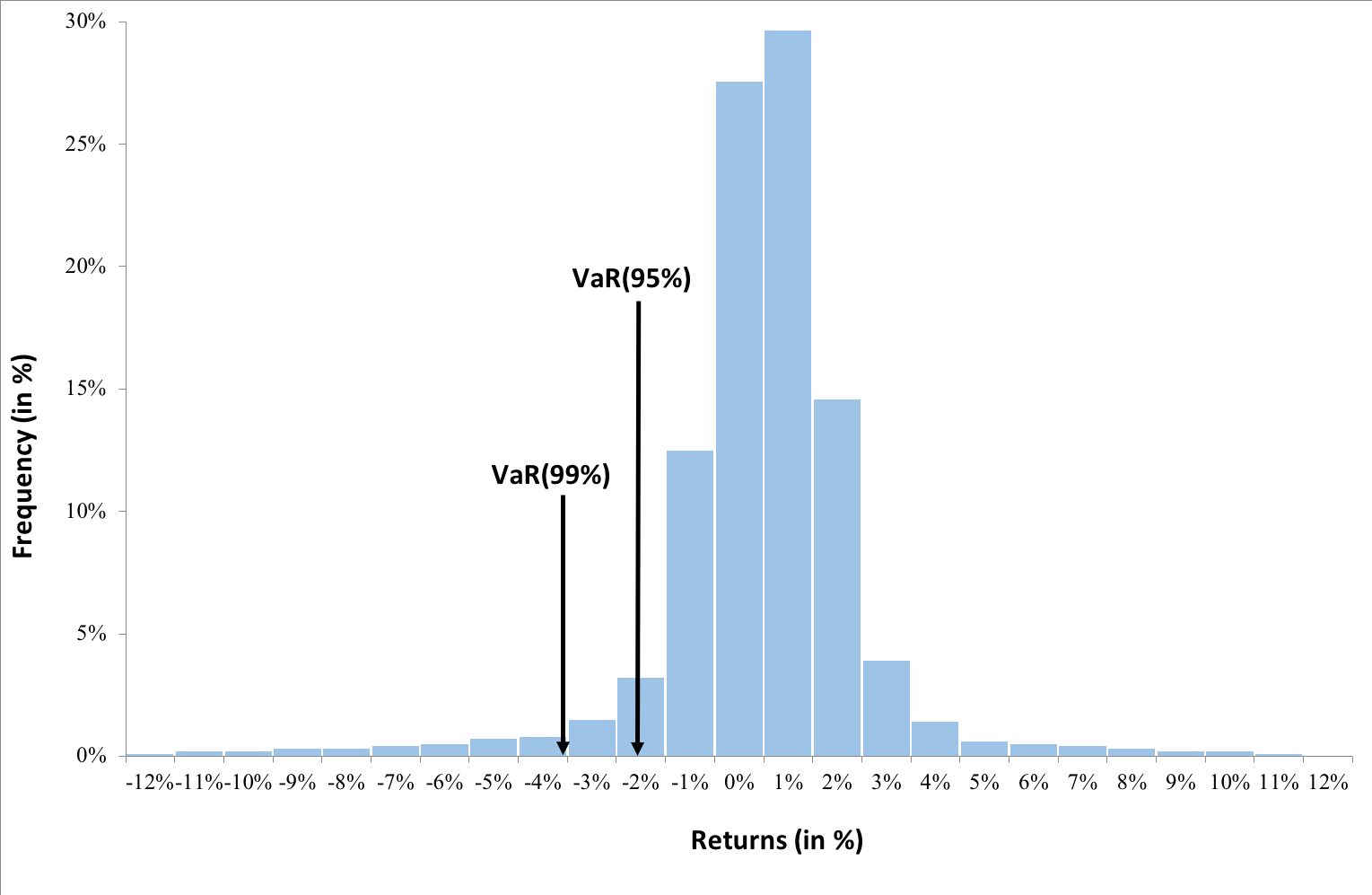

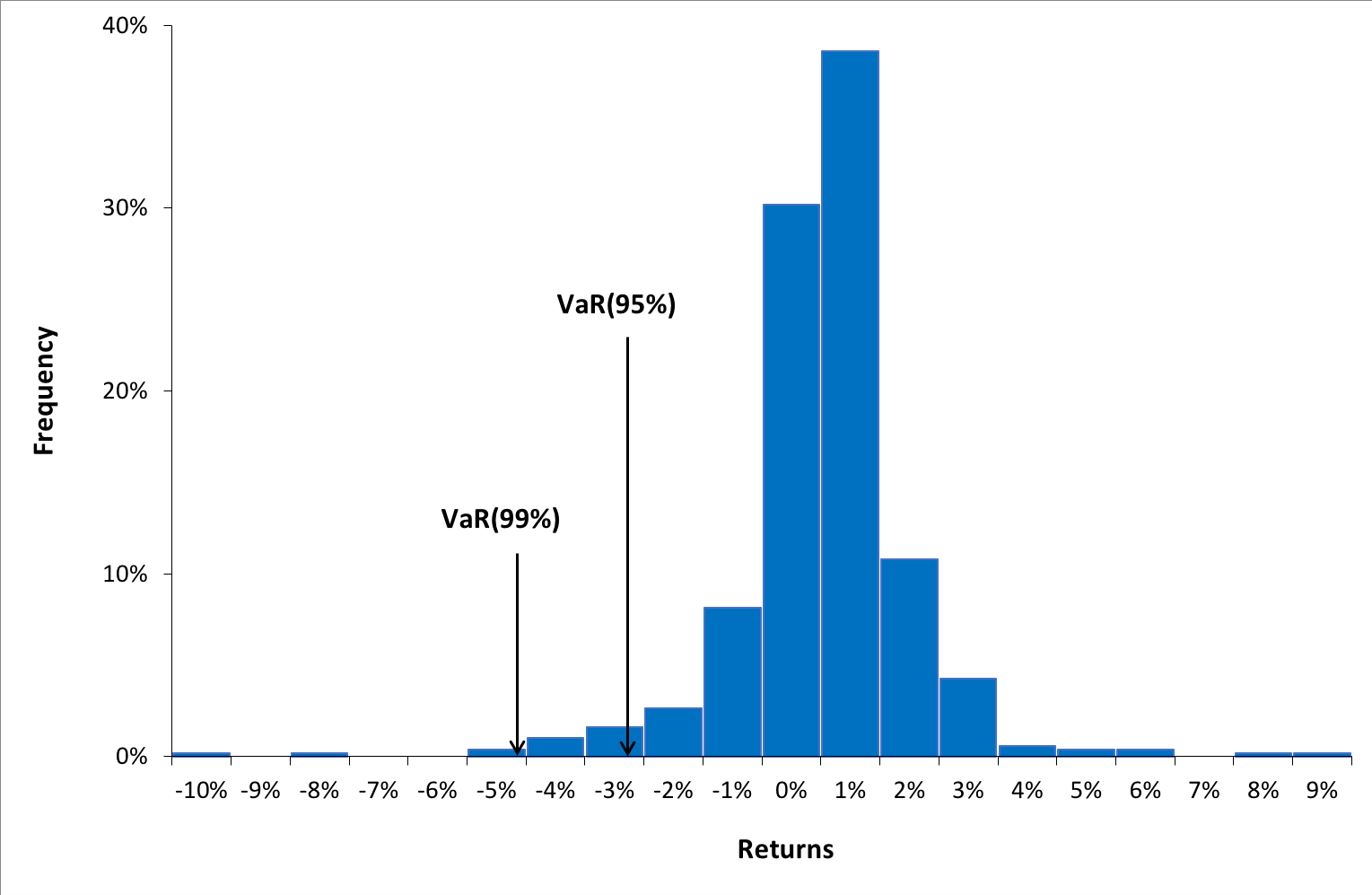

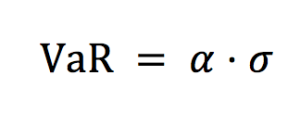

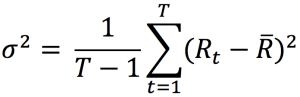

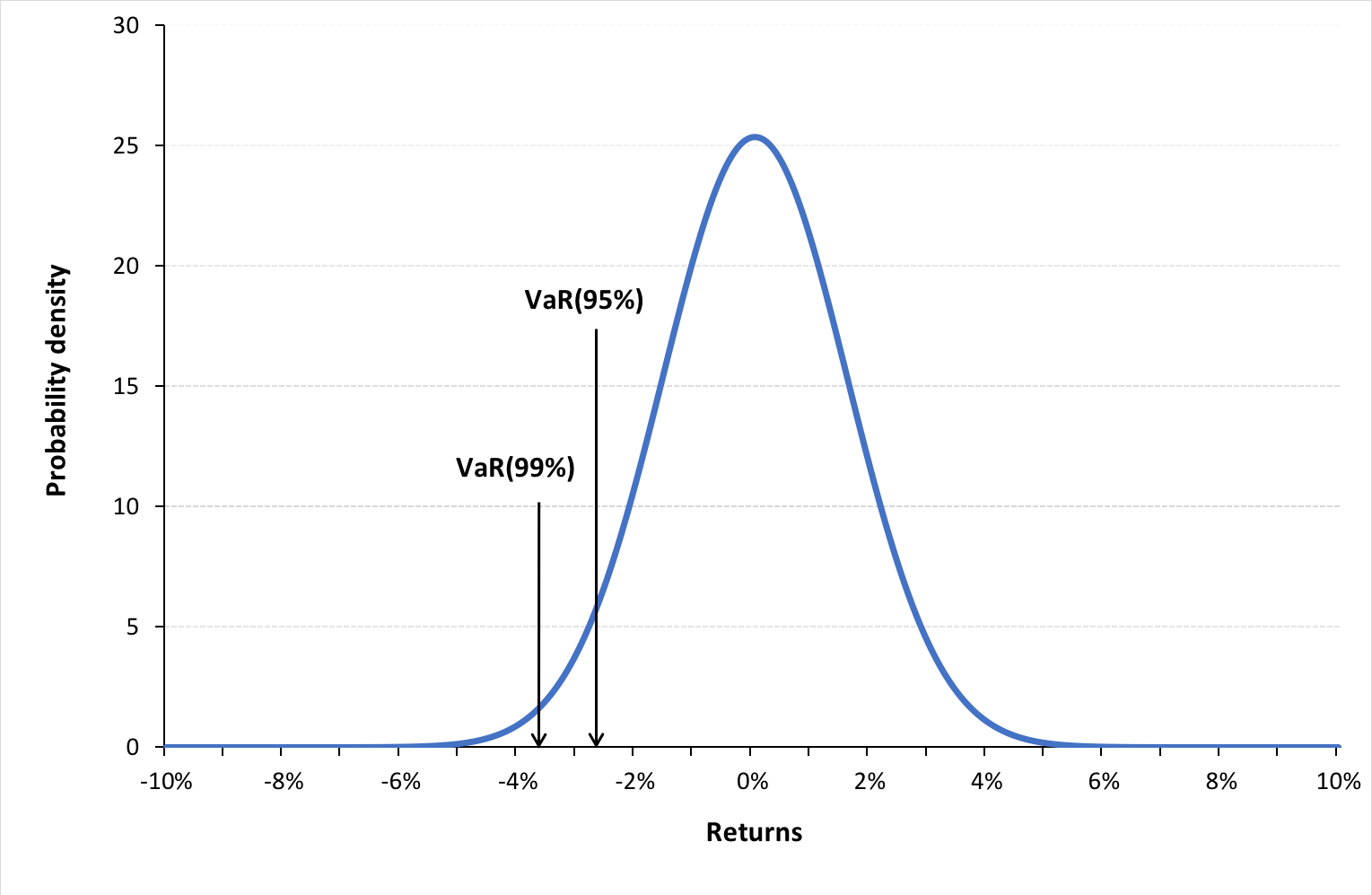

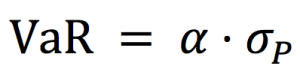

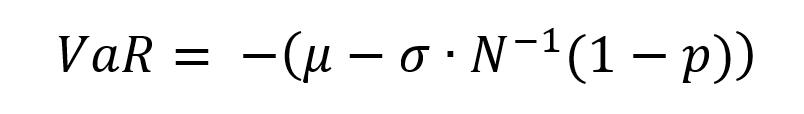

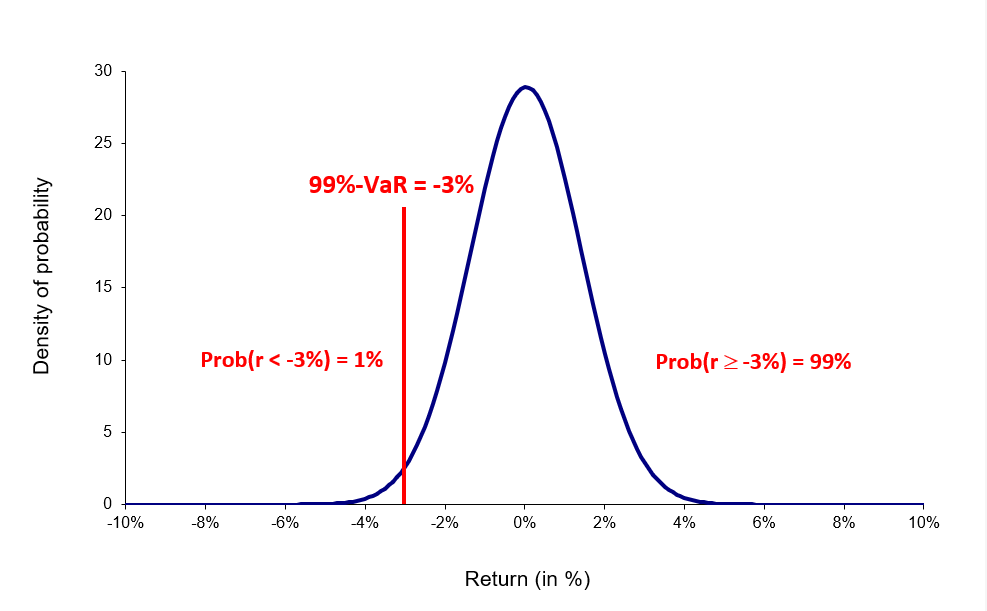

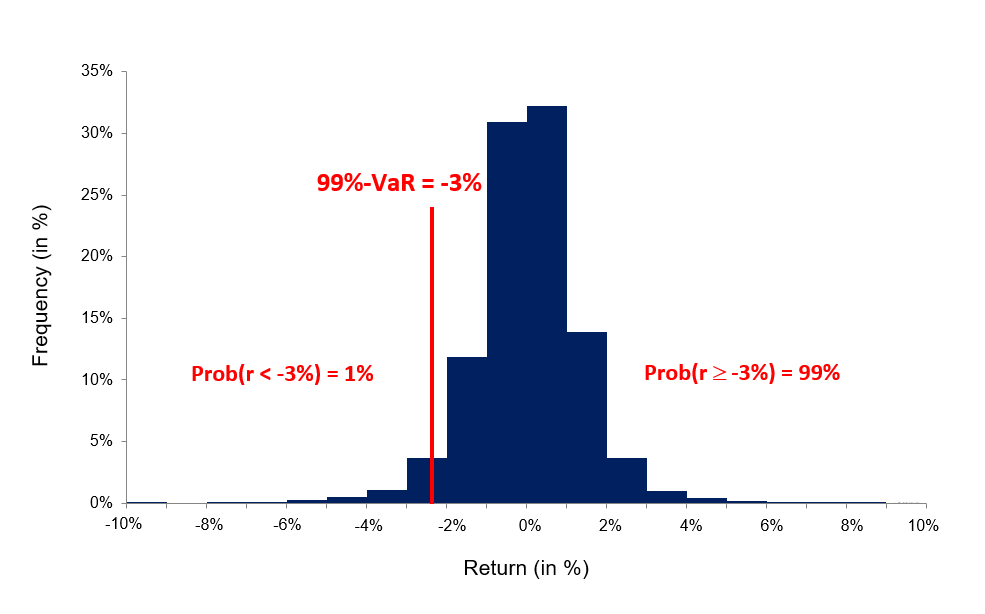

VaR is the most widely used risk measure. It is the maximum loss that should not be exceeded over a specific period of time with a given probability. VaR is mainly used to calculate the minimum capital institutions need to meet their financial obligations, to set limits for asset management and allocation, to calculate risk-based insurance premiums and to set margins for derivatives transactions.
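For reference, one common formal version of this definition writes L for the loss over the chosen horizon (losses counted as positive) and α for the confidence level (for example 99%). The second line is the special case where L is assumed to be normally distributed with mean μ and standard deviation σ, with Φ the standard normal distribution function:

```latex
\mathrm{VaR}_\alpha = \inf\{\ell \in \mathbb{R} : P(L > \ell) \le 1 - \alpha\}

% Special case: L normally distributed with mean \mu and standard deviation \sigma
\mathrm{VaR}_\alpha = \mu + \sigma \, \Phi^{-1}(\alpha)
```

In words, VaR is the smallest loss threshold that is exceeded with probability at most 1 − α.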

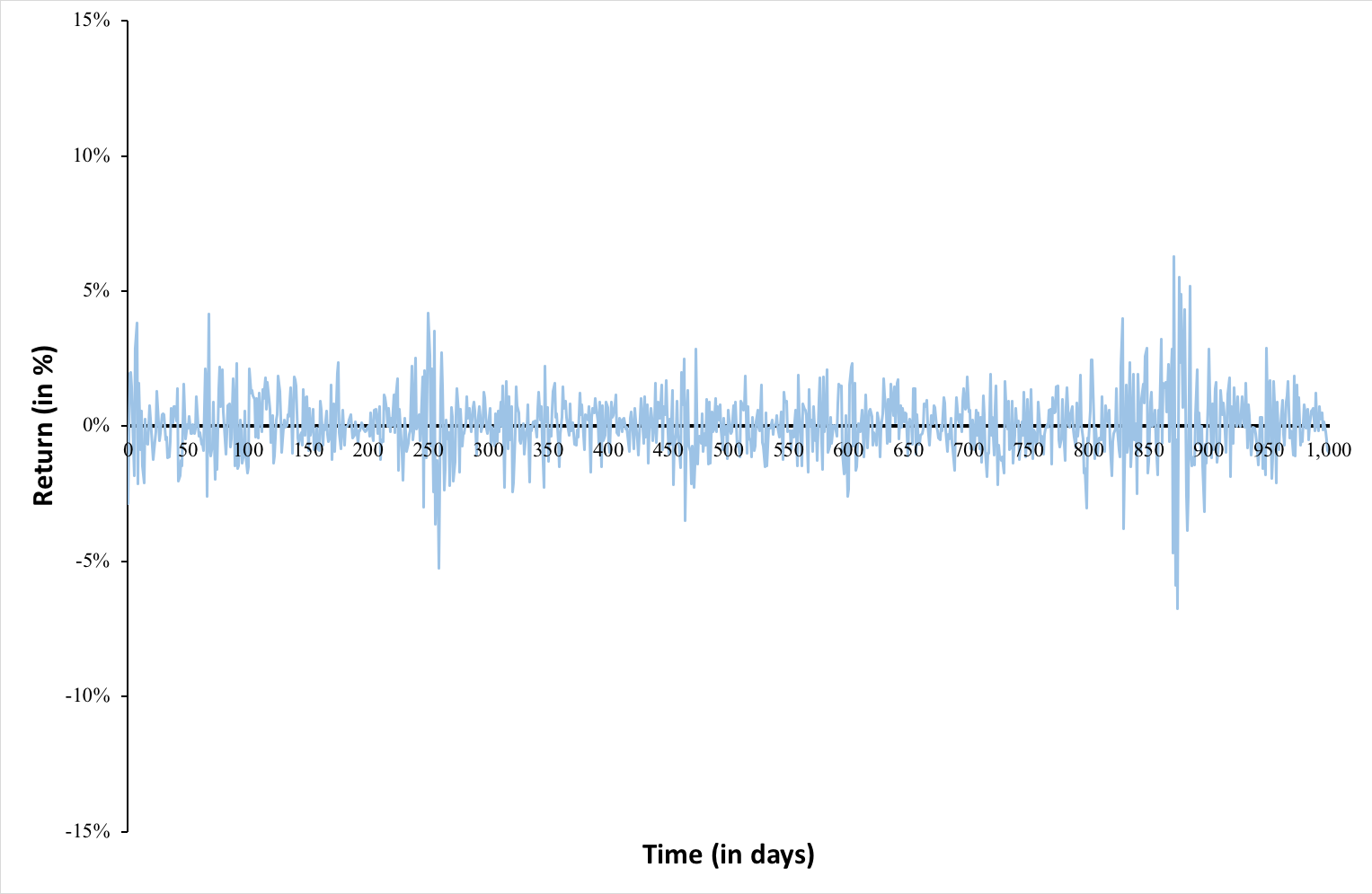

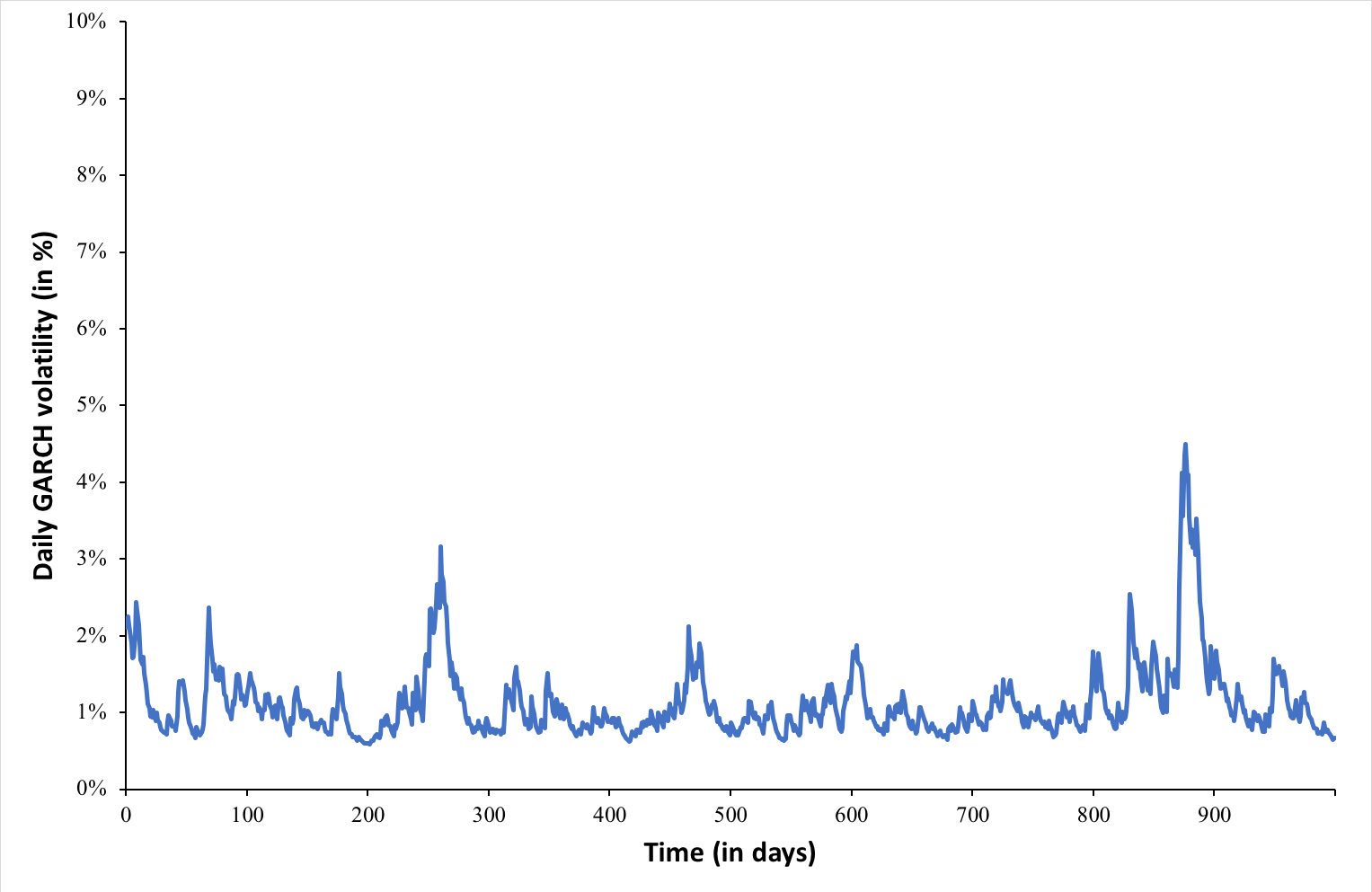

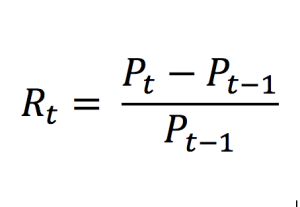

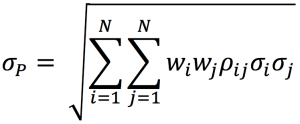

To estimate market risk, we model the statistical distribution of changes in the value of the market position. Common models for this task include the normal distribution, the historical distribution and distributions obtained by Monte Carlo simulation.
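The three approaches can be compared in a short Python sketch that uses only the standard library. It assumes losses are expressed as positive numbers and that VaR is read off as the empirical quantile (historical and Monte Carlo methods) or from the normal quantile function (parametric method). The Monte Carlo part assumes a single position whose log-return over the horizon is normally distributed. The function names, parameters and synthetic data are hypothetical and for illustration only.

```python
import math
import random
from statistics import NormalDist, mean, stdev


def empirical_quantile(sample, confidence):
    """Smallest sample value x such that at least `confidence` of the sample is <= x."""
    ordered = sorted(sample)
    # The small tolerance guards against floating-point round-up in confidence * n
    k = math.ceil(confidence * len(ordered) - 1e-9)
    return ordered[max(k - 1, 0)]


def historical_var(losses, confidence=0.99):
    """Historical VaR: empirical quantile of past losses (positive = loss)."""
    return empirical_quantile(losses, confidence)


def normal_var(losses, confidence=0.99):
    """Parametric VaR: fit a normal distribution to the losses, then take its quantile."""
    mu, sigma = mean(losses), stdev(losses)
    return mu + sigma * NormalDist().inv_cdf(confidence)


def monte_carlo_var(position_value, mu, sigma, confidence=0.99, n_sims=100_000, seed=42):
    """Monte Carlo VaR for one position whose log-return is assumed to be N(mu, sigma^2)."""
    rng = random.Random(seed)
    simulated_losses = [
        position_value * (1.0 - math.exp(rng.gauss(mu, sigma))) for _ in range(n_sims)
    ]
    return empirical_quantile(simulated_losses, confidence)


# Synthetic daily losses (in %, positive = loss), for illustration only
data_rng = random.Random(0)
past_losses = [data_rng.gauss(0.0, 1.0) for _ in range(500)]

print("historical 99% VaR:", round(historical_var(past_losses), 3))
print("normal 99% VaR:", round(normal_var(past_losses), 3))
print("Monte Carlo 99% VaR:", round(monte_carlo_var(1_000_000, 0.0, 0.01), 2))
```

Because the synthetic losses are drawn from a standard normal distribution, the historical and normal estimates should both be in the neighborhood of 2.33, the 99% quantile of the standard normal distribution. With real return data, fat tails can make the historical estimate differ noticeably from the normal one. The Monte Carlo figure for a 1,000,000 position with 1% volatility should be roughly 23,000.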

Expected Shortfall

Expected Shortfall (ES), also known as Conditional VaR (CVaR), Average Value at Risk (AVaR), Expected Tail Loss (ETL) or Beyond the VaR (BVaR), is a statistical measure used to quantify the market risk of a portfolio. It represents the expected loss given that the loss exceeds the VaR calculated at a specific probability level (also known as the confidence level).
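Following the historical approach, the Python sketch below estimates VaR and Expected Shortfall from a single sample of losses. It assumes losses are positive numbers, takes VaR as the empirical quantile, and estimates ES as the average of the losses at or above that VaR (for a continuous loss distribution this equals the average of the losses strictly beyond it). This tail average is one simple estimator among several, and the function name and the 97.5% default level are illustrative choices.

```python
import math


def historical_var_es(losses, confidence=0.975):
    """Estimate VaR and Expected Shortfall from a sample of losses (positive = loss).

    VaR is the empirical quantile of the losses; ES is the average of the
    losses that are greater than or equal to that VaR.
    """
    if not losses:
        raise ValueError("losses must not be empty")
    ordered = sorted(losses)
    # The small tolerance guards against floating-point round-up in confidence * n
    k = math.ceil(confidence * len(ordered) - 1e-9)
    var = ordered[max(k - 1, 0)]
    tail = [loss for loss in ordered if loss >= var]
    es = sum(tail) / len(tail)
    return var, es


# Hypothetical sample: 100 losses equal to 1, 2, ..., 100
sample = [float(i) for i in range(1, 101)]
var_975, es_975 = historical_var_es(sample, confidence=0.975)
print(var_975, es_975)
```

Here the 97.5% VaR is 98 and the ES, the mean of 98, 99 and 100, is 99. ES is never smaller than the VaR at the same level, because it averages the losses in the tail beyond it.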

Credit Risk Measures

Probability of Default (PD) is the probability that a borrower will default on its debt over a one-year horizon. Exposure at Default (EAD) is the expected amount outstanding if the borrower defaults, and Loss Given Default (LGD) is the amount the lender expects to lose, as a proportion of the EAD. The expected loss (EL) is thus calculated as EL = PD × EAD × LGD.
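As a quick numerical illustration, the Python sketch below applies EL = PD × EAD × LGD to two loans and sums the results into a portfolio expected loss. The loan figures are hypothetical; expected losses can simply be added across loans because expectation is linear.

```python
def expected_loss(pd, ead, lgd):
    """Expected credit loss EL = PD * EAD * LGD.

    pd:  one-year probability of default (between 0 and 1)
    ead: exposure at default, in currency units
    lgd: loss given default, as a fraction of EAD (between 0 and 1)
    """
    if not (0.0 <= pd <= 1.0 and 0.0 <= lgd <= 1.0):
        raise ValueError("pd and lgd must lie between 0 and 1")
    return pd * ead * lgd


# Hypothetical loans: (PD, EAD, LGD)
loans = [
    (0.02, 1_000_000, 0.45),
    (0.05, 250_000, 0.60),
]

loan_els = [expected_loss(*loan) for loan in loans]
portfolio_el = sum(loan_els)
print(loan_els, portfolio_el)
```

For the first loan, a 2% chance of default on a 1,000,000 exposure with 45% of it lost gives an expected loss of 9,000; the second loan adds 7,500, for a portfolio expected loss of 16,500.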



About the author

The article was written in September 2021 by Jayati WALIA (ESSEC Business School, Grande Ecole Program – Master in Management, 2019-2022).